2 Deploying LLMs Using TrueFoundry

Deploying open-source large language models (LLMs) at scale while ensuring reliability, low latency, and cost-effectiveness can be a challenging endeavor. In this video, we look at how TrueFoundry simplifies the entire process. Key highlights: Curated model catalogue: explor.

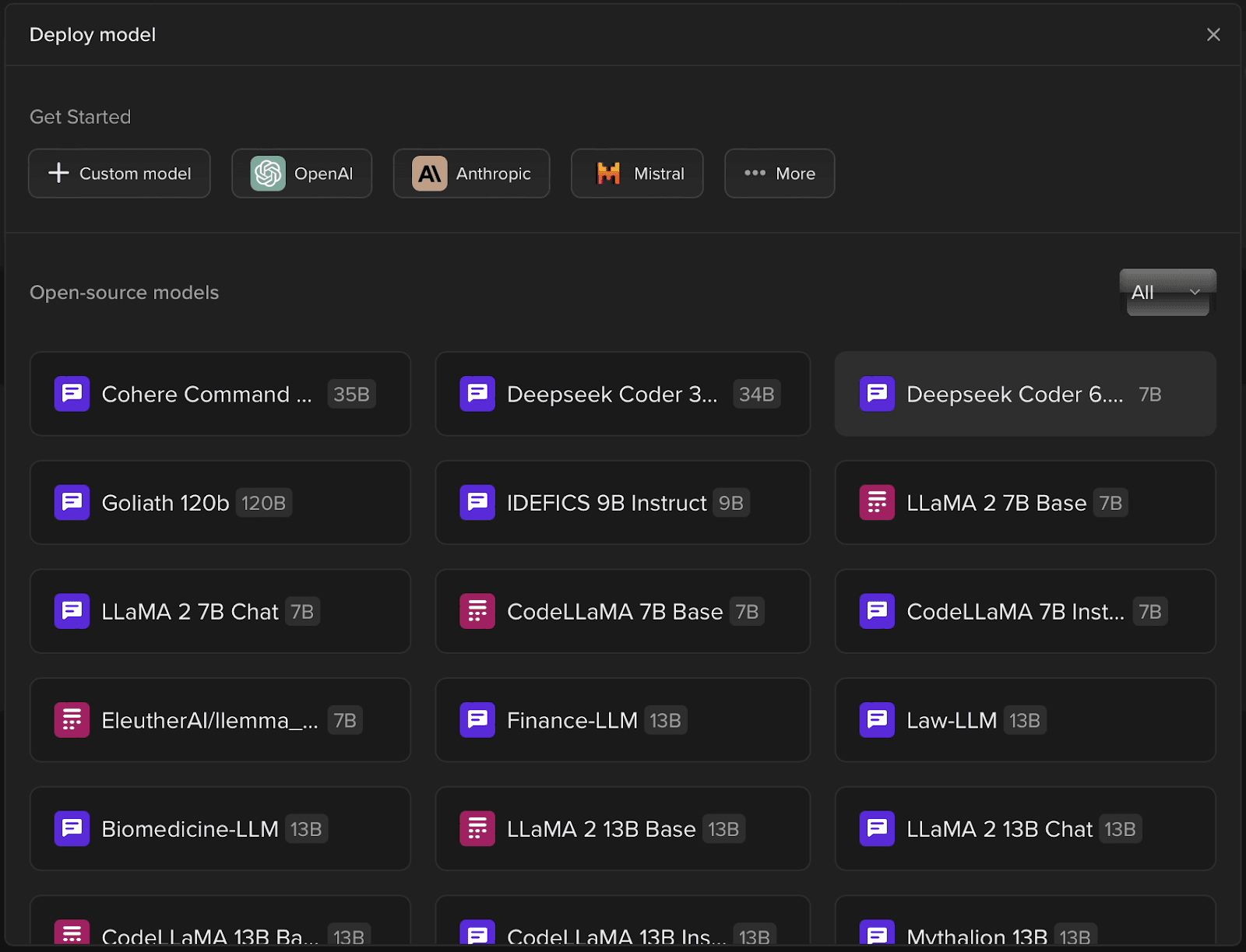

TrueFoundry simplifies LLM deployment by automatically working out the most suitable way to deploy a given LLM and configuring the correct set of GPUs. We also enable model caching by default and make it easy to configure autoscaling based on your needs. The TrueFoundry blog outlines an architecture and approach for taking LLMs to production.

Software architects and leaders can also deploy and optimize open-source language models such as Phi-3 and Llama 3 on premises. Doing so involves hardware selection, RAG architectures, security, and production-ready deployment strategies for enterprise AI.

On-premises LLMs give organizations full ownership of the stack, greater customization, and predictable costs, making them well suited to long-term AI strategies. With platforms like TrueFoundry, deploying and scaling LLMs internally becomes faster, more efficient, and easier to manage.
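To make the GPU selection and autoscaling decisions mentioned above more concrete, here is a rough back-of-the-envelope sketch in Python. It is not TrueFoundry's actual logic or API: the function names, the 20% memory overhead factor, and the per-replica throughput figure are all illustrative assumptions. It only shows the kind of arithmetic involved: weight memory is roughly parameter count times bytes per parameter, and replica count is roughly incoming load divided by per-replica capacity, clamped to configured bounds.

```python
import math


def estimate_gpu_count(params_billion, gpu_memory_gb,
                       bytes_per_param=2.0, overhead=1.2):
    """Roughly estimate how many GPUs are needed to hold a model's weights.

    Hypothetical helper, not a TrueFoundry function.
    bytes_per_param=2.0 corresponds to 16-bit weights; overhead=1.2 is an
    assumed 20% allowance for activations and cache, not a measured value.
    """
    if params_billion <= 0 or gpu_memory_gb <= 0:
        raise ValueError("params_billion and gpu_memory_gb must be positive")
    # 1e9 parameters * bytes per parameter is approximately that many GB.
    weights_gb = params_billion * bytes_per_param
    required_gb = weights_gb * overhead
    return max(1, math.ceil(required_gb / gpu_memory_gb))


def desired_replicas(requests_per_second, rps_per_replica,
                     min_replicas=1, max_replicas=10):
    """Pick a replica count for a given load, clamped to [min, max].

    Hypothetical helper: rps_per_replica is an assumed throughput that
    would have to be measured for a real model and GPU.
    """
    if rps_per_replica <= 0:
        raise ValueError("rps_per_replica must be positive")
    if min_replicas > max_replicas:
        raise ValueError("min_replicas must not exceed max_replicas")
    wanted = math.ceil(requests_per_second / rps_per_replica)
    return min(max_replicas, max(min_replicas, wanted))


if __name__ == "__main__":
    # An 8B-parameter model in 16-bit precision on 24 GB GPUs.
    print("GPUs for 8B model:", estimate_gpu_count(8, gpu_memory_gb=24))
    # A 70B-parameter model in 16-bit precision on 80 GB GPUs.
    print("GPUs for 70B model:", estimate_gpu_count(70, gpu_memory_gb=80))
    # 45 requests/s when each replica is assumed to handle 10 requests/s.
    print("Replicas at 45 rps:", desired_replicas(45, rps_per_replica=10))
```

Real sizing also depends on context length, batch size, quantization, and the serving framework, so figures like these are a starting point to validate with load tests rather than a final configuration.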

TrueFoundry offers an intuitive solution for LLM deployment and fine-tuning. With our model catalogue, companies can self-host LLMs on Kubernetes in one click, reducing inference costs by as much as 10x. Our blog shows how to deploy a Dolly v2 3B model and fine-tune a Pythia 70M model using TrueFoundry.

As with most tools of its kind, TrueFoundry provides the documentation and tutorials you need to install and start using TrueFoundry LLMOps. We suggest that you begin there.

TrueFoundry is designed for flexibility. You can deploy the LLMOps platform on your own cloud (AWS, GCP, Azure), in a private VPC, on premises, or even in air-gapped environments, ensuring data control and compliance from day one.
