Effect of Feature Scaling on Gradient-Based Learning

Figure 21: Effect of feature scaling on gradient-based learning. From the publication "Detection and quantification of rot in harvested trees using …".

One comprehensive empirical study investigated the impact of 12 feature scaling techniques on 14 machine learning algorithms across 16 classification and regression datasets.
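The source does not list the 12 techniques that the study compared. The following is a hedged sketch of four commonly used scalers in plain Python, assuming each feature column is a list of floats: min-max scaling, z-score standardization, max-abs scaling, and robust scaling by median and interquartile range (IQR). The function names and the demo column are hypothetical. When a column has zero spread, each function divides by 1 instead; this is a choice made here for illustration.

```python
import statistics
from typing import List


def min_max_scale(xs: List[float]) -> List[float]:
    """Rescale linearly onto [0, 1] using the column's min and max."""
    lo, hi = min(xs), max(xs)
    span = (hi - lo) or 1.0  # zero spread: divide by 1 instead
    return [(x - lo) / span for x in xs]


def standardize(xs: List[float]) -> List[float]:
    """Z-score: subtract the mean, divide by the population standard deviation."""
    mu = statistics.fmean(xs)
    sigma = statistics.pstdev(xs) or 1.0
    return [(x - mu) / sigma for x in xs]


def max_abs_scale(xs: List[float]) -> List[float]:
    """Divide by the largest absolute value, so results lie in [-1, 1]."""
    m = max(abs(x) for x in xs) or 1.0
    return [x / m for x in xs]


def robust_scale(xs: List[float]) -> List[float]:
    """Subtract the median and divide by the IQR, which limits outlier influence."""
    med = statistics.median(xs)
    q1, _, q3 = statistics.quantiles(xs, n=4)  # default 'exclusive' method
    iqr = (q3 - q1) or 1.0
    return [(x - med) / iqr for x in xs]


if __name__ == "__main__":
    # Hypothetical feature column with one large outlier.
    column = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 100.0]
    for scaler in (min_max_scale, standardize, max_abs_scale, robust_scale):
        print(scaler.__name__, [round(v, 3) for v in scaler(column)])
```

In this hypothetical column, the single outlier (100.0) squeezes the other eight values into a narrow band near 0 under min-max and max-abs scaling. Robust scaling keeps them spread out because the median and IQR are barely affected by the outlier.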

Feature scaling is a critical preprocessing step in machine learning that can dramatically affect model performance. By understanding and applying the appropriate scaling technique, you can improve both the training speed and the accuracy of many machine learning algorithms.

As one article puts it, scaling speeds up gradient descent: θ descends quickly on small ranges and slowly on large ranges, so it oscillates inefficiently down to the optimum when the feature ranges are very uneven.

Feature scaling matters for gradient descent because it puts all features on comparable ranges, so no single feature dominates the parameter updates merely because of its units or magnitude. The most direct benefit is faster convergence. In the figure below, the left panel shows two features on the same scale, which reach the target faster; the right panel shows two features on different scales, which take longer to reach the target.
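The convergence claim can be checked with a small experiment. This minimal pure-Python sketch runs batch gradient descent for linear regression on a hypothetical two-feature dataset twice: once on the raw features and once after z-score standardization. Each run counts iterations until the gradient norm drops below a tolerance. Everything here is an assumption chosen for illustration and is not taken from the source: the dataset, the tolerance `tol`, the cap `max_iter`, and the step-size rule. The step size is 1 divided by the trace of the Hessian, which keeps the same rule stable for both runs.

```python
import math
from typing import List


def gd_iterations(X: List[List[float]], y: List[float],
                  tol: float = 1e-6, max_iter: int = 5000) -> int:
    """Batch gradient descent on the loss (1/2n) * sum((w.x + b - y)^2).

    Returns the number of iterations until the gradient norm falls below
    `tol`, or `max_iter` if it never does.
    """
    n, d = len(X), len(X[0])
    # Step size 1 / trace(Hessian). For this loss the Hessian is
    # (1/n) * sum(z z^T) with z = [x, 1]; its trace bounds the largest
    # eigenvalue, so this step size is always stable.
    trace = sum(sum(v * v for v in row) + 1.0 for row in X) / n
    lr = 1.0 / trace
    w, b = [0.0] * d, 0.0
    for it in range(max_iter):
        residuals = [sum(wj * xj for wj, xj in zip(w, row)) + b - t
                     for row, t in zip(X, y)]
        grad_w = [sum(r * row[j] for r, row in zip(residuals, X)) / n
                  for j in range(d)]
        grad_b = sum(residuals) / n
        if math.sqrt(sum(g * g for g in grad_w) + grad_b ** 2) < tol:
            return it
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return max_iter


def standardize_columns(X: List[List[float]]) -> List[List[float]]:
    """Z-score every column: subtract its mean, divide by its std deviation."""
    n = len(X)
    stats = []
    for col in zip(*X):
        mu = sum(col) / n
        sigma = math.sqrt(sum((v - mu) ** 2 for v in col) / n) or 1.0
        stats.append((mu, sigma))
    return [[(v - mu) / s for v, (mu, s) in zip(row, stats)] for row in X]


# Hypothetical toy data: feature 1 spans [0, 1], feature 2 spans [100, 1050].
X = [[i / 19, 100.0 + 50.0 * ((7 * i) % 20)] for i in range(20)]
y = [3.0 * x1 + 0.02 * x2 + 1.0 for x1, x2 in X]

unscaled_iters = gd_iterations(X, y)
scaled_iters = gd_iterations(standardize_columns(X), y)
print(f"unscaled: {unscaled_iters} iterations, standardized: {scaled_iters}")
```

On this toy data, the raw-feature run exhausts the `max_iter` cap, while the standardized run converges well within it. The exact counts depend on the assumed data, tolerance, and step-size rule.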

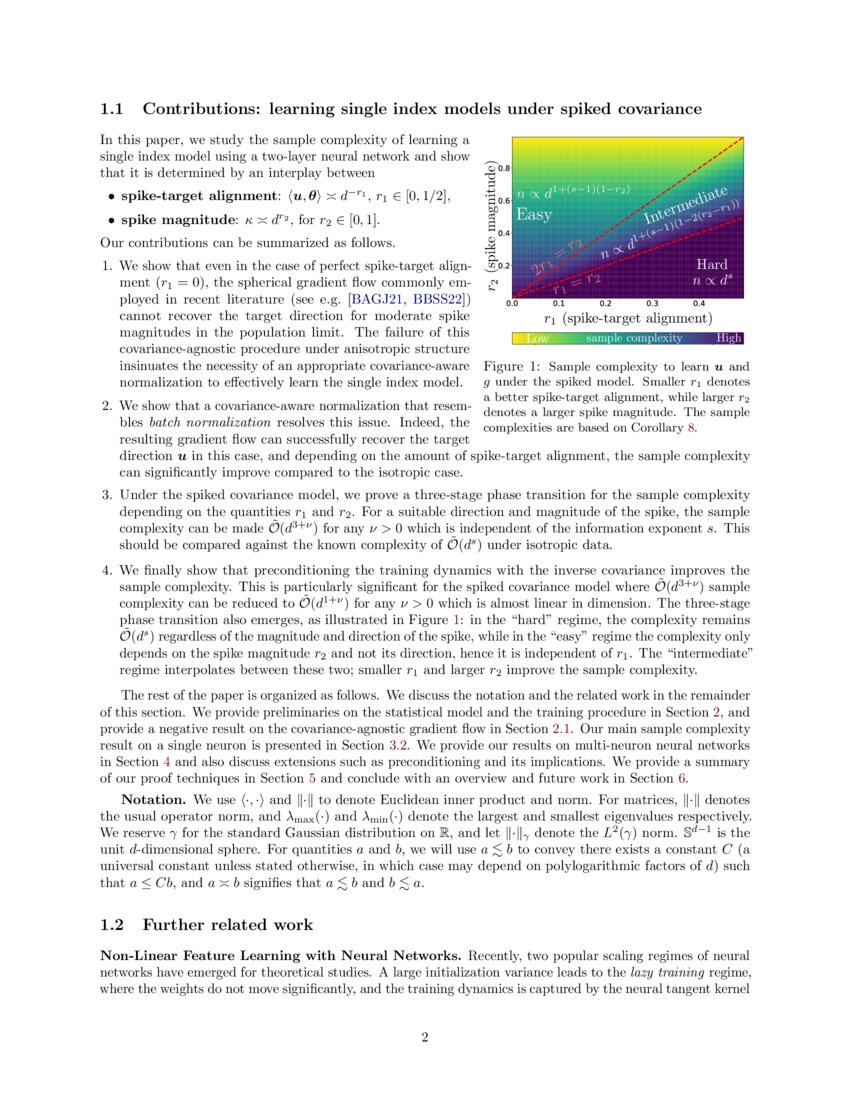

The empirical study of 12 scaling techniques mentioned earlier makes all of its source code, experimental results, and model parameters publicly available for transparency and reproducibility. It offers model-specific guidance to practitioners on choosing an appropriate feature scaling technique.

In this blog, we discuss one feature transformation technique, feature scaling, with examples, and see how much it can improve a model's accuracy.

When we scale the inputs, we reduce the difference in curvature of the error surface between directions (the ratio of the largest to the smallest curvature). This makes methods that ignore curvature, like gradient descent, work much better. When the error surface is circular (spherical), the gradient points straight at the minimum, so learning is easy.
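The curvature argument can be made concrete. For least-squares linear regression on centered inputs, the Hessian of the mean-squared error equals the population covariance matrix of the features. The ratio of its largest to smallest eigenvalue (the condition number) therefore measures how elongated the error surface's contours are. This sketch assumes two features and a hypothetical 4-point dataset, and computes that ratio before and after z-score standardization. A ratio of 1 corresponds to the circular case described above.

```python
import math
from typing import List, Tuple


def covariance_2d(X: List[List[float]]) -> Tuple[float, float, float]:
    """Population covariance entries (sxx, sxy, syy) of a two-column dataset."""
    n = len(X)
    mx = sum(row[0] for row in X) / n
    my = sum(row[1] for row in X) / n
    sxx = sum((row[0] - mx) ** 2 for row in X) / n
    syy = sum((row[1] - my) ** 2 for row in X) / n
    sxy = sum((row[0] - mx) * (row[1] - my) for row in X) / n
    return sxx, sxy, syy


def condition_number(sxx: float, sxy: float, syy: float) -> float:
    """Largest / smallest eigenvalue of [[sxx, sxy], [sxy, syy]].

    Assumes the matrix is non-singular (smallest eigenvalue > 0).
    """
    center = (sxx + syy) / 2
    radius = math.hypot((sxx - syy) / 2, sxy)
    return (center + radius) / (center - radius)


def standardize_columns(X: List[List[float]]) -> List[List[float]]:
    """Z-score every column: subtract its mean, divide by its std deviation."""
    n = len(X)
    stats = []
    for col in zip(*X):
        mu = sum(col) / n
        sigma = math.sqrt(sum((v - mu) ** 2 for v in col) / n) or 1.0
        stats.append((mu, sigma))
    return [[(v - mu) / s for v, (mu, s) in zip(row, stats)] for row in X]


# Hypothetical, uncorrelated features on very different scales.
X = [[-1.0, -1000.0], [-1.0, 1000.0], [1.0, -1000.0], [1.0, 1000.0]]
print("raw:", condition_number(*covariance_2d(X)))
print("standardized:", condition_number(*covariance_2d(standardize_columns(X))))
```

Standardization alone leaves a ratio of `(1 + |r|) / (1 - |r|)`, where `r` is the correlation between the two features. The contours become perfectly circular only when the features are also uncorrelated, as in this toy dataset.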
