AMD Instinct MI300A vs NVIDIA H100 (ServeTheHome)

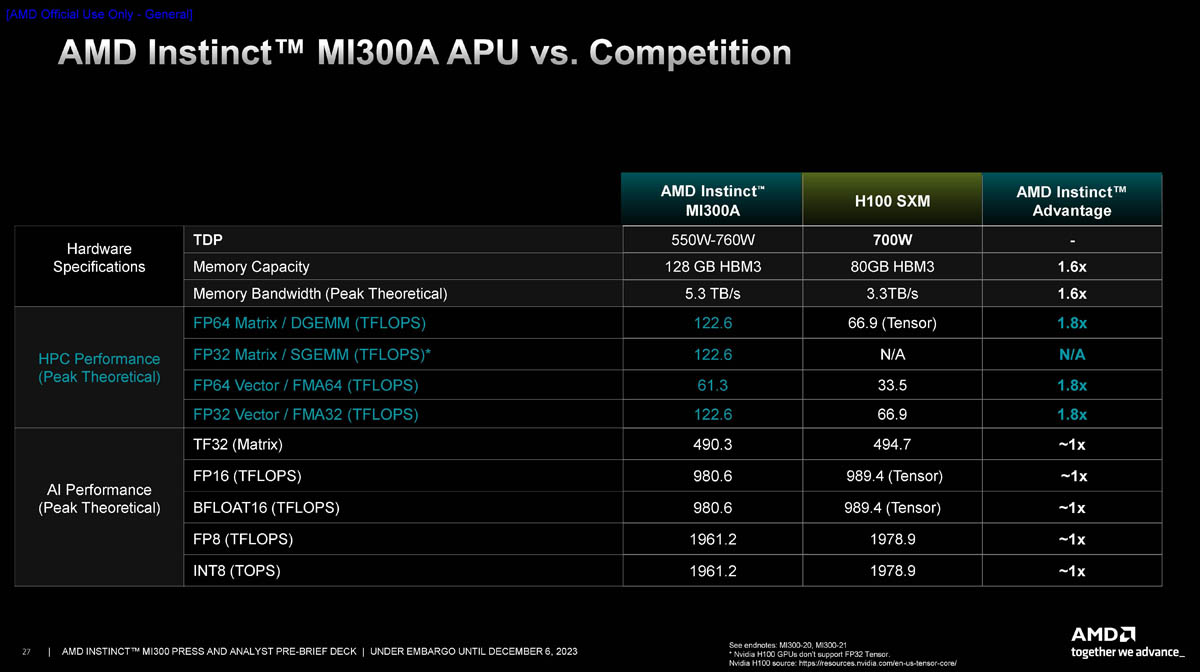

ServeTheHome is the IT professional's guide to servers, storage, networking, and high-end workstation hardware, plus great open source projects.

In the AI GPU category, we compared a professional-market GPU, the AMD Instinct MI300A with 128 GB of memory, against the NVIDIA H100 PCIe with 80 GB of VRAM to see which has better performance in key specifications, benchmark tests, power consumption, and more.

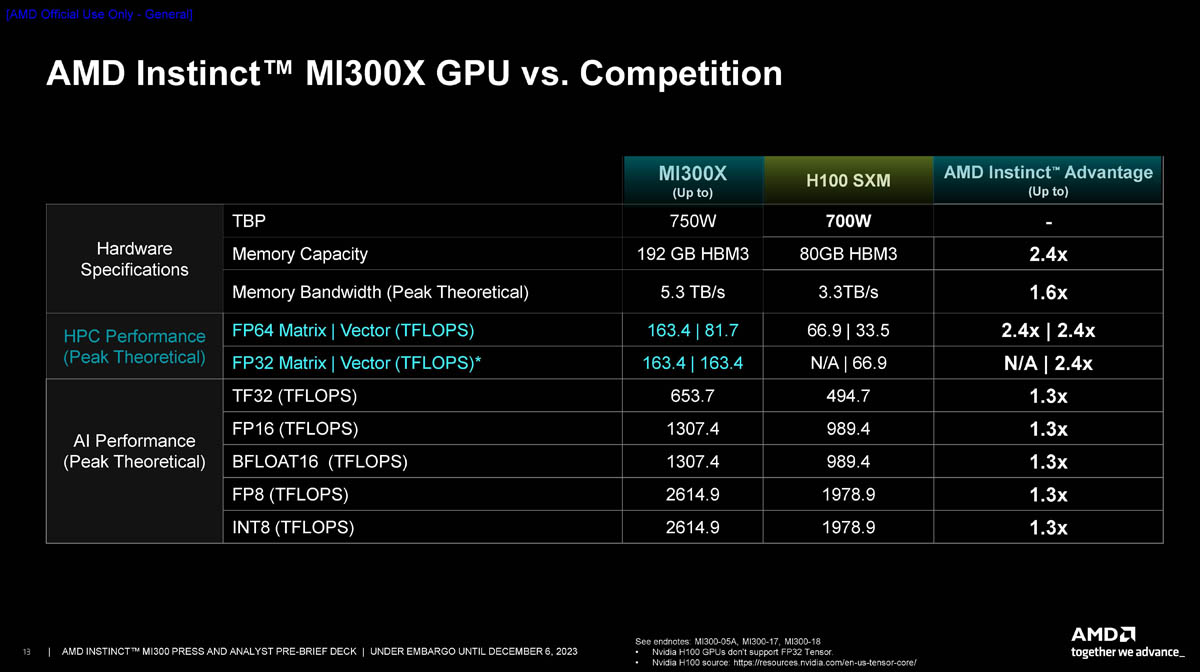

AMD Instinct MI300X vs NVIDIA H100 (ServeTheHome)

AMD's MI300X was tested by Chips and Cheese, which looked at many low-level performance metrics and compared the chip with the rival NVIDIA H100 in compute-throughput and cache-intensive benchmarks. We also compared the technical characteristics of the two accelerators, with the NVIDIA H100 PCIe 80GB on one side and a dual AMD Instinct MI300A on the other, along with their respective benchmark results. We delve into the new AMD Instinct MI300X GPU and MI300A APU, and see how AMD has built packages to go head to head with the NVIDIA H100 and win.

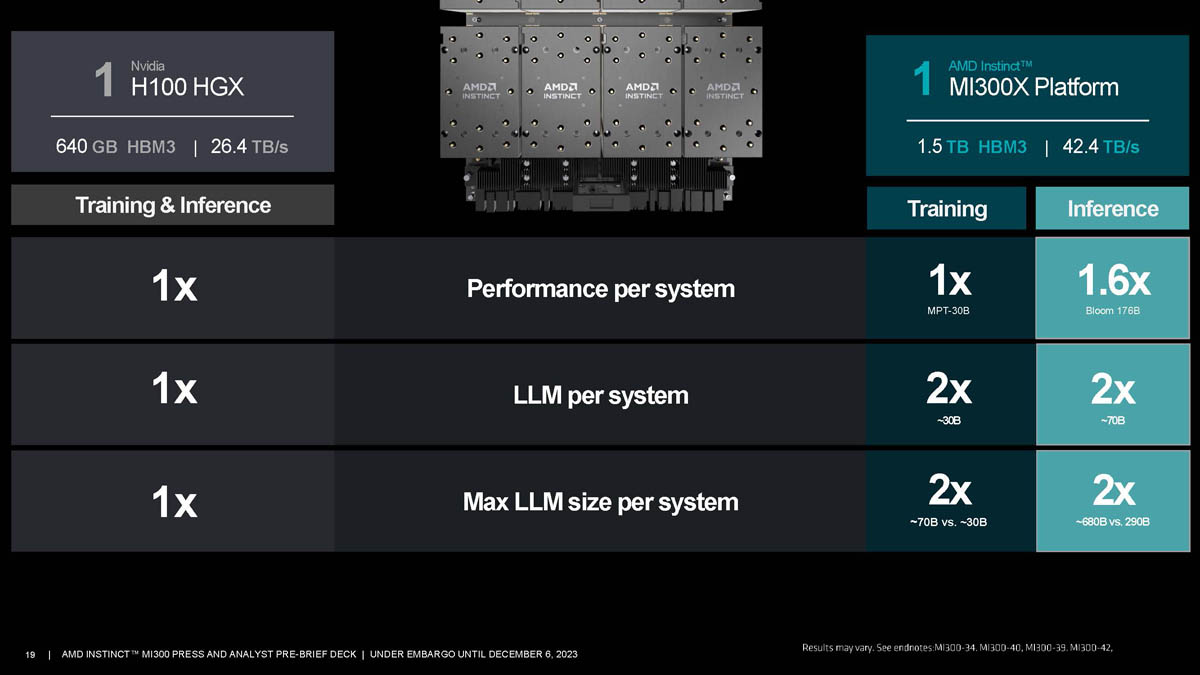

AMD Instinct MI300X vs NVIDIA H100 performance summary (ServeTheHome)

The answer might be vendor lock-in with a cloud provider, or NVIDIA's push to sell full-stack solutions like IBM Z. In either case, with almost a decade of using Arm servers, hearing from OEMs and customers in the market with them, and watching the dynamics play out, I have become less confident that this cycle fixes itself because of the pull of…

AMD says its Instinct MI300X GPU delivers up to 1.6x more performance than the NVIDIA H100 in AI inference workloads and offers similar performance in training, thus providing the…

