Build Your First Eval: Creating a Custom LLM Evaluator with an Evaluation Benchmark Dataset

Building an evaluation from the ground up requires iteration and testing. In this video, we walk through how to use Arize Phoenix to create a benchmark dataset. Learn how to build a custom LLM-as-a-judge evaluator by creating a benchmark dataset tailored to your use case, enabling rigorous evaluation beyond standard templates.
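As a rough illustration of what a custom judge involves, the sketch below pairs a prompt template with a strict parser for the model's reply. Everything here (`JUDGE_TEMPLATE`, `ALLOWED_LABELS`, `build_prompt`, `parse_label`) is a hypothetical name chosen for this example and is not part of Phoenix's API; the actual model call is deliberately left out.

```python
# Hypothetical judge prompt: the wording and field names are assumptions.
JUDGE_TEMPLATE = """You are grading an answer against a reference.

[Question]: {question}
[Reference]: {reference}
[Answer]: {answer}

Respond with exactly one word: correct or incorrect."""

# The only labels the judge is allowed to return.
ALLOWED_LABELS = ("correct", "incorrect")


def build_prompt(row: dict) -> str:
    """Fill the template from one dataset row (raises KeyError if a field is missing)."""
    return JUDGE_TEMPLATE.format(
        question=row["question"],
        reference=row["reference"],
        answer=row["answer"],
    )


def parse_label(raw: str, fallback: str = "unparseable") -> str:
    """Map a raw model reply onto an allowed label.

    Uses exact matching after cleanup, because substring matching would
    wrongly find "correct" inside "incorrect".
    """
    cleaned = raw.strip().strip("\"'.!").lower()
    return cleaned if cleaned in ALLOWED_LABELS else fallback


if __name__ == "__main__":
    example = {"question": "What is 2 + 2?", "reference": "4", "answer": "5"}
    print(build_prompt(example))
    print(parse_label(" Correct.\n"))
```

Returning an explicit fallback label instead of guessing makes unparseable replies visible when you later compare the judge against human labels.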

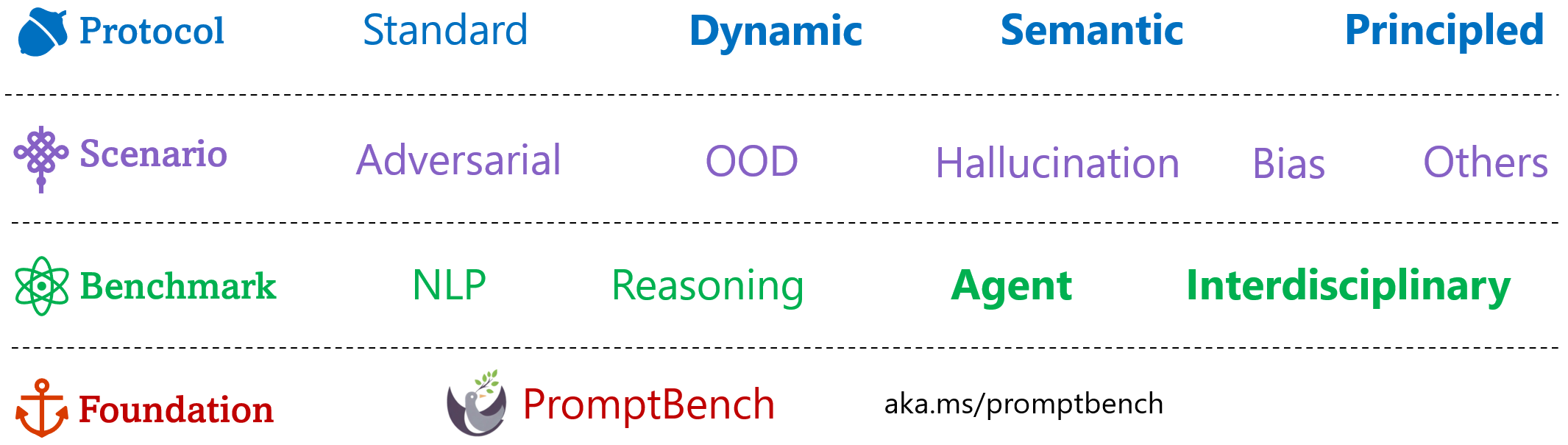

To build your own prompt-based large language model evaluator or AI-assisted annotator, you can create a custom evaluator based on a Prompty file. A Prompty file has the `.prompty` extension and is used for developing prompt templates; the Prompty asset is a Markdown file with a modified front matter.

To do this, you put together a dedicated LLM-based eval whose only task is to label data as effectively as a human labeled your "golden dataset." You then benchmark your metric against that.

Just provide your data in JSON format and specify your eval parameters in YAML. `build-eval.md` walks you through these steps, and you can supplement these instructions with the Jupyter notebooks in the examples folder to help you get started quickly.

The video explains how to build and evaluate custom benchmarks using two key tools: YourBench for dataset creation and LightEval for model evaluation. YourBench is a Hugging Face library that generates question-answer pairs from input documents.
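Benchmarking a judge against a golden dataset comes down to comparing its labels with the human labels. The following is a minimal sketch under stated assumptions: the four-row dataset is invented toy data, `stub_judge` is a keyword heuristic standing in for a real LLM call, and `benchmark_judge` is a hypothetical helper, not a function from any of the libraries mentioned here.

```python
from typing import Callable, Dict, List

# Toy golden dataset (invented for illustration): each row carries a human label.
GOLDEN_DATASET: List[Dict[str, str]] = [
    {"reference": "Paris", "answer": "Paris is the capital", "human_label": "correct"},
    {"reference": "4", "answer": "The result is 5", "human_label": "incorrect"},
    {"reference": "Jupiter", "answer": "Jupiter", "human_label": "correct"},
    {"reference": "Shakespeare", "answer": "Not Shakespeare but Marlowe", "human_label": "incorrect"},
]


def stub_judge(row: Dict[str, str]) -> str:
    """Stand-in for an LLM judge: 'correct' if the reference appears in the answer."""
    return "correct" if row["reference"].lower() in row["answer"].lower() else "incorrect"


def benchmark_judge(
    dataset: List[Dict[str, str]],
    judge: Callable[[Dict[str, str]], str],
    positive: str = "correct",
) -> Dict[str, float]:
    """Compare judge labels with human labels and return agreement metrics."""
    if not dataset:
        raise ValueError("dataset must not be empty")
    tp = fp = tn = fn = 0
    for row in dataset:
        predicted, actual = judge(row), row["human_label"]
        if predicted == positive and actual == positive:
            tp += 1
        elif predicted == positive:
            fp += 1
        elif actual == positive:
            fn += 1
        else:
            tn += 1
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # Cohen's kappa: agreement corrected for what chance alone would produce.
    p_expected = ((tp + fp) * (tp + fn) + (tn + fn) * (tn + fp)) / total**2
    kappa = (accuracy - p_expected) / (1 - p_expected) if p_expected != 1 else 1.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "kappa": kappa}


report = benchmark_judge(GOLDEN_DATASET, stub_judge)
print(report)
```

The fourth row is a deliberate false positive: the heuristic sees "Shakespeare" in the answer and labels it correct, while the human label says otherwise. Cases like this are what the benchmark is meant to surface before you trust the judge on unlabeled data.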

We can now instantiate the custom eval chain, giving its inputs proper names.

In DeepEval, anyone can easily build their own custom LLM evaluation metric that is automatically integrated within DeepEval's ecosystem. This includes running your custom metric in CI/CD pipelines and taking advantage of DeepEval's capabilities such as metric caching and multiprocessing.

MLflow's LLM evaluation documentation offers a simple example that gives a quick overview of how it works. The example builds a simple question-answering model by wrapping OpenAI's GPT-4 with a custom prompt. You can paste it into IPython or a local editor and execute it, installing missing dependencies as prompted.

If you've ever wondered how to make sure an LLM performs well on your specific task, this guide is for you! It covers the different ways you can evaluate a model, guides on designing your own evaluations, and tips and tricks from practical experience.
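To make the idea of a custom metric concrete, here is a plain-Python sketch of the general shape such a metric tends to take: a score, a pass threshold, and a small cache so repeated cases are not rescored. It does not import DeepEval and is not its base class; `EvalCase` and `KeywordOverlapMetric` are hypothetical names, and the keyword-overlap scoring is a placeholder for whatever logic (including an LLM call) your metric needs. Consult the framework's own documentation for the interface it actually expects.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)  # frozen makes instances hashable, so they can be cache keys
class EvalCase:
    """Hypothetical test case: one model output paired with what was expected."""
    input: str
    actual_output: str
    expected_output: str


class KeywordOverlapMetric:
    """Scores the fraction of expected words that appear in the actual output."""

    def __init__(self, threshold: float = 0.5) -> None:
        self.threshold = threshold
        self.score: Optional[float] = None
        self._cache: Dict[EvalCase, float] = {}

    def measure(self, case: EvalCase) -> float:
        """Compute (or fetch from cache) the score for one case."""
        if case not in self._cache:
            expected = set(case.expected_output.lower().split())
            actual = set(case.actual_output.lower().split())
            self._cache[case] = len(expected & actual) / len(expected) if expected else 0.0
        self.score = self._cache[case]
        return self.score

    def is_successful(self) -> bool:
        """True when the most recent score meets the threshold."""
        return self.score is not None and self.score >= self.threshold


if __name__ == "__main__":
    metric = KeywordOverlapMetric(threshold=0.5)
    case = EvalCase("Capital of France?", "Paris is nice", "Paris France")
    print(metric.measure(case), metric.is_successful())
```

Keeping the score on the metric object and exposing a separate pass/fail check lets the same metric be used both for reporting and as a gate in an automated pipeline.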


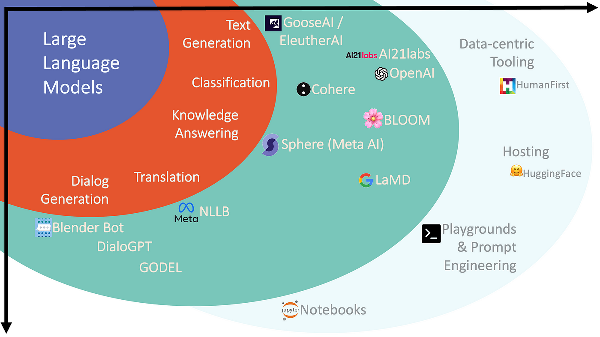

