Demystifying Local Deployment of LLMs: My Short Tutorial

Despite their growing prevalence, the operational mechanisms of LLMs remain a mystery for many, myself included. Running LLMs locally offers several advantages, including privacy, offline access, and cost efficiency. This repository provides step-by-step guides for setting up and running LLMs using various frameworks, each with its own strengths and optimization techniques.

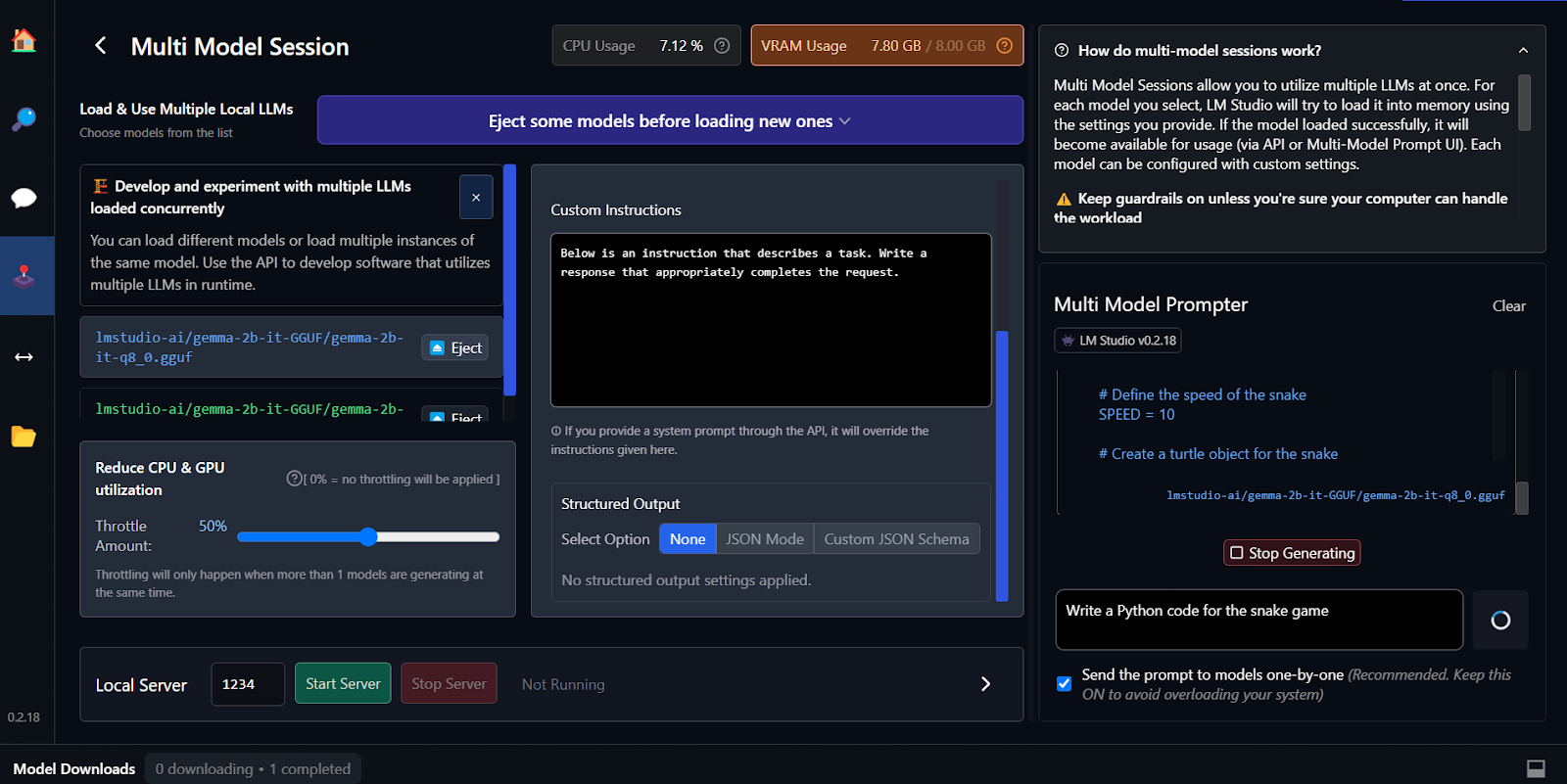

In this guide, we’ll explore how to run an LLM locally, covering hardware requirements, installation steps, model selection, and optimization techniques. Whether you’re a researcher, developer, or AI enthusiast, this guide will help you set up and deploy an LLM on your local machine efficiently. Why run an LLM locally?

Docker recently released its Model Runner, which is a game changer for running models locally. Now, just like with Ollama, you can manage, run, and deploy models locally with OpenAI ...

This guide breaks down the essential tools, integration strategies, and best practices for making local LLMs work within your existing environment. The developer advantage: tools that lower the ...

This guide is crafted to demystify the setup process of LLMs on your machine by providing clear, step-by-step instructions, insights on different Python libraries and tools, and practical tips.
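To make the idea of talking to a locally running model concrete, here is a minimal sketch that assumes the local runner exposes an OpenAI-style chat completions endpoint over HTTP. The base URL (Ollama's default port is used here), the model name, and the helper names `build_chat_request` and `ask` are assumptions for illustration, not something this guide prescribes; adjust them to whatever runner and model you actually have installed.

```python
import json
import urllib.request

# Assumption: a local runner is listening here with an OpenAI-style API.
# Ollama's default address is shown; other runners use different ports/paths.
BASE_URL = "http://localhost:11434/v1"
MODEL = "llama3.2"  # hypothetical: use whichever model you have pulled locally


def build_chat_request(prompt, model=MODEL, base_url=BASE_URL):
    """Build (but do not send) a chat-completions HTTP request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, timeout=120):
    """Send the prompt to the local server and return the reply text."""
    request = build_chat_request(prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    # OpenAI-style responses put the text under choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

With a runner started and a model available, calling `ask("Why run an LLM locally?")` should return the model's reply as a string. Only the standard library is used, so nothing extra needs to be installed to try it.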

Using large language models (LLMs) on local systems is becoming increasingly popular thanks to their improved privacy, control, and reliability. For some tasks, these models can even be more accurate and faster than ChatGPT.

There are 16 tools to run and interact with your local LLMs, all given below. We will take a look at each of them; explore as many as you can. Whether the interest is in privacy, experimentation, or offline capabilities, this guide covers everything needed to set up LLMs locally, especially if you are just getting started.

So, you're probably wondering, "Can I actually run an LLM on my local workstation?" The good news is that you likely can if you have a relatively modern laptop or desktop. However, some hardware considerations can significantly affect response speed and overall performance.
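One hardware consideration that is easy to estimate up front is memory. The sketch below is a rough back-of-the-envelope calculation, assuming memory is dominated by the model weights (parameter count times bits per weight) plus a flat overhead for runtime buffers. The 20% overhead figure and the function name `estimate_weight_memory_gb` are assumptions for illustration; real usage depends on the runtime, context length, and quantization format.

```python
def estimate_weight_memory_gb(params_billions, bits_per_weight, overhead=0.2):
    """Rough memory needed to load a model, in gigabytes (1 GB = 1e9 bytes).

    overhead is an assumed flat fraction for runtime buffers, not a measured value.
    """
    weight_bytes = params_billions * 1e9 * bits_per_weight / 8
    return weight_bytes * (1 + overhead) / 1e9


# Compare a 7-billion-parameter model at common precisions.
for bits in (16, 8, 4):
    needed = estimate_weight_memory_gb(7, bits)
    print(f"7B model at {bits}-bit: ~{needed:.1f} GB")
```

Under these assumptions, a 7B model needs roughly 16.8 GB at 16-bit precision but only about 4.2 GB at 4-bit, which is why quantized models are the usual starting point on laptops.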

