Deploying LLMs at Scale - TrueFoundry

There are multiple model servers available for hosting LLMs, each with various configuration parameters to tune for the best performance in your use case. TGI, vLLM, and OpenLLM are a few of the most common frameworks for hosting these LLMs. You can find a detailed analysis in this blog.

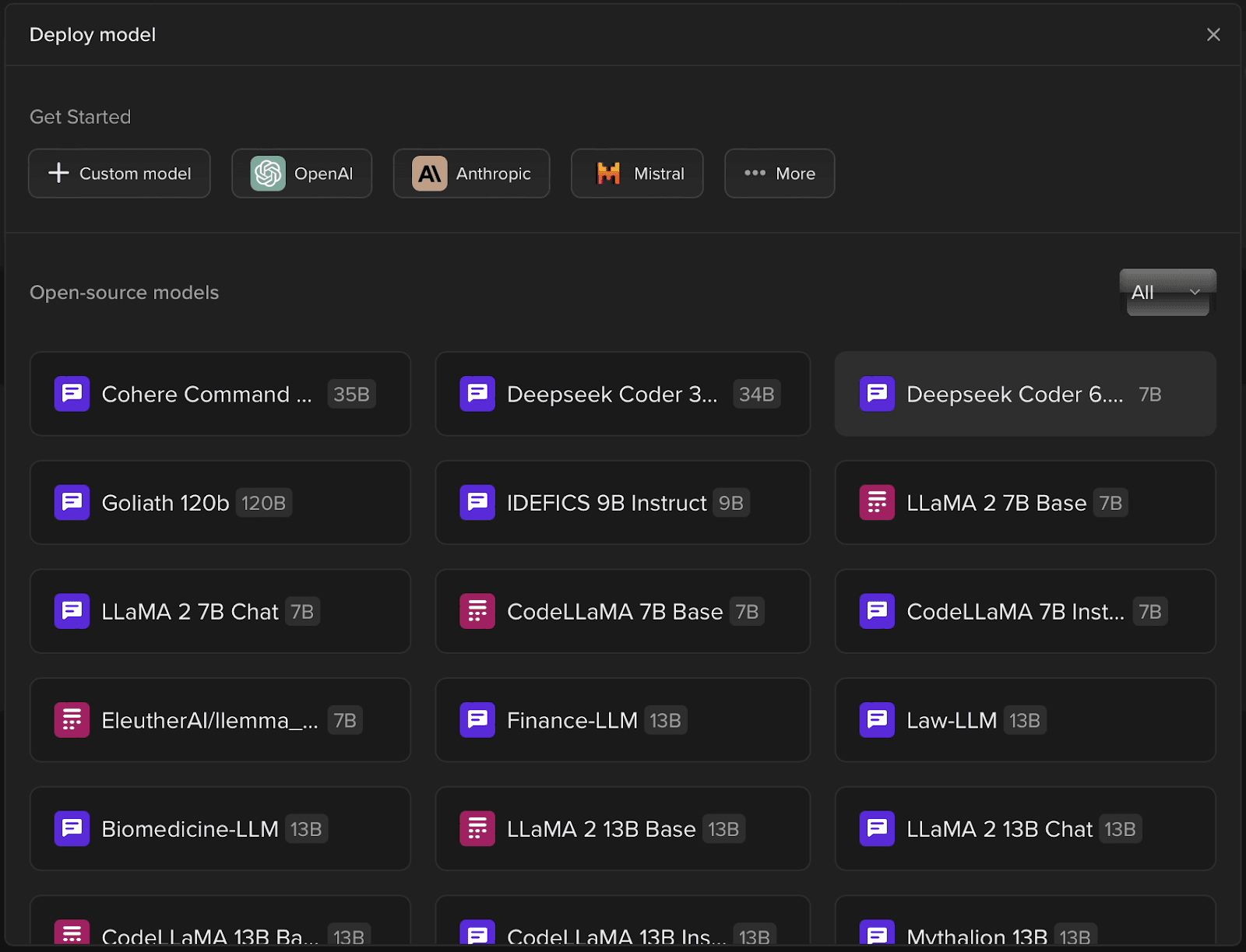

TrueFoundry simplifies LLM deployment by automatically working out the optimal way to deploy any LLM and configuring the correct set of GPUs. We also enable model caching by default and make it easy to configure autoscaling based on your needs.

Large language models (LLMs) like GPT-4, Claude, and Llama 3 have transformed the way we interact with AI, powering applications from chatbots to code generation and search engines. However, deploying these massive models at scale presents significant engineering and operational challenges. As organizations aim to integrate LLMs into production systems, they must confront issues across compute.

In this video, we're diving into how TrueFoundry simplifies this entire process, making LLM deployment a breeze. Key highlights: Curated model catalogue: explor.

But deploying LLMs in enterprise environments isn't as simple as plugging into an API. It demands governance, observability, privacy safeguards, and tailored infrastructure.
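As a rough illustration of the autoscaling mentioned earlier, the sketch below computes a replica count with the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler: desired = ceil(current replicas x current metric / target metric). It is not TrueFoundry's implementation. The function name, the requests-per-second metric, and the min/max bounds are hypothetical choices made for the example, and the real autoscaler applies extra details (such as a tolerance band) that are omitted here.

```python
import math


def desired_replicas(current_replicas: int,
                     current_rps_per_replica: float,
                     target_rps_per_replica: float,
                     min_replicas: int = 1,
                     max_replicas: int = 8) -> int:
    """Return a replica count using the proportional scaling rule.

    All parameter names and default bounds are assumptions for this sketch.
    """
    if target_rps_per_replica <= 0:
        raise ValueError("target_rps_per_replica must be positive")
    # Scale the current replica count by how far the observed load is
    # from the target load, rounding up so capacity is never undershot.
    raw = math.ceil(
        current_replicas * current_rps_per_replica / target_rps_per_replica
    )
    # Clamp the result to the configured bounds.
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # Two replicas each seeing 15 req/s against a target of 10 req/s.
    print(desired_replicas(2, 15.0, 10.0))
```

Rounding up and clamping keeps the service from dropping below a floor during quiet periods or scaling past a GPU budget during spikes.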

TrueFoundry offers an intuitive solution for LLM deployment and fine-tuning. With our model catalogue, companies can self-host LLMs on Kubernetes in just one click, reducing inference costs by 10x. Discover how to deploy a Dolly v2 3B model and fine-tune a Pythia 70M using TrueFoundry in our blog.

TrueFoundry empowers enterprises to run secure, scalable, and high-performance LLMs entirely within their own infrastructure. With prebuilt deployment pipelines, OpenAI-compatible APIs, and full observability, you can take control of your GenAI strategy without vendor lock-in or data risk.

TrueFoundry LLMOps is well suited to the deployment side of LLMs, letting teams deploy to development stages as painlessly as possible. Its deployment capabilities enable rapid scaling, so models can handle varying loads without impacting performance.
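To make the "OpenAI-compatible API" point concrete, here is a minimal sketch of how a client might build a chat request for a self-hosted endpoint using only the Python standard library. The base URL, model name, and helper name are hypothetical placeholders, not values from TrueFoundry's documentation. The only assumption about the server is that it exposes the `/v1/chat/completions` route that OpenAI-compatible servers conventionally provide.

```python
import json
import urllib.request
from typing import Optional


def build_chat_request(base_url: str,
                       model: str,
                       prompt: str,
                       api_key: Optional[str] = None) -> urllib.request.Request:
    """Build (but do not send) a POST request for a chat completion.

    base_url and model are placeholders supplied by the caller.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 128,
    }
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Bearer-token auth, as used by OpenAI-style APIs.
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical endpoint and model name, for illustration only.
    req = build_chat_request("http://localhost:8000", "my-llm", "Hello!")
    print(req.get_method(), req.full_url)
    # To actually send it: urllib.request.urlopen(req) and parse the JSON body.
```

Because the request shape matches the OpenAI format, existing client code can usually be pointed at a self-hosted deployment by changing only the base URL and model name.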

