Evaluating and Comparing LLMs with Prompts in Label Studio

Strategies for Evaluating LLMs in Label Studio

Learn how to evaluate LLMs in Label Studio using ground-truth and model-comparison workflows, so you can improve accuracy, track cost, and scale labeling with confidence. Comparing LLMs in Label Studio helps you select the best model for your data labeling tasks. You've already discovered the power of using an LLM to pre-label your data, but how do you know which model is best for the job?
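As a rough illustration of a ground-truth workflow, the sketch below scores each model's labels against human-verified ground truth and estimates spend from token counts. It is plain Python, not a Label Studio API: the `Prediction` record, the model names, and the per-1K-token prices are hypothetical placeholders.

```python
from dataclasses import dataclass

# Hypothetical prices in USD per 1,000 tokens; replace with your provider's real rates.
PRICE_PER_1K_TOKENS = {"model-a": 0.5, "model-b": 2.0}


@dataclass
class Prediction:
    task_id: int
    model: str
    label: str
    tokens: int  # prompt + completion tokens billed for this call


def evaluate(predictions, ground_truth):
    """Per-model accuracy on tasks that have ground truth, plus cost over all calls."""
    stats = {}
    for p in predictions:
        s = stats.setdefault(p.model, {"correct": 0, "scored": 0, "tokens": 0})
        s["tokens"] += p.tokens  # every call is billed, whether or not it is scored
        if p.task_id in ground_truth:
            s["scored"] += 1
            s["correct"] += int(p.label == ground_truth[p.task_id])
    report = {}
    for model, s in stats.items():
        report[model] = {
            # None when none of this model's predictions overlap the ground truth
            "accuracy": s["correct"] / s["scored"] if s["scored"] else None,
            "cost_usd": s["tokens"] / 1000 * PRICE_PER_1K_TOKENS[model],
        }
    return report


if __name__ == "__main__":
    ground_truth = {1: "positive", 2: "negative", 3: "negative"}
    preds = [
        Prediction(1, "model-a", "positive", 120),
        Prediction(2, "model-a", "positive", 110),
        Prediction(3, "model-a", "negative", 130),
        Prediction(1, "model-b", "positive", 120),
        Prediction(2, "model-b", "negative", 110),
        Prediction(3, "model-b", "negative", 130),
    ]
    for model, row in evaluate(preds, ground_truth).items():
        print(model, row)
```

With this made-up data, `model-b` is more accurate (3/3 versus 2/3) but four times as expensive per token, which is the kind of accuracy-versus-cost trade-off a ground-truth comparison is meant to surface.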

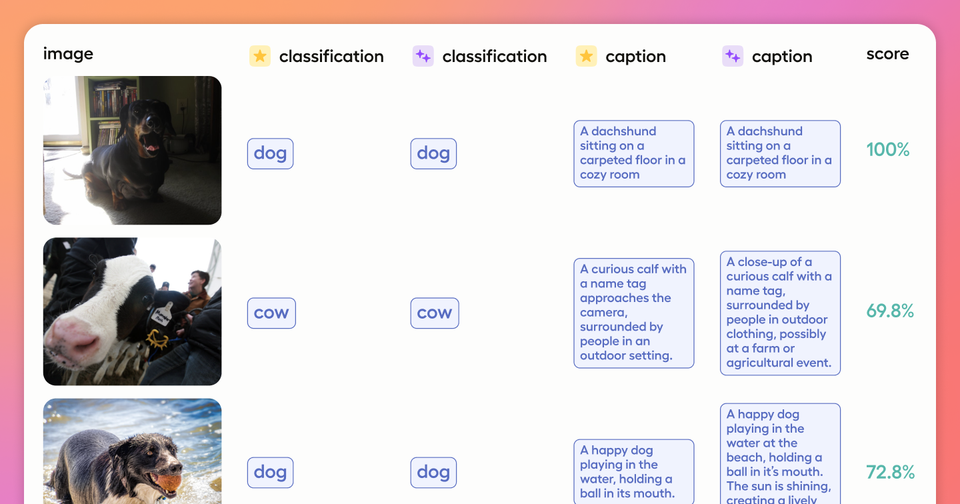

Live webinar: Compare LLMs with Prompts in Label Studio. Choosing the right large language model (LLM) for your data labeling tasks can be a challenge. How do you know which model… Join ML evangelist Micae…

This example demonstrates how to set up Prompts to evaluate whether LLM-generated output text is classified as harmful, offensive, or inappropriate. To begin, create a new Label Studio project by importing text data consisting of LLM-generated outputs.

In this template, we'll show you how to populate Label Studio with the outputs of each model you want to compare, so that annotators can click whichever answer is better.
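A minimal sketch of the harmful-content project setup, assuming each LLM output is a plain string. It defines a labeling config with Label Studio's standard `Text`, `Choices`, and `Choice` tags and wraps the outputs in the `[{"data": {...}}]` JSON shape Label Studio accepts for task import. The data key `llm_output`, the control names `output` and `safety`, and the four-label set are illustrative assumptions, not something the template prescribes.

```python
import json

# Labeling config using standard Label Studio tags. The names "output",
# "safety", and the data key "llm_output" are illustrative assumptions.
LABEL_CONFIG = """<View>
  <Text name="output" value="$llm_output"/>
  <Choices name="safety" toName="output" choice="multiple">
    <Choice value="Harmful"/>
    <Choice value="Offensive"/>
    <Choice value="Inappropriate"/>
    <Choice value="Safe"/>
  </Choices>
</View>"""


def build_tasks(llm_outputs):
    """Wrap raw LLM output strings in Label Studio's task import format."""
    return [{"data": {"llm_output": text}} for text in llm_outputs]


if __name__ == "__main__":
    # Hypothetical LLM outputs to be reviewed.
    outputs = ["Here is a simple banana bread recipe...", "Nobody cares what you think."]
    print(json.dumps(build_tasks(outputs), indent=2))
```

`LABEL_CONFIG` would go into the project's labeling setup and the printed JSON would be uploaded as the project's data; `choice="multiple"` lets a single output carry more than one flag.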

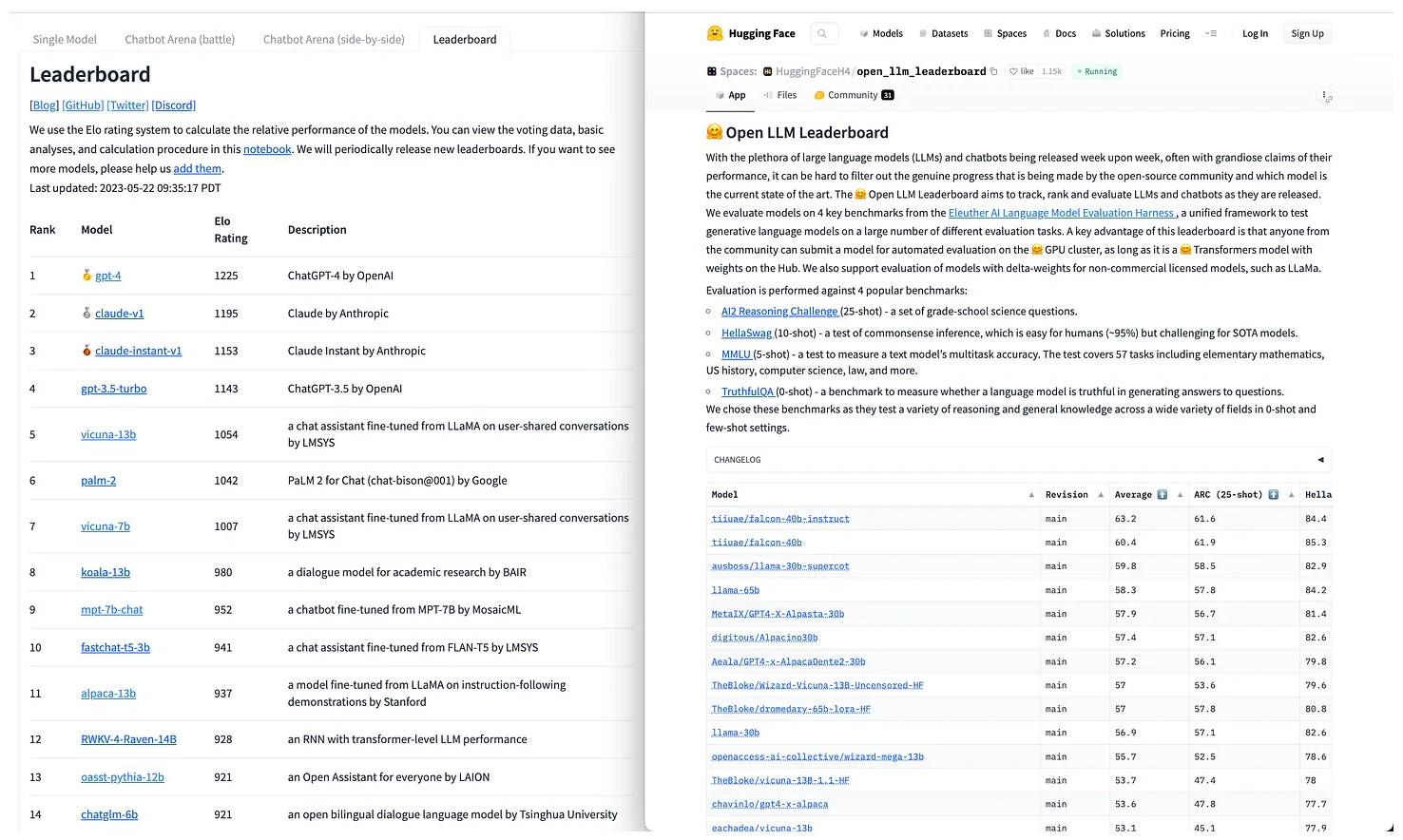

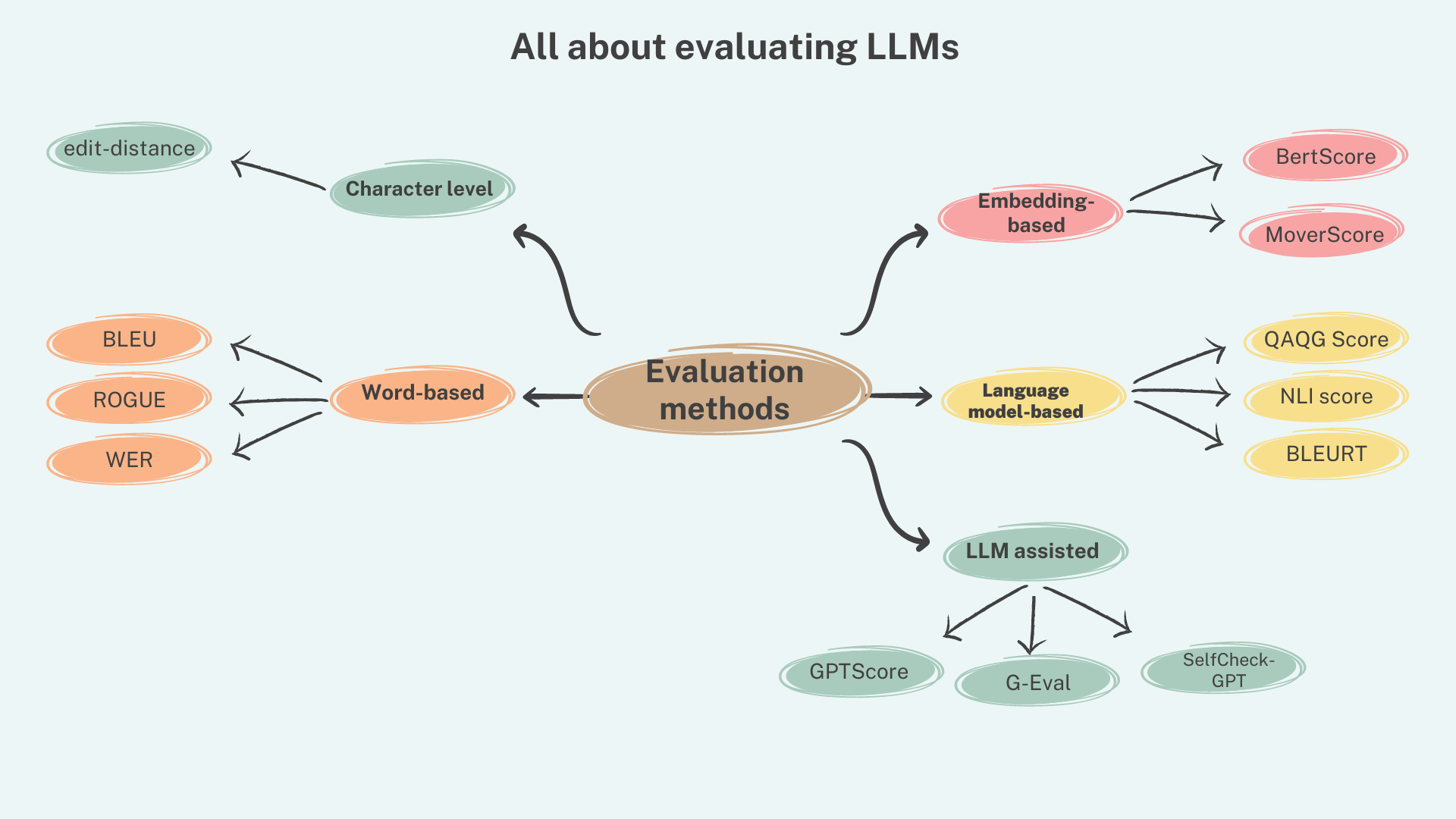
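The side-by-side comparison template can be approximated as follows: pair two models' answers to the same prompt, randomize which side each model appears on, and turn annotators' picks into win rates. The config uses Label Studio's `Pairwise` control tag; its attributes should be double-checked against the tag reference. The model names, the extra `answer*_model` data keys, and the `picks` list (however you extract the chosen side from your export) are hypothetical.

```python
import random
from collections import Counter

# Side-by-side config. Pairwise links two object tags; verify attribute
# names against the Label Studio tag docs before relying on this.
LABEL_CONFIG = """<View>
  <Text name="prompt" value="$prompt"/>
  <Text name="answer1" value="$answer1"/>
  <Text name="answer2" value="$answer2"/>
  <Pairwise name="preference" toName="answer1,answer2"/>
</View>"""


def build_pairwise_tasks(prompts, outputs_a, outputs_b, seed=0):
    """Pair two models' answers per prompt, shuffling left/right placement."""
    if not (len(prompts) == len(outputs_a) == len(outputs_b)):
        raise ValueError("prompts and outputs must have the same length")
    rng = random.Random(seed)  # seeded so the layout is reproducible
    tasks = []
    for prompt, a, b in zip(prompts, outputs_a, outputs_b):
        swap = rng.random() < 0.5  # randomize sides to reduce position bias
        tasks.append({"data": {
            "prompt": prompt,
            "answer1": b if swap else a,
            "answer2": a if swap else b,
            # Extra keys record which model sits in which slot, for scoring later.
            "answer1_model": "model_b" if swap else "model_a",
            "answer2_model": "model_a" if swap else "model_b",
        }})
    return tasks


def win_rates(tasks, picks):
    """picks[i] is "answer1" or "answer2": the slot the annotator chose for tasks[i]."""
    if not picks:
        raise ValueError("no judgments to score")
    wins = Counter(tasks[i]["data"][f"{slot}_model"] for i, slot in enumerate(picks))
    return {m: wins[m] / len(picks) for m in ("model_a", "model_b")}
```

Randomizing placement matters because annotators can favor whichever answer appears first; storing the slot-to-model mapping in the task data keeps the shuffle reversible when you tally results.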

Evaluating LLMs: Effective Techniques for Improved Results

Use Prompts in Label Studio by HumanSignal to pre-label data or to compare and evaluate models. There are several strategies for evaluating LLM responses, depending on the complexity of the system and your specific evaluation goals. The simplest form of LLM system evaluation is to moderate a single response generated by the LLM.

For choosing which LLM to use, there are two main methods: comparing LLMs on different benchmark tests and testing LLMs on AI leaderboard challenges. Use Prompts to evaluate and refine your LLM prompts, and then generate predictions to automate your labeling process. All you need to get started is an API key for an LLM deployment and a Label Studio project.
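To make "moderate a single response" and "generate predictions" concrete, here is a sketch that normalizes one LLM verdict and packages it as a pre-annotation in the task-with-`predictions` JSON that Label Studio can import. This is not how the Prompts feature works internally; the allowed label set, the `safety`/`output` control names, and the `model_version` string are assumptions to adapt to your own project.

```python
import json

# Labels the hypothetical moderation prompt is allowed to return.
ALLOWED_LABELS = {"Harmful", "Offensive", "Inappropriate", "Safe"}


def parse_label(raw_response):
    """Normalize a one-word LLM verdict such as ' harmful. ' to 'Harmful'."""
    label = raw_response.strip().rstrip(".").capitalize()
    if label not in ALLOWED_LABELS:
        raise ValueError(f"unexpected LLM label: {raw_response!r}")
    return label


def to_prediction_task(text, llm_label, model_version="llm-v1"):
    """Build one task with a pre-annotation in Label Studio's predictions format.

    Assumes a labeling config whose <Choices name="safety"> points at
    <Text name="output" value="$llm_output"/>; adjust the names to match yours.
    """
    return {
        "data": {"llm_output": text},
        "predictions": [{
            "model_version": model_version,
            "result": [{
                "from_name": "safety",
                "to_name": "output",
                "type": "choices",
                "value": {"choices": [llm_label]},
            }],
        }],
    }


if __name__ == "__main__":
    task = to_prediction_task("Nobody cares what you think.", parse_label(" offensive. "))
    print(json.dumps(task, indent=2))
```

Rejecting labels outside the allowed set, rather than silently importing them, keeps free-form LLM chatter from turning into pre-annotations that reviewers then have to clean up.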

Accelerate Labeling and Model Evaluation with Prompts, Now Generally Available (HumanSignal)
