Evaluating LLMs for Text-to-SQL Generation with Complex SQL Workload

Limin Ma, Ken Pu, Ying Zhu

This study presents a comparative analysis of a complex SQL benchmark, TPC-DS, with two existing text-to-SQL benchmarks, BIRD and Spider. Our findings reveal that TPC-DS queries exhibit a significantly higher level of structural complexity than the queries in the other two benchmarks.
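To make "structural complexity" concrete, the sketch below counts a few structural features of a SQL string. This summary does not list the metrics the paper actually uses, so the feature set, the `FEATURE_PATTERNS` table, and the `structural_features` function are all assumptions chosen for illustration. The counting is keyword-based, so keywords inside string literals or comments would be miscounted.

```python
import re

# Hypothetical feature set: the source does not say which metrics the paper uses.
FEATURE_PATTERNS = {
    "joins": r"\bjoin\b",
    "selects": r"\bselect\b",
    "with_clauses": r"\bwith\b",
    "aggregates": r"\b(?:sum|avg|count|min|max)\s*\(",
    "set_ops": r"\b(?:union|intersect|except)\b",
}


def structural_features(sql: str) -> dict[str, int]:
    """Count rough structural features of a SQL query by keyword matching."""
    text = sql.lower()
    counts = {
        name: len(re.findall(pattern, text))
        for name, pattern in FEATURE_PATTERNS.items()
    }
    # Every SELECT beyond the first is treated as a nested or chained subquery.
    counts["subqueries"] = max(counts.pop("selects") - 1, 0)
    return counts


# Illustrative queries written for this sketch, not taken from any benchmark.
simple_query = "SELECT s_store_name FROM store WHERE s_state = 'TN'"
complex_query = """
WITH totals AS (
    SELECT ss_store_sk, SUM(ss_net_paid) AS paid
    FROM store_sales JOIN date_dim ON ss_sold_date_sk = d_date_sk
    GROUP BY ss_store_sk
)
SELECT s_store_name, paid
FROM totals JOIN store ON ss_store_sk = s_store_sk
WHERE paid > (SELECT AVG(paid) FROM totals)
"""

simple_counts = structural_features(simple_query)
complex_counts = structural_features(complex_query)
```

Averaging counts like these over every query in a benchmark would give one rough way to compare workloads, though a real study would more likely parse the SQL than match keywords.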

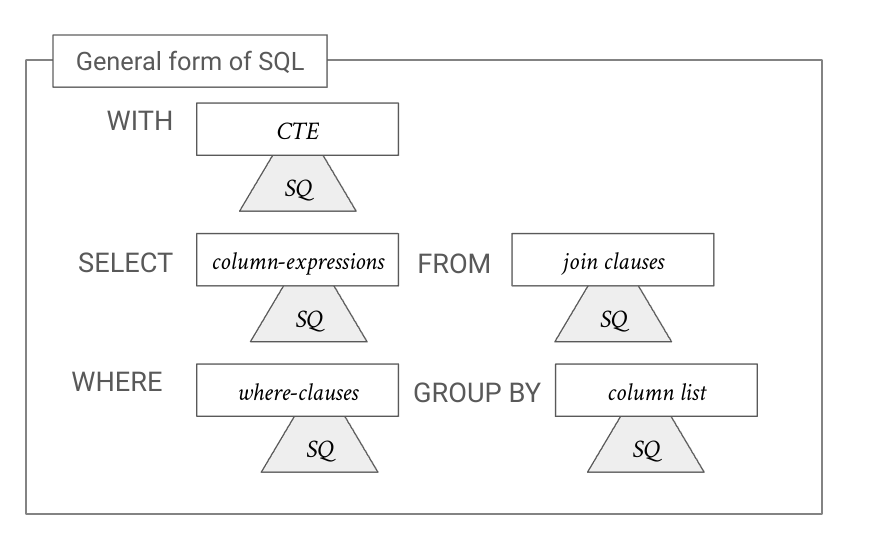

Evaluating LLMs for Text-to-SQL With Prem Text2SQL

Original paper: arxiv.org/abs/2407.19517

The main problem this paper addresses is evaluating and comparing the performance of large language models (LLMs) at generating complex SQL queries, particularly highly complex ones. To evaluate how well LLMs generate SQL queries from natural language (NL), two major benchmarks have been proposed: BIRD and Spider. BIRD is a text-to-SQL benchmark consisting of over 12,000 NL questions and their corresponding SQL queries. The authors used 11 distinct LLMs to generate SQL queries from the query descriptions provided by the TPC-DS benchmark.

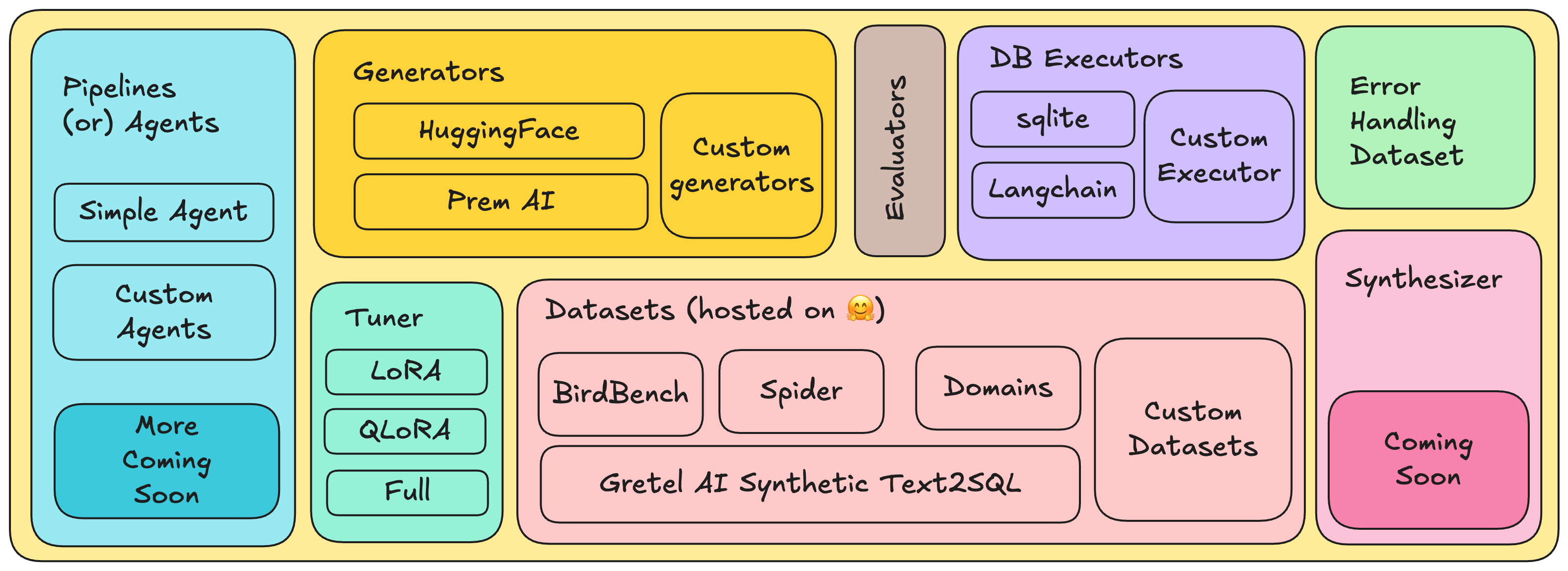

The prompt engineering process incorporated both the query description as outlined in the TPC-DS specification and the database schema of TPC-DS. Spider, the second of the two existing benchmarks, contains around 10,000 questions mapped to 5,693 SQL queries. The results of this study can guide future research in the development of more sophisticated text-to-SQL benchmarks.

We evaluated and compared methods of enhancing the latest text-to-SQL conversion techniques, focusing on the integration of intelligent agents and large language models (LLMs) for enterprise analysis.
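The prompt construction mentioned above, which combines a TPC-DS query description with the database schema, could look roughly like the sketch below. The paper's actual prompt template is not reproduced in this summary, so the function name `build_prompt`, the `dialect` parameter, the prompt wording, and the example description are all hypothetical. The schema excerpt is a trimmed illustration rather than the full TPC-DS DDL.

```python
def build_prompt(description: str, schema_ddl: str, dialect: str = "PostgreSQL") -> str:
    """Assemble a text-to-SQL prompt from a query description and schema DDL.

    The wording is an assumption for illustration, not the paper's template.
    """
    return (
        f"You are an expert {dialect} developer.\n\n"
        "Database schema:\n"
        f"{schema_ddl.strip()}\n\n"
        "Task description:\n"
        f"{description.strip()}\n\n"
        "Write a single SQL query that answers the task. Return only SQL."
    )


# Trimmed, illustrative schema excerpt (not the complete TPC-DS schema).
schema_excerpt = """
CREATE TABLE date_dim (d_date_sk INTEGER PRIMARY KEY, d_year INTEGER);
CREATE TABLE store_sales (ss_sold_date_sk INTEGER, ss_net_paid DECIMAL(7,2));
"""

# Hypothetical description written for this sketch, not quoted from the specification.
example_description = "Report the total net amount paid in store sales for each year."

prompt = build_prompt(example_description, schema_excerpt)
```

The resulting string would then be sent to each model under evaluation. Placing the schema before the task description is a design choice made here, not something the source specifies.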
