Evolution Or Revolution The Ascent Of Multimodal Large Language Models Tokes Compare

In the dynamic realm of artificial intelligence (AI), multimodal large language models (MLLMs) stand out as a potentially revolutionary development. With their precision, adaptability, and contextual awareness, they promise to reshape our understanding of AI capabilities. We analyze these models in detail across a wide range of tasks, including visual grounding, image generation and editing, visual understanding, and domain-specific applications.

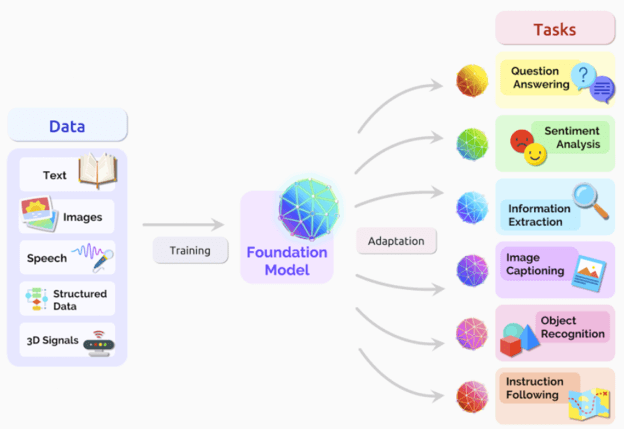

Inspired by the success of large language models (LLMs), researchers are devoting significant effort to developing multimodal large language models (MLLMs). These models integrate visual and textual modalities while providing a dialogue-based interface and instruction-following capabilities. We offer a practical guide to the technical aspects of multimodal models and compile the latest algorithms and commonly used datasets as resources for experimentation and evaluation. Developing an MLLM entails merging single-modality architectures for vision and language, connecting them through vision-to-language adapters, and devising new training approaches. Recently, MLLMs exemplified by GPT-4V have become a rising research hotspot; they use a powerful LLM as a "brain" to perform multimodal tasks.
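To make the idea of a vision-to-language adapter more concrete, here is a minimal sketch assuming the simplest possible design: a single linear projection that maps each visual feature vector (dimension `d_v`, from a vision encoder) into the LLM's token-embedding space (dimension `d_t`), after which the projected "visual tokens" are prepended to the text embeddings. The function names, dimensions, and random weights are hypothetical and chosen for illustration only; real MLLMs may use MLPs, cross-attention, or query-based resamplers instead, and learn the weights during training.

```python
import random
from typing import List

Vector = List[float]


def linear_adapter(features: List[Vector], weights: List[Vector], bias: Vector) -> List[Vector]:
    """Hypothetical adapter: project each visual feature (dim d_v) to the LLM embedding size (dim d_t).

    `weights` has d_t rows of length d_v, so output[k] = sum_j weights[k][j] * feature[j] + bias[k].
    """
    projected = []
    for f in features:
        if len(f) != len(weights[0]):
            raise ValueError("feature dimension does not match adapter input dimension")
        projected.append([sum(w * x for w, x in zip(row, f)) + b for row, b in zip(weights, bias)])
    return projected


def build_input_sequence(visual_tokens: List[Vector], text_embeddings: List[Vector]) -> List[Vector]:
    """Place the projected visual tokens before the text tokens, forming one input sequence for the LLM."""
    return visual_tokens + text_embeddings


if __name__ == "__main__":
    rng = random.Random(0)
    d_v, d_t = 4, 3  # hypothetical vision-feature and LLM-embedding sizes
    patches = [[rng.gauss(0, 1) for _ in range(d_v)] for _ in range(2)]  # 2 image-patch features
    W = [[rng.gauss(0, 0.02) for _ in range(d_v)] for _ in range(d_t)]  # untrained, random weights
    b = [0.0] * d_t
    text = [[rng.gauss(0, 1) for _ in range(d_t)] for _ in range(5)]  # 5 text-token embeddings
    seq = build_input_sequence(linear_adapter(patches, W, b), text)
    print(len(seq), len(seq[0]))  # prints: 7 3
```

The key design point is that the adapter only has to align dimensions and representation spaces; both the vision encoder and the LLM can stay unchanged, which is why adapters are a common, comparatively cheap way to join single-modality models.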

From Large Language Models To Large Multimodal Models Datafloq

This paper presents the first survey on multimodal large language models (MLLMs), highlighting their potential as a path to artificial general intelligence. These models can handle visual and textual modalities both as input and as output. In this paper, we provide a comprehensive review of recent vision-based MLLMs, analyzing, among other aspects, their architectural choices. As science and technology progress, large language models and multimodal models are likely to be adopted across an ever wider range of domains and applied to a great variety of tasks.

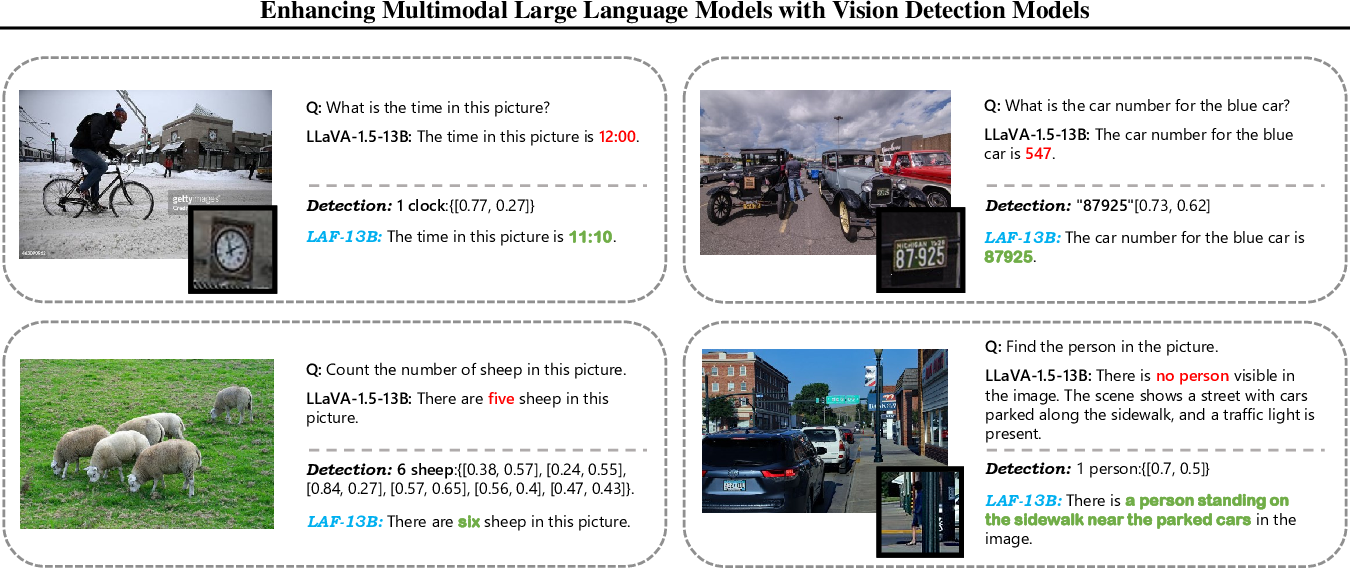

Enhancing Multimodal Large Language Models With Vision Detection Models An Empirical Study

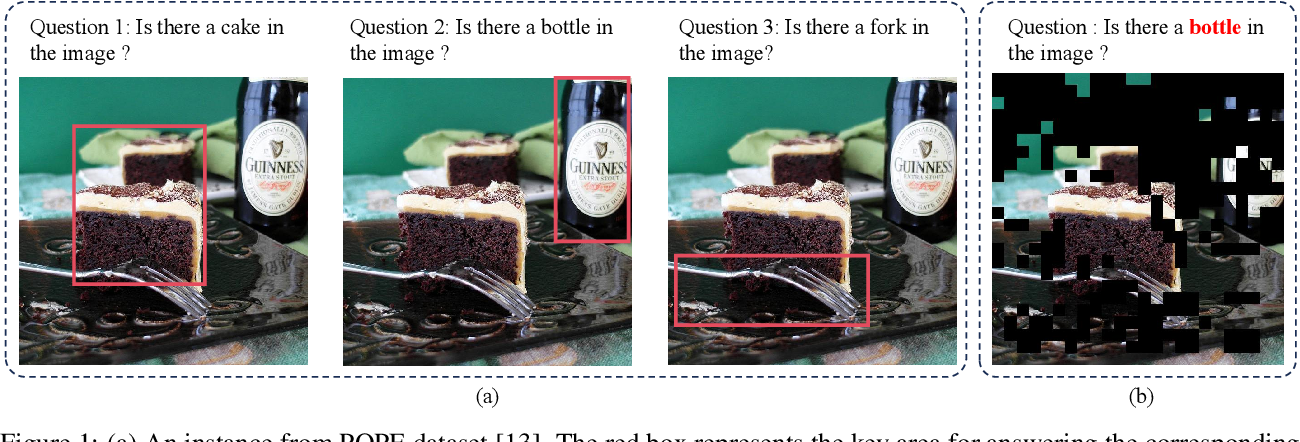

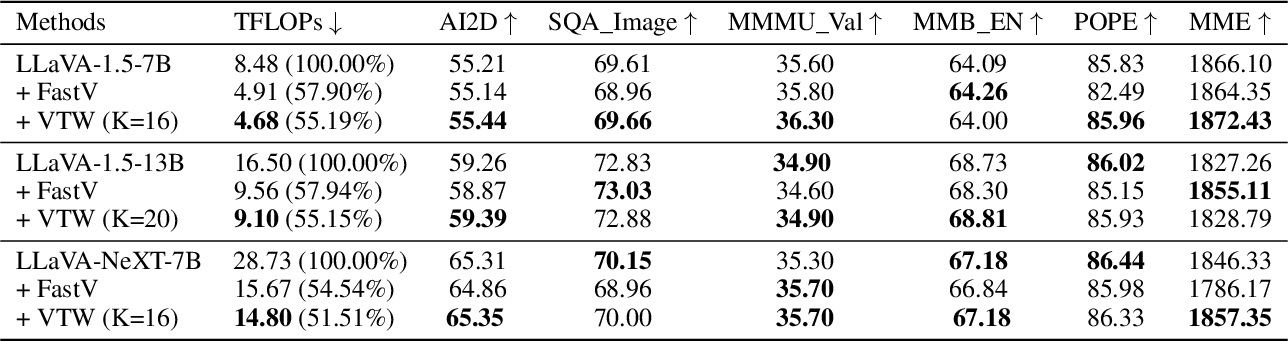

Boosting Multimodal Large Language Models With Visual Tokens Withdrawal For Rapid Inference
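The title above names visual token withdrawal as a way to speed up MLLM inference, but the text does not describe the mechanism. The sketch below assumes one plausible reading: at some intermediate layer, all visual tokens are removed from the sequence, so only text tokens pass through the remaining layers. Everything here is a hypothetical toy: `Token`, `toy_layer`, the token counts, and the withdrawal layer are illustrative, and "cost" simply counts token-layer evaluations as a rough proxy for compute.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Token:
    is_visual: bool  # True for image-derived tokens
    value: float     # toy stand-in for a hidden-state vector


Layer = Callable[[List[Token]], List[Token]]


def toy_layer(tokens: List[Token]) -> List[Token]:
    """Hypothetical layer: each token mixes in the mean of all current tokens (a crude stand-in for attention)."""
    if not tokens:
        return []
    mean = sum(t.value for t in tokens) / len(tokens)
    return [Token(t.is_visual, t.value + mean) for t in tokens]


def forward_with_withdrawal(tokens: List[Token], layers: List[Layer], withdraw_layer: int) -> Tuple[List[Token], int]:
    """Run `layers` in order, dropping every visual token just before layer index `withdraw_layer`.

    Returns the final tokens and the number of token-layer evaluations performed.
    Passing withdraw_layer >= len(layers) disables withdrawal (the full-cost baseline).
    """
    hidden = list(tokens)
    cost = 0
    for i, layer in enumerate(layers):
        if i == withdraw_layer:
            # Withdraw visual tokens: only text tokens take part in the remaining layers.
            hidden = [t for t in hidden if not t.is_visual]
        cost += len(hidden)
        hidden = layer(hidden)
    return hidden, cost


if __name__ == "__main__":
    # Hypothetical sizes: 576 visual tokens, 32 text tokens, a 32-layer model, withdrawal at layer 16.
    tokens = [Token(True, 1.0) for _ in range(576)] + [Token(False, 1.0) for _ in range(32)]
    layers = [toy_layer] * 32
    _, cost_withdrawn = forward_with_withdrawal(tokens, layers, withdraw_layer=16)
    _, cost_full = forward_with_withdrawal(tokens, layers, withdraw_layer=len(layers))
    print(cost_withdrawn, cost_full)  # prints: 10240 19456
```

Because visual tokens often far outnumber text tokens, removing them halfway through roughly halves the work in this toy setting. Whether quality is preserved depends on the real model and on where the withdrawal layer is placed, which this sketch does not attempt to model.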

