Figure 2 from Large Language Models as Evaluators for Recommendation Explanations (Semantic Scholar)

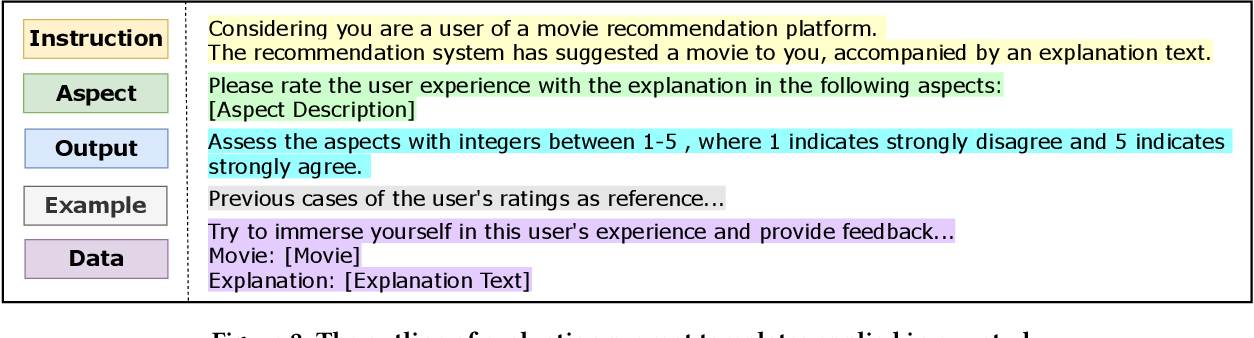

Figure 2: The outline of the evaluation prompt templates applied in our study ("Large Language Models as Evaluators for Recommendation Explanations"). In this paper, we investigate whether LLMs can serve as evaluators of recommendation explanations. To answer this question, we use real user feedback on explanations from previous work and additionally collect third-party annotations and LLM evaluations.

Compared to the basic explanations readily available through systems like ChatGPT, our methodology delves deeper into classifying reviews across various dimensions (such as sentiment, emotion, and the topics addressed) to produce more comprehensive explanations for user review classifications. One study proposes a user-centric evaluation framework for conversational recommender systems (CRSs) based on large language models (LLMs), the Conversational Recommendation Evaluator (CORE), which aligns well with human evaluation on most of its 12 factors and on the overall assessment. CRSs integrate both recommendation and dialogue tasks, which makes their evaluation uniquely challenging. Existing approaches…

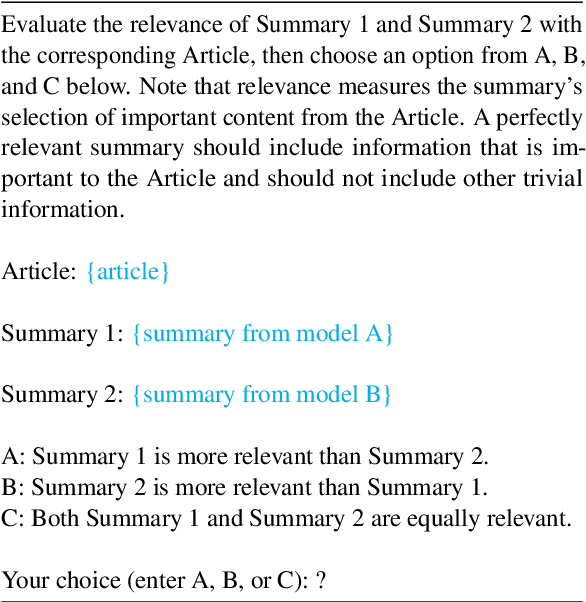

Table 3 from Are Large Language Models Good Evaluators for Abstractive Summarization? (Semantic Scholar)

This literature review explores the intersection of recommender systems, explanations, and large language models. Applied to propane dehydrogenation catalysts, our method demonstrated two things: first, generating comprehensive reviews from 343 articles spanning 35 topics; and second, evaluating data-mining capabilities by using 1,041 articles for experimental catalyst property analysis. To address this research gap and advance the development of user-centric conversational recommender systems, this study proposes an automated LLM-based CRS evaluation framework, building upon existing research in human-computer interaction and psychology.

Table 1: The 3-level Pearson correlation between the results given by each evaluator and the ground-truth labels given by users. Bold denotes the best result among all tested evaluators; underlining denotes the second-best result.
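The Table 1 caption measures agreement between an evaluator's scores and users' labels with Pearson's r, defined as cov(x, y) / (σ_x σ_y). A minimal sketch of that computation follows. The exact definition of the three correlation levels is not given here, so `grouped_pearson` (averaging per-group correlations, e.g. per user) is only one assumed way to measure correlation at a finer level than the whole dataset. The function names and the record fields `evaluator`, `user`, and `user_id` are hypothetical.

```python
import math
from collections import defaultdict


def pearson(xs, ys):
    """Pearson correlation coefficient r between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return float("nan")  # r is undefined when either side is constant
    return cov / math.sqrt(var_x * var_y)


def grouped_pearson(records, key):
    """Hypothetical finer-level metric: mean of per-group Pearson r.

    Groups with fewer than two items or constant scores are skipped.
    """
    groups = defaultdict(lambda: ([], []))
    for rec in records:
        xs, ys = groups[rec[key]]
        xs.append(rec["evaluator"])  # score assigned by the evaluator (e.g. an LLM)
        ys.append(rec["user"])       # ground-truth label given by the user
    rs = []
    for xs, ys in groups.values():
        if len(xs) >= 2:
            r = pearson(xs, ys)
            if not math.isnan(r):
                rs.append(r)
    return sum(rs) / len(rs) if rs else float("nan")


# Toy data (invented for illustration only, not from any study).
records = [
    {"user_id": "u1", "evaluator": 1, "user": 2},
    {"user_id": "u1", "evaluator": 2, "user": 4},
    {"user_id": "u1", "evaluator": 3, "user": 6},
    {"user_id": "u2", "evaluator": 1, "user": 3},
    {"user_id": "u2", "evaluator": 2, "user": 2},
    {"user_id": "u2", "evaluator": 3, "user": 1},
]

overall = pearson([r["evaluator"] for r in records], [r["user"] for r in records])
per_user = grouped_pearson(records, "user_id")
print(overall)   # 0.25
print(per_user)  # 0.0 (u1 has r = 1.0, u2 has r = -1.0)
```

The toy data shows why the level matters: the same scores give a weak positive correlation over the whole dataset but a mean per-user correlation of zero.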
