Generative Pre-Trained Transformer

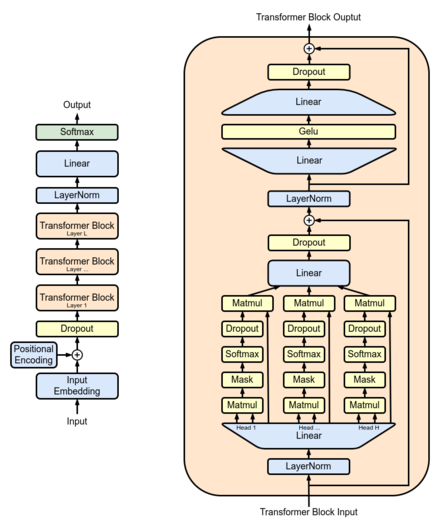

The generative pre-trained transformer (GPT) is a model developed by OpenAI to understand and generate human-like text. GPT has revolutionized how machines interact with human language, making more meaningful communication possible between humans and computers. GPTs are a family of large language models (LLMs) based on the transformer deep learning architecture. These foundation models power ChatGPT and other generative AI applications capable of simulating human-created output.

Generative pre-trained transformers, commonly known as GPT, are a family of neural network models that use the transformer architecture and are a key advancement in artificial intelligence (AI), powering generative AI applications such as ChatGPT. The original GPT work demonstrated that large gains on language understanding tasks can be realized by generative pre-training of a language model on a diverse corpus of unlabeled text, followed by discriminative fine-tuning on each specific task. The same recipe has been carried into other domains. One line of work introduces the graph generative pre-trained transformer (G2PT), an auto-regressive model that learns graph structures via next-token prediction over a sequence representation of the graph. Another explores the use of GPT for natural language design concept generation, with experiments that apply GPT-2 and GPT-3 to different kinds of creative reasoning in design tasks.
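Next-token prediction and auto-regressive generation can be illustrated without a neural network at all. The sketch below is not GPT: it swaps the transformer for a toy bigram counter, and the corpus and the function names `train_bigram` and `generate` are invented for illustration. What it shares with GPT is the loop itself: predict the next token from the context, append it, and repeat.

```python
from collections import Counter, defaultdict


def train_bigram(tokens):
    """Count how often each token follows each other token."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def generate(counts, start, max_new_tokens):
    """Greedy auto-regressive decoding: each prediction becomes new context."""
    out = [start]
    for _ in range(max_new_tokens):
        followers = counts.get(out[-1])
        if not followers:
            break  # the last token was never followed by anything in training
        # Pick the most frequent follower; ties go to the one seen first.
        out.append(followers.most_common(1)[0][0])
    return out


# Hypothetical toy corpus, used only for this illustration.
corpus = "the model reads text and the model predicts the next token".split()
counts = train_bigram(corpus)
print(generate(counts, "the", 3))
```

A real GPT replaces the lookup table with a transformer that conditions on the whole preceding sequence rather than only the last token, and it usually samples from the predicted distribution instead of always taking the most likely token.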

The emergence of the generative pre-trained transformer (GPT) marks a substantial advance in natural language processing (NLP), moving toward machines that can understand and communicate in a way very similar to human language. The approach has also been extended beyond text: GPDiT is a generative pre-trained autoregressive diffusion transformer that unifies the strengths of diffusion and autoregressive modeling for long-range video synthesis within a continuous latent space. At its core, a GPT model is a very large transformer-based language model trained on a massive dataset, with an architecture very similar to the decoder-only transformer.
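What makes a decoder-only transformer suitable for next-token prediction is the causal mask in self-attention: position i may attend only to positions 0 through i. The following is a minimal sketch of that masking step alone, in plain Python, assuming the raw attention scores have already been computed. The function name `causal_attention_weights` and the score values are hypothetical, and a real model would run this on tensors with learned query, key, and value projections.

```python
import math


def causal_attention_weights(scores):
    """Row-wise softmax over scores[i][j], restricted to positions j <= i."""
    weights = []
    for i, row in enumerate(scores):
        visible = row[: i + 1]            # future positions are masked out
        m = max(visible)                  # subtract the max for numerical stability
        exps = [math.exp(s - m) for s in visible]
        total = sum(exps)
        masked_tail = [0.0] * (len(row) - i - 1)
        weights.append([e / total for e in exps] + masked_tail)
    return weights


# Hypothetical raw scores for a three-token sequence.
scores = [
    [0.0, 1.0, 2.0],
    [1.0, 1.0, 5.0],
    [0.5, 0.5, 0.5],
]
w = causal_attention_weights(scores)
for row in w:
    print(row)
```

The large score of 5.0 in the second row has no effect on the result, because it belongs to a future position and is masked before the softmax is taken.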
