GPT-5 Model Architecture

GPT-5, the highly anticipated successor from OpenAI, heralds a paradigm shift in artificial intelligence. More than an incremental update, it is expected to redefine the boundaries of AI capabilities in reasoning, multimodal interaction, and autonomy. GPT-5 introduces a unified model architecture that combines reasoning and multimodal capabilities in a single framework for a seamless user experience.

GPT-5 appears to build on GPT-4's core architecture with targeted upgrades and a few additions. The breakthroughs may not always be obvious in a single prompt, but they may unlock new capabilities at the system and agent level over time. GPT-5 moves away from a monolithic structure toward a modular, flexible, and scalable form of intelligence that is better suited to complex, real-world tasks.

- Official timeline: Sam Altman has said that GPT-5 is slated for release at some point in summer 2025.
- Recent updates: in April 2025, Altman announced delays, stating they would “release o3 and o4-mini after all, probably in a couple of weeks, and then do GPT-5 in a few months.”
- Expected window: most sources point to July 2025 as the likely release month, though this…

On training hardware, one quoted account reads: “From our conversation, GPT-5 is being trained on about 25k GPUs, mostly A100s, and it takes multiple months; that’s about $225M of NVIDIA hardware, but importantly this is not the only use, and many of the same GPUs were used to train GPT-3 and GPT-4….”
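The hardware figures quoted above can be sanity-checked with simple arithmetic. The sketch below takes the two quoted numbers (about 25k GPUs and about $225M of hardware) and derives the implied average cost per GPU. The 90-day training duration is purely an illustrative assumption standing in for "multiple months"; the source gives no exact figure, and the function names are hypothetical.

```python
# Back-of-the-envelope check of the quoted hardware figures.
# Only GPU_COUNT and HARDWARE_COST_USD come from the quote; the rest is arithmetic.

GPU_COUNT = 25_000               # "about 25k GPUs" (quoted)
HARDWARE_COST_USD = 225_000_000  # "about $225M of NVIDIA hardware" (quoted)


def implied_cost_per_gpu(total_cost_usd: float, gpu_count: int) -> float:
    """Return the average hardware cost per GPU implied by the totals."""
    if gpu_count <= 0:
        raise ValueError("gpu_count must be positive")
    return total_cost_usd / gpu_count


def gpu_hours(gpu_count: int, training_days: float) -> float:
    """Return total GPU-hours, assuming every GPU is busy for the whole run."""
    return gpu_count * training_days * 24


if __name__ == "__main__":
    per_gpu = implied_cost_per_gpu(HARDWARE_COST_USD, GPU_COUNT)
    print(f"Implied cost per GPU: ${per_gpu:,.0f}")

    # ASSUMPTION: 90 days is a placeholder for "multiple months".
    assumed_days = 90
    total_hours = gpu_hours(GPU_COUNT, assumed_days)
    print(f"GPU-hours at {assumed_days} days: {total_hours:,.0f}")
```

Under these numbers the implied cost works out to $9,000 per GPU. Because the quote notes that the same GPUs were also used for earlier models, this is an average hardware figure, not the cost attributable to GPT-5 alone.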

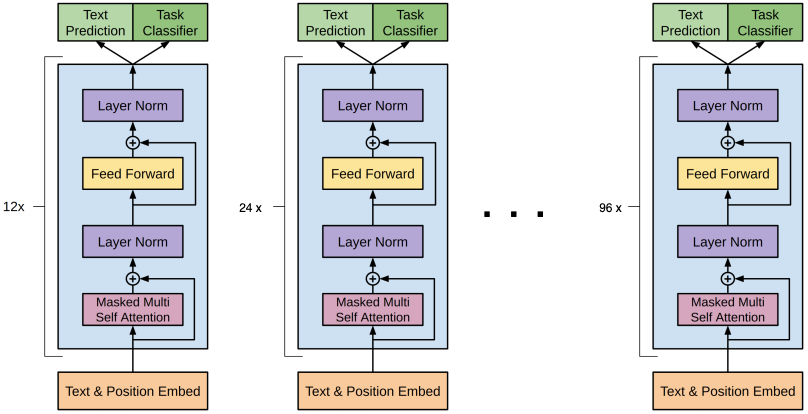

In 2018, OpenAI introduced generative pre-training with GPT-1, using a transformer architecture to improve natural language understanding. The model, detailed in the paper "Improving Language Understanding by Generative Pre-Training," served as a proof of concept rather than a public product.

GPT-5, OpenAI’s anticipated next-generation language model expected in mid-2025, is framed as a paradigm shift toward artificial general intelligence (AGI). At the heart of GPT-5 is the transformer architecture, which is well suited to sequential data such as text. This architecture has been the cornerstone of the GPT series, enabling the models to understand and generate human-like text.

The reported size of GPT-5 is also noteworthy. It is said to be approximately 10 times larger than GPT-3, with around 1.8 trillion parameters across 120 layers. In deep learning, the number of parameters significantly influences a model’s capabilities.
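As a rough check on the size claim above, the sketch below compares the cited 1.8 trillion parameters with GPT-3's published 175 billion, and derives two illustrative quantities: the average number of parameters per layer and the memory needed just to store the weights. The 2 bytes per parameter (16-bit weights) is an assumption, not something the article states, and the per-layer average ignores embeddings and any non-uniform layer sizes.

```python
# Rough arithmetic on the parameter figures cited in the text.

GPT3_PARAMS = 175e9      # GPT-3's published parameter count
CLAIMED_PARAMS = 1.8e12  # figure cited in the text
CLAIMED_LAYERS = 120     # figure cited in the text


def size_ratio(claimed: float, baseline: float) -> float:
    """How many times larger the claimed model is than the baseline."""
    return claimed / baseline


def params_per_layer(total_params: float, layers: int) -> float:
    """Crude average; treats all parameters as evenly spread over layers."""
    return total_params / layers


def weight_memory_tb(total_params: float, bytes_per_param: int = 2) -> float:
    """Terabytes needed to hold the weights alone.

    ASSUMPTION: 2 bytes per parameter (16-bit weights) by default.
    """
    return total_params * bytes_per_param / 1e12


if __name__ == "__main__":
    print(f"Ratio to GPT-3: {size_ratio(CLAIMED_PARAMS, GPT3_PARAMS):.1f}x")
    avg = params_per_layer(CLAIMED_PARAMS, CLAIMED_LAYERS)
    print(f"Average parameters per layer: {avg / 1e9:.0f}B")
    print(f"Weights at 16-bit precision: {weight_memory_tb(CLAIMED_PARAMS):.1f} TB")
```

The ratio comes out at roughly 10.3, which is consistent with the "approximately 10 times larger" wording, provided the baseline is GPT-3's 175 billion parameters.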
