How Does GPU Acceleration Work With Cloud Computing? | Next Lvl Programming

In this informative video, we'll explore the fascinating world of GPU acceleration in cloud computing. You'll learn how graphics processing units (GPUs) are used to enhance performance for … How does accelerated computing work? Accelerated computing uses a combination of hardware, software, and networking technologies to help modern enterprises power their most advanced applications. Hardware components that are critical to accelerators include GPUs, ASICs, and FPGAs.

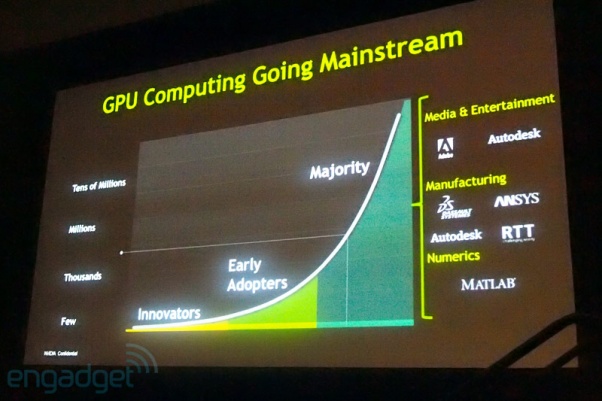

In this paper we provide an analysis of GPU performance improvements, memory management techniques, and cloud service scalability, offering insights into the potential of GPU acceleration in cloud computing infrastructures.

With the unified NVIDIA accelerated computing platform available on any cloud, developers gain access to a rich ecosystem that provides consistent performance and scalability, enabling them to develop and deploy applications anywhere. To harness GPU power, developers use APIs such as CUDA (for NVIDIA GPUs) or OpenCL (for various GPU architectures). These APIs simplify programming, letting applications use GPU processing power without complex hardware-level integration. Accelerated computing relies on application programming interfaces (APIs) and programming models such as CUDA and OpenCL to interface with both software and hardware accelerators, optimizing data flow for better performance, power efficiency, cost-effectiveness, and accuracy.
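To make the CUDA programming model a little more concrete, here is a minimal pure-Python sketch of how a kernel launch maps threads onto array elements. It is not CUDA code and runs entirely on the CPU. In CUDA C++, each thread computes its element index from the built-in variables `blockIdx.x`, `blockDim.x`, and `threadIdx.x`; the helper names `saxpy_kernel` and `launch` below are hypothetical stand-ins for a `__global__` function and the `<<<grid, block>>>` launch syntax, and the nested loops run sequentially where a GPU would run the threads in parallel.

```python
import math


def saxpy_kernel(block_idx, block_dim, thread_idx, a, x, y, out):
    """Body of one 'thread'; mirrors what a CUDA __global__ function would do."""
    # Same global-index formula a CUDA kernel computes from its built-in variables
    i = block_idx * block_dim + thread_idx
    # The last block may contain threads past the end of the array, so guard the access
    if i < len(x):
        out[i] = a * x[i] + y[i]


def launch(kernel, grid_dim, block_dim, *args):
    """Run every (block, thread) pair one after another; a GPU runs them concurrently."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


def saxpy(a, x, y, block_dim=256):
    """Compute a * x + y element-wise using the emulated grid of threads."""
    n = len(x)
    out = [0.0] * n
    # Enough blocks to cover all n elements (ceiling division)
    grid_dim = math.ceil(n / block_dim)
    launch(saxpy_kernel, grid_dim, block_dim, a, x, y, out)
    return out
```

For example, `saxpy(2.0, [1.0, 2.0, 3.0], [10.0, 20.0, 30.0], block_dim=2)` launches two blocks of two threads and returns `[12.0, 24.0, 36.0]`; the fourth thread (global index 3) is masked out by the bounds check, which is why real kernels carry the same `if (i < n)` guard.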

Accelerators are used in cloud computing servers, including tensor processing units (TPUs) in Google Cloud Platform [14] and Trainium and Inferentia chips in Amazon Web Services [15]. Many vendor-specific terms exist for devices in this category, and it is an emerging technology without a dominant design. Graphics processing units designed by companies such as NVIDIA and AMD often include AI …

GPU acceleration works by offloading specific computing tasks from the CPU to the GPU. Tasks that can be parallelized are sent to the GPU, which uses its many cores to process them simultaneously. To exploit this parallelism, programmers use parallel programming models such as CUDA (Compute Unified Device Architecture) for NVIDIA GPUs or OpenCL (Open Computing Language), which is supported by various GPU vendors.
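The offload pattern described above (send parallelizable work to the device, process independent pieces at the same time, bring the results back) can be sketched as a CPU-only analogy. This is an illustration under stated assumptions, not GPU code: the function name `offload_map` and the chunking scheme are hypothetical, the "transfers" are plain list copies, and Python threads stand in for GPU cores. Because of CPython's global interpreter lock, these threads will not actually speed up pure-Python arithmetic; a real GPU path would go through CUDA or OpenCL, or a library built on them.

```python
from concurrent.futures import ThreadPoolExecutor


def offload_map(func, host_data, workers=4, chunk_size=1024):
    """CPU-only analogy of offloading a data-parallel task to an accelerator."""
    # 1. "Transfer" the input to the device: here, just an independent copy
    device_in = list(host_data)
    # 2. Split into independent chunks; no chunk depends on another,
    #    which is what makes the task parallelizable
    chunks = [device_in[i:i + chunk_size] for i in range(0, len(device_in), chunk_size)]
    # 3. Process the chunks concurrently; Executor.map yields results in input order
    with ThreadPoolExecutor(max_workers=workers) as pool:
        device_out = pool.map(lambda chunk: [func(v) for v in chunk], chunks)
        # 4. "Transfer" the results back to the host and reassemble them
        host_out = [v for chunk in device_out for v in chunk]
    return host_out
```

For instance, `offload_map(lambda v: v * v, range(10), chunk_size=3)` returns the squares 0 through 81 in their original order. The design choice that matters is step 2: only work that splits into independent pieces benefits from this kind of offload, and the copy steps are real costs on actual hardware.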


3.3 Challenges in Cloud LLM Deployments

Lack of server-level control knobs. On CPU servers, features like IPMI [26] and RAPL [55] provide a fast and reliable way to control full-server power by setting a single cap on the CPU. However, there are no unified power controls available for GPU servers. Cloud operators must separately tweak …
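The point about missing server-level knobs can be illustrated by the bookkeeping an operator is left with: a single server-level power budget has to be turned into a separate limit for every GPU. The even-split policy, the assumption of a constant non-GPU draw, and the function name `per_gpu_power_caps` are hypothetical and chosen only for illustration; they are not the method of the work quoted above. On NVIDIA hardware, each resulting cap could then be applied per device, for example with `nvidia-smi -i <index> -pl <watts>`.

```python
def per_gpu_power_caps(server_budget_w, non_gpu_draw_w, num_gpus, gpu_min_w, gpu_max_w):
    """Split one server-level power budget into per-GPU caps (hypothetical policy).

    A CPU server can be governed by a single cap; without a unified control on a
    GPU server, the same budget has to be translated into one limit per GPU.
    """
    if num_gpus <= 0:
        raise ValueError("num_gpus must be positive")
    # Power left for the GPUs after the (assumed constant) CPU, memory, fans, etc.
    gpu_budget_w = server_budget_w - non_gpu_draw_w
    # Even split across all GPUs
    per_gpu_w = gpu_budget_w / num_gpus
    # A device cannot be limited below its minimum supported power limit
    if per_gpu_w < gpu_min_w:
        raise ValueError("budget too low: caps would fall below the GPU minimum limit")
    # Never request more than the device's maximum supported limit
    return [min(per_gpu_w, gpu_max_w)] * num_gpus
```

With a hypothetical 6000 W budget, 1200 W of non-GPU draw, and 8 GPUs whose limits range from 100 W to 700 W, `per_gpu_power_caps(6000, 1200, 8, 100, 700)` returns eight caps of 600.0 W. Each of those values still has to be set on its own device, which is exactly the per-GPU tweaking the paragraph describes.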

