How to Evaluate Your LLM Applications (UpTrain AI)

Evaluating LLM-generated responses varies by application. For marketing messages, we consider creativity, brand tone, appropriateness, and conciseness. Customer support chatbots must be assessed for hallucinations, politeness, and response completeness. Code generation apps focus on syntax accuracy, complexity, and code quality.

UpTrain offers enterprise-grade tooling to easily evaluate, experiment with, monitor, and test LLM applications, and it can be hosted on your own secure cloud environment.

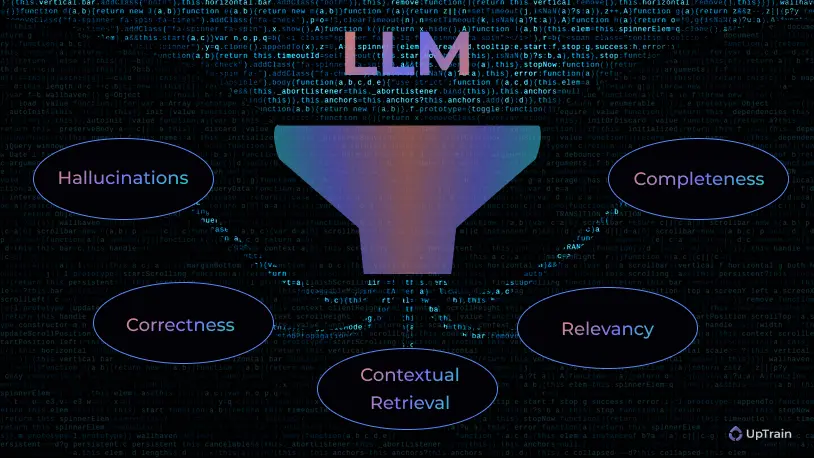

The UpTrain dashboard is a web-based interface that runs on your local machine. You can use the dashboard to evaluate your LLM applications, view the results, and perform root cause analysis. It supports 20 pre-configured evaluations, such as response completeness, factual accuracy, and context conciseness.

Evaluating LLMs through an ethical lens helps ensure that they operate transparently, uphold fairness, and reduce the potential for harm, which promotes trust among users and supports ethical AI development. One real-world application of LLM evaluation is customer support, where LLMs are increasingly used to automate support by quickly answering customers.

UpTrain provides a range of pre-configured evaluations (evals) and helpful operators for creating custom checks. With these tools, you can assess aspects such as the relevance of context.
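To make the idea of application-specific checks more concrete, here is a minimal sketch in plain Python. It does not use UpTrain's API: the check functions, the `CHECKS_BY_APP` registry, the keyword list, and the 50-word threshold are all hypothetical, and the heuristics are deliberately crude stand-ins for real evaluations.

```python
from typing import Callable, Dict

# A check takes a response string and returns a score in [0.0, 1.0].
# All names and thresholds below are illustrative assumptions.
Check = Callable[[str], float]


def conciseness(response: str, max_words: int = 50) -> float:
    """Return 1.0 at or under max_words, decaying as the response grows."""
    n_words = len(response.split())
    if n_words <= max_words:
        return 1.0
    return max_words / n_words


def politeness(response: str) -> float:
    """Crude keyword heuristic: 1.0 if any polite marker appears, else 0.0."""
    markers = ("please", "thank", "sorry", "happy to help")
    text = response.lower()
    return 1.0 if any(marker in text for marker in markers) else 0.0


def python_syntax_ok(response: str) -> float:
    """Return 1.0 if the response compiles as Python source, else 0.0."""
    try:
        compile(response, "<llm-response>", "exec")
        return 1.0
    except SyntaxError:
        return 0.0


# Hypothetical registry: which checks apply to which kind of application.
CHECKS_BY_APP: Dict[str, Dict[str, Check]] = {
    "marketing": {"conciseness": conciseness},
    "customer_support": {"politeness": politeness, "conciseness": conciseness},
    "code_generation": {"syntax": python_syntax_ok},
}


def evaluate(app_type: str, response: str) -> Dict[str, float]:
    """Run every check registered for app_type and collect the scores."""
    checks = CHECKS_BY_APP[app_type]
    return {name: check(response) for name, check in checks.items()}
```

For example, `evaluate("code_generation", "x = 1 + 2")` returns `{"syntax": 1.0}`, while a response with a syntax error scores `0.0`. Qualities such as hallucination or brand tone cannot be captured by heuristics like these and would typically need a model-graded evaluation instead.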

UpTrain's 21 evaluation metrics help assess different aspects of LLM responses. These metrics cover everything from factual accuracy to conversation satisfaction, helping ensure high-quality AI interactions.

However, it's crucial to grasp why assessing your LLM application's performance matters. You might wonder, "Is this step really necessary, or can the model operate without it?" In reality, LLM evaluations are the linchpin to unlocking your language model application's full potential.

What is the UpTrain dashboard? It is a self-hosted, web-based interface for evaluating your LLM applications, and you don't need to write any code to use it.

So in this post, I'll walk you through how you can systematically evaluate your LLM applications. In the following sections, we'll delve into the nuances of LLM evaluation.

