Let's Build GPT: From Scratch, in Code, Spelled Out

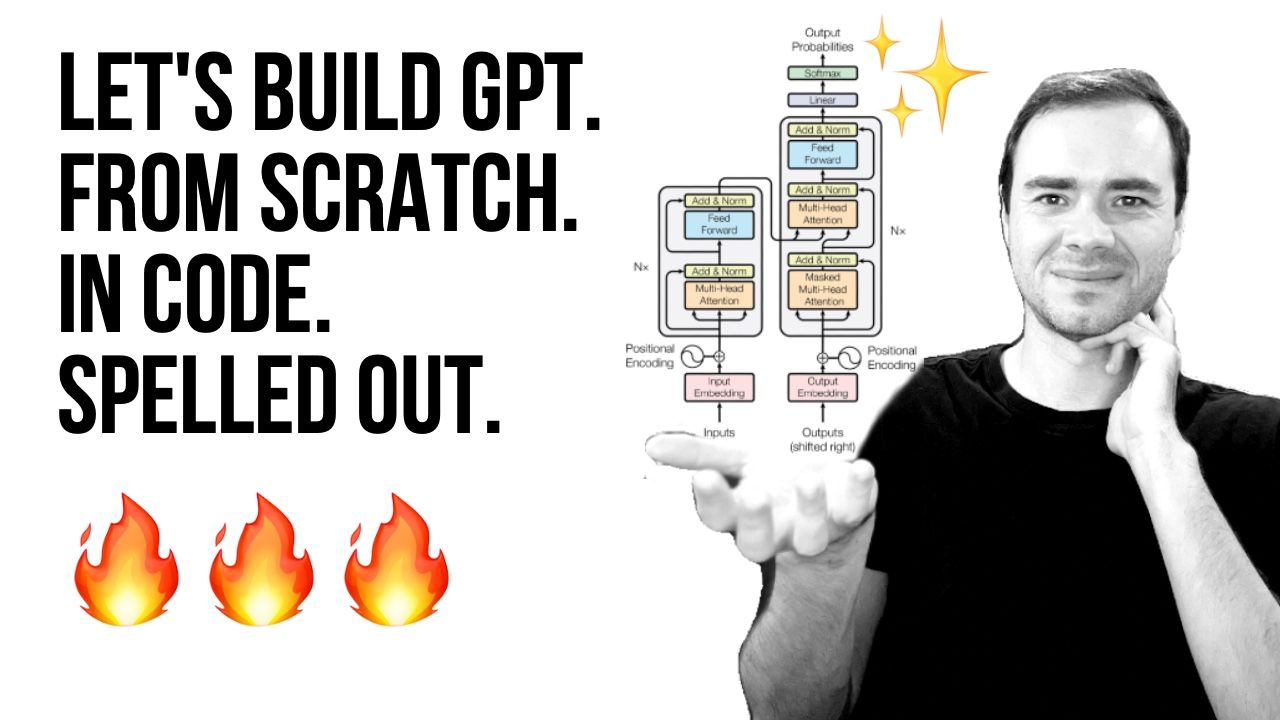

We build a Generatively Pretrained Transformer (GPT), following the paper "Attention Is All You Need" and OpenAI's GPT-2 / GPT-3. We also talk about connections to ChatGPT. Link to the source material: youtu.be/kCc8FmEb1nY. GitHub: github.com/karpathy/ng-video-lecture (the video lecture code).
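
The core operation from "Attention Is All You Need" is scaled dot-product attention, softmax(QK^T / sqrt(d_k)) V. A GPT uses it in causal (masked) form, so each position can only look at itself and earlier positions. The following is a minimal, dependency-free sketch of a single causal attention head; the function names are illustrative assumptions, and a real implementation (as in the lecture) would use batched PyTorch tensors instead of Python lists.

```python
import math

def softmax(xs):
    """Numerically stable softmax; entries equal to -inf receive weight 0."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def causal_attention(q, k, v):
    """Single-head causal scaled dot-product attention (illustrative sketch).

    q, k, v: lists of T vectors (lists of floats); q and k share dimension d_k.
    Position t attends only to positions 0..t, as in a GPT decoder.
    """
    T = len(q)
    d_k = len(k[0])
    out = []
    for t in range(T):
        # Scaled dot-product scores; future positions (s > t) are masked with -inf.
        scores = [
            sum(qi * ki for qi, ki in zip(q[t], k[s])) / math.sqrt(d_k) if s <= t else float("-inf")
            for s in range(T)
        ]
        weights = softmax(scores)
        # Output is the attention-weighted sum of the value vectors.
        out.append([sum(w * v[s][j] for s, w in enumerate(weights)) for j in range(len(v[0]))])
    return out

# With all-zero queries and keys, every visible position gets equal weight,
# so each output row is the running mean of the values seen so far.
values = [[1.0], [3.0], [5.0]]
zeros = [[0.0], [0.0], [0.0]]
print(causal_attention(zeros, zeros, values))
```

The uniform-weight case shows the intuition behind self-attention as a learned, data-dependent weighted average over past tokens: once queries and keys are non-zero, the weights stop being uniform and each token decides how much to draw from each earlier token.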

Let's build GPT: from scratch, in code, spelled out. This is a step-by-step implementation of a Generatively Pretrained Transformer (GPT) model that demonstrates the core architecture behind systems like ChatGPT. The model works at the character level, unlike ChatGPT, which operates on subword tokens, and the focus is on a simple implementation for training Transformers. The code for this project, found in the GitHub repository nanoGPT, shows how to build a Transformer from scratch using basic Python, calculus, and statistics.

This project is heavily inspired by Andrej Karpathy's fantastic work, where he walks through building a GPT model in code, step by step. His ability to break down complex topics into intuitive explanations is incredible, and I highly recommend watching his video for a deeper understanding.

Small size and efficiency: nanoGPT is a compact GPT implementation with fewer parameters, which makes it more practical in resource-constrained environments. Goals: understand how LLMs are implemented, and learn to use PyTorch and transformers.
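
Character-level processing means the vocabulary is simply the set of distinct characters in the training text, and each character maps to one integer id. Below is a minimal sketch of such a tokenizer; the helper name `build_char_tokenizer` and the `stoi`/`itos` lookup tables are illustrative choices, not a specific library API.

```python
def build_char_tokenizer(text):
    """Character-level tokenizer: every distinct character in the corpus is one token."""
    chars = sorted(set(text))
    stoi = {ch: i for i, ch in enumerate(chars)}  # character -> integer id
    itos = {i: ch for ch, i in stoi.items()}      # integer id -> character

    def encode(s):
        return [stoi[c] for c in s]

    def decode(ids):
        return "".join(itos[i] for i in ids)

    return chars, encode, decode

chars, encode, decode = build_char_tokenizer("hello world")
print(len(chars))               # 8 distinct characters, including the space
print(encode("hello"))          # [3, 2, 4, 4, 5]
print(decode(encode("hello")))  # hello
```

The trade-off is sequence length versus vocabulary size: a character vocabulary stays tiny, but every word costs several tokens, whereas subword tokenizers such as GPT-2's BPE (a vocabulary of 50,257 tokens) produce much shorter sequences from a much larger vocabulary.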

"Let's build GPT: from scratch, in code, spelled out" is a course recorded by Andrej Karpathy. In about two hours it builds a working GPT from scratch; in the spirit of "talk is cheap, show me the code", it is quite hardcore.

In a GPT (Generative Pretrained Transformer), the Transformer does the heavy lifting under the hood. The training data is a small subset of Shakespeare's works. nanoGPT is described as "the simplest, fastest repository for training/finetuning medium-sized GPTs"; it is a rewrite of minGPT that prioritizes teeth over education. A suggested next step is to follow the three-step recipe from OpenAI's ChatGPT blog post to finetune the model into an actual assistant instead of just a "document completer", which otherwise happily, e.g., responds to questions with more questions.
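
Once the Shakespeare text is encoded into integer ids, training a next-character model comes down to splitting the data and sampling random chunks whose targets are the inputs shifted by one position. The following is a minimal sketch, assuming a 90/10 train/validation split and hypothetical helper names `split_data` and `get_batch`; a PyTorch version would stack these lists into tensors.

```python
import random

def split_data(ids, train_frac=0.9):
    """Split a token-id sequence into a training prefix and a held-out validation suffix."""
    n = int(train_frac * len(ids))
    return ids[:n], ids[n:]

def get_batch(data, batch_size, block_size, rng=random):
    """Sample random contiguous chunks; targets are the inputs shifted one position left.

    Requires len(data) > block_size.
    """
    xs, ys = [], []
    for _ in range(batch_size):
        i = rng.randrange(len(data) - block_size)  # start index so the target chunk fits
        xs.append(data[i : i + block_size])
        ys.append(data[i + 1 : i + block_size + 1])
    return xs, ys

# Stand-in for an encoded corpus: consecutive ids make the one-step shift easy to see.
data = list(range(100))
train, val = split_data(data)
x, y = get_batch(train, batch_size=4, block_size=8, rng=random.Random(0))
print(x[0])
print(y[0])  # each target id is the input id one position later
```

Each chunk of `block_size` tokens packs `block_size` training examples: for every position t, the model predicts `y[b][t]` from `x[b][:t + 1]`, which is exactly the context a causal attention mask allows.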
