LLM Inference Performance Benchmarking, Part 1

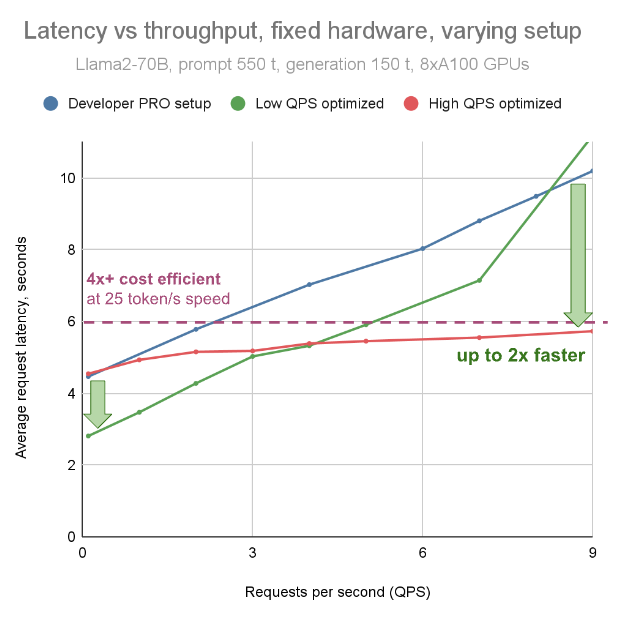

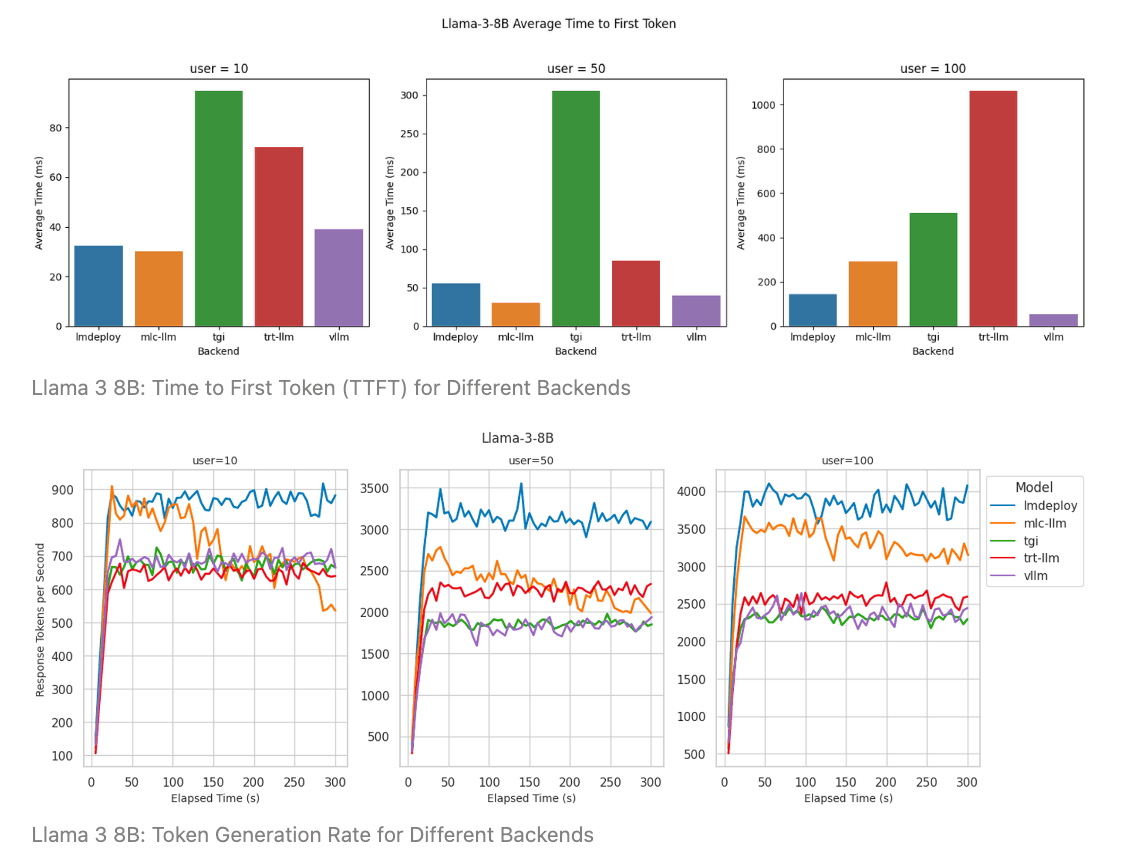

We're releasing the benchmark suite we've been using at Fireworks to evaluate the performance tradeoffs described in this post. We hope to contribute to a rich ecosystem of knowledge and tools (e.g., those published by Databricks and Anyscale) that help customers optimize LLMs for their use cases. Benchmarking LLM performance across diverse hardware platforms is crucial to understanding their scalability and throughput characteristics. We introduce LLM Inference Bench, a comprehensive benchmarking suite for evaluating the inference performance of LLMs on different hardware.
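Whatever the hardware, a benchmark run like this usually reduces to a few per-request latency metrics. The sketch below assumes you already record when each request was sent and when each streamed token arrived; the function and field names are hypothetical, not part of any particular tool. The definitions follow one common convention (time to first token, mean gap between tokens, tokens per second over the whole request); tools such as GenAI-Perf may define them slightly differently.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class RequestMetrics:
    ttft_s: float               # time to first token, in seconds
    mean_itl_s: float           # mean inter-token latency (gap between consecutive tokens)
    output_tokens_per_s: float  # tokens generated / end-to-end request time


def compute_metrics(request_start: float, token_times: Sequence[float]) -> RequestMetrics:
    """Hypothetical helper: derive latency metrics from token arrival timestamps."""
    if not token_times:
        raise ValueError("need at least one token timestamp")
    ttft = token_times[0] - request_start
    # Gaps between consecutive token arrivals; empty if only one token was produced.
    gaps = [later - earlier for earlier, later in zip(token_times, token_times[1:])]
    mean_itl = sum(gaps) / len(gaps) if gaps else 0.0
    # End-to-end time includes the time to first token.
    total = token_times[-1] - request_start
    tokens_per_s = len(token_times) / total if total > 0 else float("inf")
    return RequestMetrics(ttft, mean_itl, tokens_per_s)


if __name__ == "__main__":
    # Illustrative timestamps only, not measured data.
    print(compute_metrics(0.0, [0.2, 0.25, 0.30, 0.35]))
```

Keeping the raw timestamps and deriving metrics afterward makes it easy to recompute results under a different metric definition without rerunning the benchmark.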

LLM Inference Endpoint Performance Benchmarking Tool (Ben's Bites)

We also give a step-by-step guide to using our preferred tool, GenAI-Perf, to benchmark your LLM applications. Note that performance benchmarking and load testing are two distinct approaches to evaluating the deployment of a large language model. See "LLM Inference Benchmarking Guide: NVIDIA GenAI-Perf and NIM" for tips on using GenAI-Perf and NVIDIA NIM for your applications.

Using this information, rule out the unqualified part of the performance chart (in this example, any data point to the right of the 250 ms line). Of the remaining data points that satisfy the …

LLM Inference Bench is a comprehensive benchmarking suite designed to provide detailed performance evaluations of LLMs across multiple AI accelerators, contributing to the broader understanding of LLM performance optimization and hardware selection in the rapidly evolving field of AI acceleration.

How to optimize LLM inference performance: in this article, we focus specifically on LLM inference optimization techniques.
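The chart-filtering step mentioned earlier (discarding every data point to the right of the 250 ms latency line) can be sketched in a few lines. This assumes each point in a sweep records a latency on the chart's x-axis and an aggregate throughput; `SweepPoint` and `best_point` are hypothetical names. Because the original sentence is cut off, choosing the highest-throughput qualifying point is an assumption, not necessarily the guide's exact rule.

```python
from typing import NamedTuple, Optional, Sequence


class SweepPoint(NamedTuple):
    concurrency: int
    latency_ms: float      # latency metric on the chart's x-axis (assumed, e.g. time to first token)
    throughput_tps: float  # aggregate output tokens per second


def best_point(points: Sequence[SweepPoint], latency_limit_ms: float = 250.0) -> Optional[SweepPoint]:
    """Drop points beyond the latency limit, then (assumption) keep the highest-throughput one."""
    qualified = [p for p in points if p.latency_ms <= latency_limit_ms]
    if not qualified:
        return None  # no configuration meets the latency requirement
    return max(qualified, key=lambda p: p.throughput_tps)


if __name__ == "__main__":
    # Illustrative numbers only, not benchmark results.
    sweep = [
        SweepPoint(1, 80.0, 40.0),
        SweepPoint(8, 180.0, 250.0),
        SweepPoint(16, 240.0, 400.0),
        SweepPoint(32, 410.0, 520.0),  # right of the 250 ms line: ruled out
    ]
    print(best_point(sweep))
```

Returning `None` when nothing qualifies makes it explicit that the deployment cannot meet the latency target at any tested load, rather than silently picking the least-bad point.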

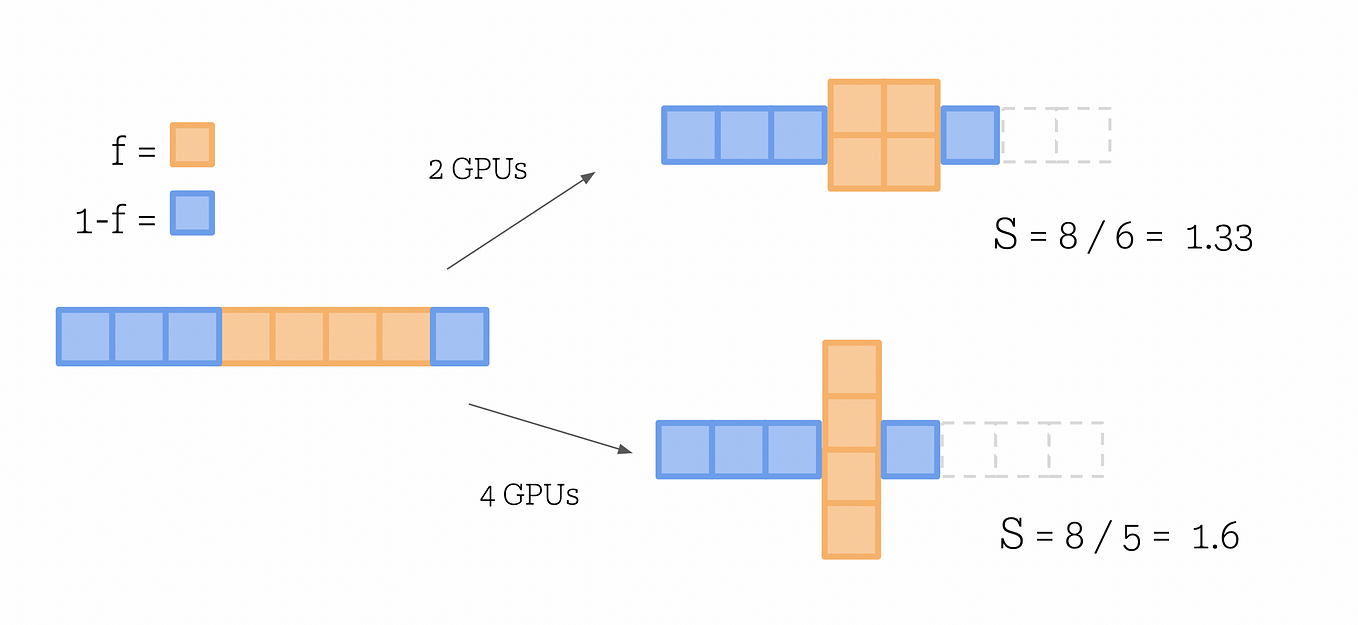

LLM Inference Performance Benchmarking, Part 1 (Fireworks AI, Medium)

Learn best practices for optimizing LLM inference performance on Databricks to improve the efficiency of your machine learning models.

This is a cheat sheet for running a simple LLM inference benchmark on consumer hardware using llama.cpp, a popular end-user inference engine, and its bundled llama-bench tool. Feel free to skip to the how-to section if you want.

Load testing, as distinct from performance benchmarking, simulates a large number of concurrent requests to a model to assess its ability to handle real-world traffic at scale. As AI continues to reshape industries, the performance of inference (the process of generating outputs from trained models) has become just as critical as model training itself.
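To make the load-testing idea concrete, here is a minimal sketch that fires many requests with bounded concurrency and reports latency percentiles. It uses a hypothetical `fake_model_call` coroutine that just sleeps, standing in for a real inference endpoint; swap in your own async client call. Latency is measured after a request acquires a concurrency slot, so it reflects service time and excludes client-side queueing.

```python
import asyncio
import random
import statistics
import time
from typing import Awaitable, Callable, Dict, List


async def fake_model_call(prompt: str) -> str:
    # Hypothetical stand-in for a real inference endpoint: sleep to mimic latency.
    await asyncio.sleep(random.uniform(0.01, 0.03))
    return prompt[::-1]


async def load_test(
    call: Callable[[str], Awaitable[str]], num_requests: int, concurrency: int
) -> List[float]:
    """Send num_requests calls, at most `concurrency` in flight, and return per-request latencies."""
    sem = asyncio.Semaphore(concurrency)
    latencies: List[float] = []

    async def one(i: int) -> None:
        async with sem:
            start = time.perf_counter()  # timed after acquiring a slot: excludes queueing
            await call(f"request {i}")
            latencies.append(time.perf_counter() - start)

    await asyncio.gather(*(one(i) for i in range(num_requests)))
    return latencies


def summarize(latencies: List[float]) -> Dict[str, float]:
    # "inclusive" keeps percentiles within the observed range; needs at least 2 samples.
    cuts = statistics.quantiles(latencies, n=100, method="inclusive")
    return {"p50": statistics.median(latencies), "p99": cuts[98], "max": max(latencies)}


if __name__ == "__main__":
    lats = asyncio.run(load_test(fake_model_call, num_requests=200, concurrency=32))
    print(summarize(lats))
```

Tail percentiles such as p99 matter more than averages here: under heavy concurrency a few slow requests can dominate user-perceived quality even when the median looks healthy.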
