Measuring LLM Metrics

LLM Evaluation Metrics for Labeled Data (Ben's Bites)

To thoroughly evaluate an LLM system, it is paramount to create an evaluation dataset for each component. Such datasets are also known as ground-truth or golden datasets. However, this approach comes with challenges. LLM applications are a recent and fast-evolving area of ML, model evaluation is not straightforward, and there is no unified approach to measuring LLM performance. Several metrics have been proposed in the literature for evaluating the performance of LLMs.
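For labeled (golden) data, two simple reference-based metrics are exact match and token-level F1. What follows is a minimal Python sketch, not any benchmark's official scorer. It assumes each golden-set row is a dict with hypothetical `prediction` and `reference` keys. Normalization is deliberately simplified: it lowercases, strips ASCII punctuation and collapses whitespace.

```python
import string
from collections import Counter


def normalize(text: str) -> str:
    """Lowercase, remove ASCII punctuation, and collapse whitespace."""
    text = text.lower().translate(str.maketrans("", "", string.punctuation))
    return " ".join(text.split())


def exact_match(prediction: str, reference: str) -> float:
    """Return 1.0 if the normalized strings are identical, else 0.0."""
    return float(normalize(prediction) == normalize(reference))


def token_f1(prediction: str, reference: str) -> float:
    """Harmonic mean of token-level precision and recall."""
    pred_tokens = normalize(prediction).split()
    ref_tokens = normalize(reference).split()
    if not pred_tokens or not ref_tokens:
        # Two empty answers count as a match; exactly one empty answer is a miss.
        return float(pred_tokens == ref_tokens)
    # Multiset intersection counts each shared token at most min(count) times.
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


def evaluate(golden_set: list[dict]) -> dict:
    """Average both metrics over rows with hypothetical "prediction"/"reference" keys."""
    if not golden_set:
        raise ValueError("golden_set is empty")
    n = len(golden_set)
    return {
        "exact_match": sum(exact_match(r["prediction"], r["reference"]) for r in golden_set) / n,
        "token_f1": sum(token_f1(r["prediction"], r["reference"]) for r in golden_set) / n,
    }


golden = [
    {"reference": "Paris", "prediction": "paris."},
    {"reference": "the Eiffel Tower", "prediction": "Eiffel Tower"},
]
print(evaluate(golden))
```

For this toy golden set, exact match is 0.5 because only the first row matches after normalization. Token F1 is about 0.9 because the second prediction still recovers two of the three reference tokens. This gap is why partial-credit metrics are often reported alongside exact match.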

Metrics (NVIDIA Docs)

Evaluating LLMs requires a comprehensive approach that employs a range of measures to assess different aspects of their performance. In this discussion, we explore key evaluation criteria for LLMs, including accuracy and performance, bias and fairness, and other important metrics.

In this article, we share the standard set of metrics that teams use. These metrics focus on estimating costs, assessing customer risk and quantifying the added user value. They can be computed directly for any feature that uses OpenAI models and logs their API responses.

Metrics for evaluating LLMs can be highly product-specific. This article will help you understand how to define the right metrics for your use case while also covering some standard metrics. It also walks through everything you need to know about LLM evaluation metrics, with code samples.
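The following sketch illustrates the cost metric by aggregating token usage from logged chat-completion responses. It assumes each log entry keeps the response's `model` field and its `usage` fields (`prompt_tokens` and `completion_tokens`, as returned in OpenAI Chat Completions responses). The model name `example-model` and the rates in `PRICES_PER_MILLION` are hypothetical placeholders, not real pricing.

```python
from dataclasses import dataclass

# Hypothetical USD prices per 1M tokens; substitute your provider's current rates.
PRICES_PER_MILLION = {
    "example-model": {"input": 0.50, "output": 1.50},
}


@dataclass
class CostSummary:
    requests: int
    input_tokens: int
    output_tokens: int
    total_usd: float

    @property
    def usd_per_request(self) -> float:
        # Average cost per logged request; 0.0 when nothing was logged.
        return self.total_usd / self.requests if self.requests else 0.0


def summarize_costs(logged_responses: list[dict]) -> CostSummary:
    """Sum token usage and estimated cost over logged chat-completion responses."""
    input_tokens = output_tokens = 0
    total_usd = 0.0
    for response in logged_responses:
        usage = response["usage"]
        price = PRICES_PER_MILLION[response["model"]]
        input_tokens += usage["prompt_tokens"]
        output_tokens += usage["completion_tokens"]
        # Input and output tokens are usually billed at different rates.
        total_usd += (
            usage["prompt_tokens"] * price["input"]
            + usage["completion_tokens"] * price["output"]
        ) / 1_000_000
    return CostSummary(len(logged_responses), input_tokens, output_tokens, total_usd)


logs = [
    {"model": "example-model", "usage": {"prompt_tokens": 1000, "completion_tokens": 200}},
    {"model": "example-model", "usage": {"prompt_tokens": 3000, "completion_tokens": 600}},
]
summary = summarize_costs(logs)
print(summary.input_tokens, summary.output_tokens, round(summary.total_usd, 6))  # prints: 4000 800 0.0032
```

Grouping the logs by feature before calling `summarize_costs` would give a per-feature cost. Tracking `usd_per_request` over time helps flag prompt changes that silently increase spend.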

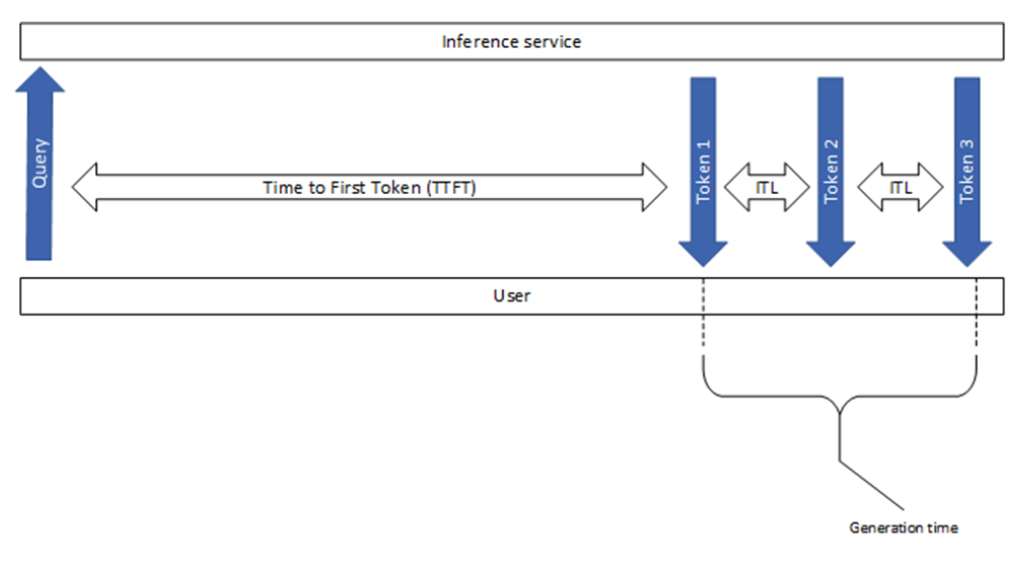

LLM Evaluation Metrics for Reliable and Optimized AI Outputs

Understanding LLM evaluation metrics is crucial for getting the most out of large language models. These metrics measure a model's accuracy, relevance and overall effectiveness using various benchmarks and criteria. They range from LLM judges applied to custom criteria to ranking metrics and semantic similarity. This guide covers key methods for LLM evaluation and benchmarking. Beyond output quality, operational metrics such as latency and throughput should also be measured to optimize LLM inference performance.

In benchmarking, trained or fine-tuned LLMs are evaluated on benchmark tasks using predefined evaluation metrics. Each model's performance is measured by its ability to generate accurate, coherent and contextually appropriate responses for each task.
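Latency and throughput can be measured with a simple timing harness. This is a minimal sketch that assumes a hypothetical `generate(prompt) -> str` callable wrapping whatever model or endpoint you serve. It runs requests sequentially, so it captures single-stream latency rather than throughput under concurrent load. It also counts whitespace-separated words as a rough stand-in for output tokens, because the real tokenizer is not assumed here.

```python
import math
import statistics
import time
from typing import Callable


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile, with pct in (0, 100]."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def benchmark(generate: Callable[[str], str], prompts: list[str]) -> dict:
    """Run prompts one at a time and report latency and throughput figures."""
    if not prompts:
        raise ValueError("prompts is empty")
    latencies = []
    output_words = 0
    start = time.perf_counter()
    for prompt in prompts:
        t0 = time.perf_counter()
        text = generate(prompt)
        latencies.append(time.perf_counter() - t0)
        # Word count is only a proxy for generated tokens.
        output_words += len(text.split())
    elapsed = time.perf_counter() - start
    return {
        "mean_latency_s": statistics.mean(latencies),
        "p50_latency_s": percentile(latencies, 50),
        "p95_latency_s": percentile(latencies, 95),
        "requests_per_s": len(prompts) / elapsed,
        "words_per_s": output_words / elapsed,
    }


def fake_generate(prompt: str) -> str:
    # Hypothetical stand-in for a real model call.
    time.sleep(0.01)
    return "a short generated answer"


print(benchmark(fake_generate, ["q1", "q2", "q3"]))
```

Reporting tail latency (p95) alongside the mean matters because a few slow generations can dominate user-perceived responsiveness. For serving-capacity numbers, the same measurements would need to be repeated with concurrent requests.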