Metrics Nvidia Docs

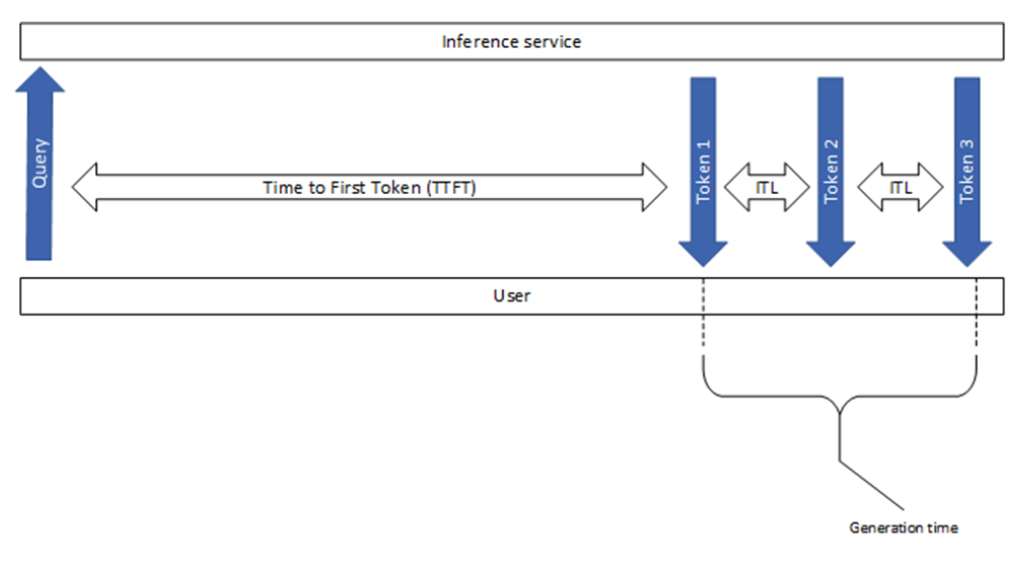

Overview of popular LLM inference performance metrics. Time to first token (TTFT) shows how long a user needs to wait before seeing the model's output: it is the time from submitting the query to receiving the first token (if the response is not empty). Metrics are numeric measurements recorded over time that are emitted from the NVIDIA Run:ai cluster, whereas telemetry is a numeric measurement recorded in real time as it is emitted from the NVIDIA Run:ai cluster.
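The first-token latency defined above (commonly called time to first token, or TTFT) can be measured client-side by timing a streamed response. The sketch below is a minimal illustration, assuming the response arrives as a Python iterable of tokens; `measure_ttft` and `fake_stream` are hypothetical names, and the simulated delay stands in for a real inference call.

```python
import time
from typing import Iterable, List, Optional, Tuple


def measure_ttft(tokens: Iterable[str], start: float) -> Tuple[Optional[float], List[str]]:
    """Return (TTFT in seconds, all received tokens).

    `start` is the time.perf_counter() timestamp taken when the query was
    submitted. TTFT is None for an empty response, since no first token arrives.
    """
    ttft: Optional[float] = None
    received: List[str] = []
    for tok in tokens:
        if ttft is None:
            # First token observed: record elapsed time since submission.
            ttft = time.perf_counter() - start
        received.append(tok)
    return ttft, received


def fake_stream():
    # Hypothetical stand-in for a streaming LLM client.
    time.sleep(0.05)  # simulated wait before the first token
    yield "Hello"
    yield " world"


start = time.perf_counter()
ttft, toks = measure_ttft(fake_stream(), start)
print(f"TTFT: {ttft:.3f}s, tokens: {toks}")
```

Taking `start` before the request is created, rather than inside the helper, keeps connection setup and queueing inside the measured interval, which matches the "from submitting the query" wording.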

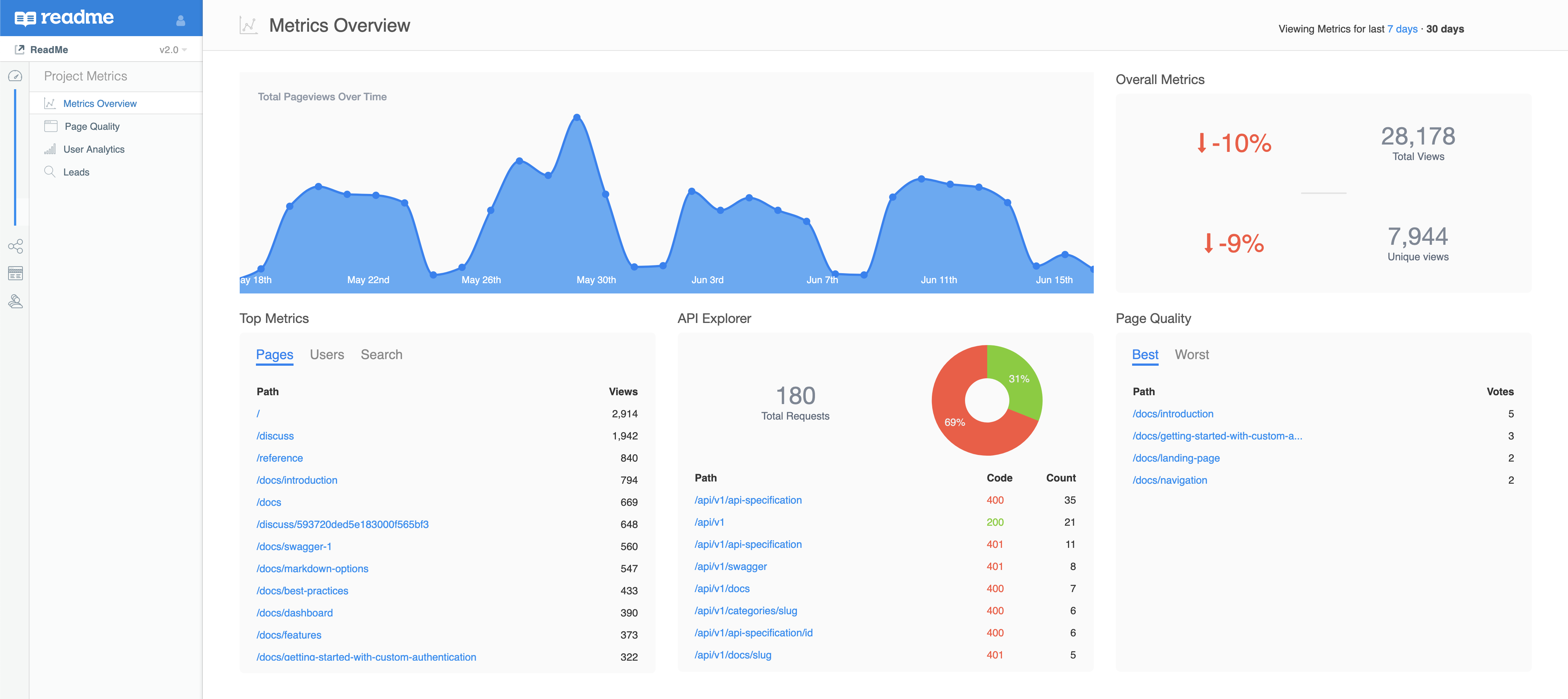

Rubric score: the rubric-based criteria scoring metric allows evaluations based on user-defined rubrics, where each rubric outlines specific scoring criteria. The LLM assesses responses according to these customized descriptions, ensuring a consistent and objective evaluation process.

The metrics facility allows the collection of user-defined metrics and allows them to be scraped or pushed to a metrics stack. By default, Prometheus-based metrics are used, exposing metric types such as gauges, counters, and histograms.

In NVIDIA Triton Inference Server, these metrics are available by default at http://localhost:8002/metrics. The metrics are only available by accessing the endpoint and are not pushed or published to any remote server. The metric format is plain text, so you can view them directly.

NVIDIA Parabricks provides accelerated tools for measuring BAM quality indices.
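Because the metrics endpoint mentioned above (`http://localhost:8002/metrics`) serves plain text, it can be scraped and parsed with only the Python standard library. The following is a minimal sketch, assuming Prometheus text-format output at that default address; `fetch_metrics`, `parse_metrics`, and the metric names in `SAMPLE` are hypothetical and only illustrate the format. The parser handles simple `name value` and `name{label="..."} value` lines, skips `# HELP`/`# TYPE` comments, and ignores trailing timestamps; it does not handle escaped quotes or commas inside label values.

```python
import urllib.request
from typing import Dict, List, Tuple

# Default address from the text above; adjust host/port for your deployment.
METRICS_URL = "http://localhost:8002/metrics"


def fetch_metrics(url: str = METRICS_URL, timeout: float = 5.0) -> str:
    """Scrape the plain-text metrics page (requires a running server)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8")


def parse_metrics(text: str) -> List[Tuple[str, Dict[str, str], float]]:
    """Parse simple Prometheus text-format samples into (name, labels, value)."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # blank line or HELP/TYPE comment
        labels: Dict[str, str] = {}
        if "{" in line:
            name, rest = line.split("{", 1)
            label_str, value_part = rest.rsplit("}", 1)
            for pair in label_str.split(","):
                if pair.strip():
                    key, val = pair.split("=", 1)
                    labels[key.strip()] = val.strip().strip('"')
        else:
            name, value_part = line.split(None, 1)
        # The first field after the name/labels is the value; a timestamp may follow.
        value = float(value_part.split()[0])
        samples.append((name.strip(), labels, value))
    return samples


# Hypothetical sample in the same text format the endpoint returns.
SAMPLE = """# HELP example_requests_total Hypothetical request counter.
# TYPE example_requests_total counter
example_requests_total{model="demo",version="1"} 42
example_queue_depth 3.5
"""

for name, labels, value in parse_metrics(SAMPLE):
    print(name, labels, value)
```

`fetch_metrics()` needs a running server, so the example parses an inline sample instead. For anything beyond quick inspection, the `prometheus_client` package's `text_string_to_metric_families` parser handles the full format.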

In a VDI environment, performance metrics are categorized into two tiers: server level and VM level. Each tier has distinct metrics that must be validated to ensure optimal performance and scalability.

For more information and expert guidance on how to select the right metrics and optimize your AI application's performance, see the GTC session "LLM Inference Sizing: Benchmarking End-to-End Inference Systems."

It provides a standardized way for Kit extensions and Carb plugins to emit, collect, and export metrics data using the OpenTelemetry standard.

To connect NVIDIA Run:ai to Grafana Labs (hosted Prometheus), you need a Grafana Cloud access policy token. This token authenticates API requests and enables secure access to your metrics data. Create an access token by following "Create access policies and tokens" in the Grafana Cloud docs.
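An access policy token is typically presented as a credential on HTTP requests to the hosted Prometheus endpoint. The sketch below is a minimal, hypothetical illustration, assuming the endpoint accepts HTTP basic authentication with the Grafana Cloud Prometheus instance ID as the username and the access policy token as the password; it does not show how NVIDIA Run:ai itself stores or uses the token. `grafana_prom_request`, the environment variable names, and the placeholder URL are all assumptions; take the real query URL and instance ID from your Grafana Cloud stack details.

```python
import base64
import os
import urllib.parse
import urllib.request


def grafana_prom_request(query_url: str, instance_id: str, token: str,
                         promql: str) -> urllib.request.Request:
    """Build (but do not send) an authenticated PromQL instant-query request.

    Assumption: basic auth with the instance ID as username and the
    access policy token as password.
    """
    creds = base64.b64encode(f"{instance_id}:{token}".encode()).decode()
    url = f"{query_url}?{urllib.parse.urlencode({'query': promql})}"
    return urllib.request.Request(url, headers={"Authorization": f"Basic {creds}"})


# Hypothetical placeholders; never hard-code a real token in source.
req = grafana_prom_request(
    os.environ.get("GRAFANA_PROM_QUERY_URL", "https://example.invalid/api/v1/query"),
    os.environ.get("GRAFANA_INSTANCE_ID", "your-instance-id"),
    os.environ.get("GRAFANA_TOKEN", "your-access-policy-token"),
    "up",
)
# urllib.request.urlopen(req) would send the request; omitted here.
print(req.full_url)
```

Reading the token from the environment keeps the secret out of source control; the request is only constructed, so the example runs without network access.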

