Metrics on the Cloud: DeepEval, the Open-Source LLM Evaluation Framework

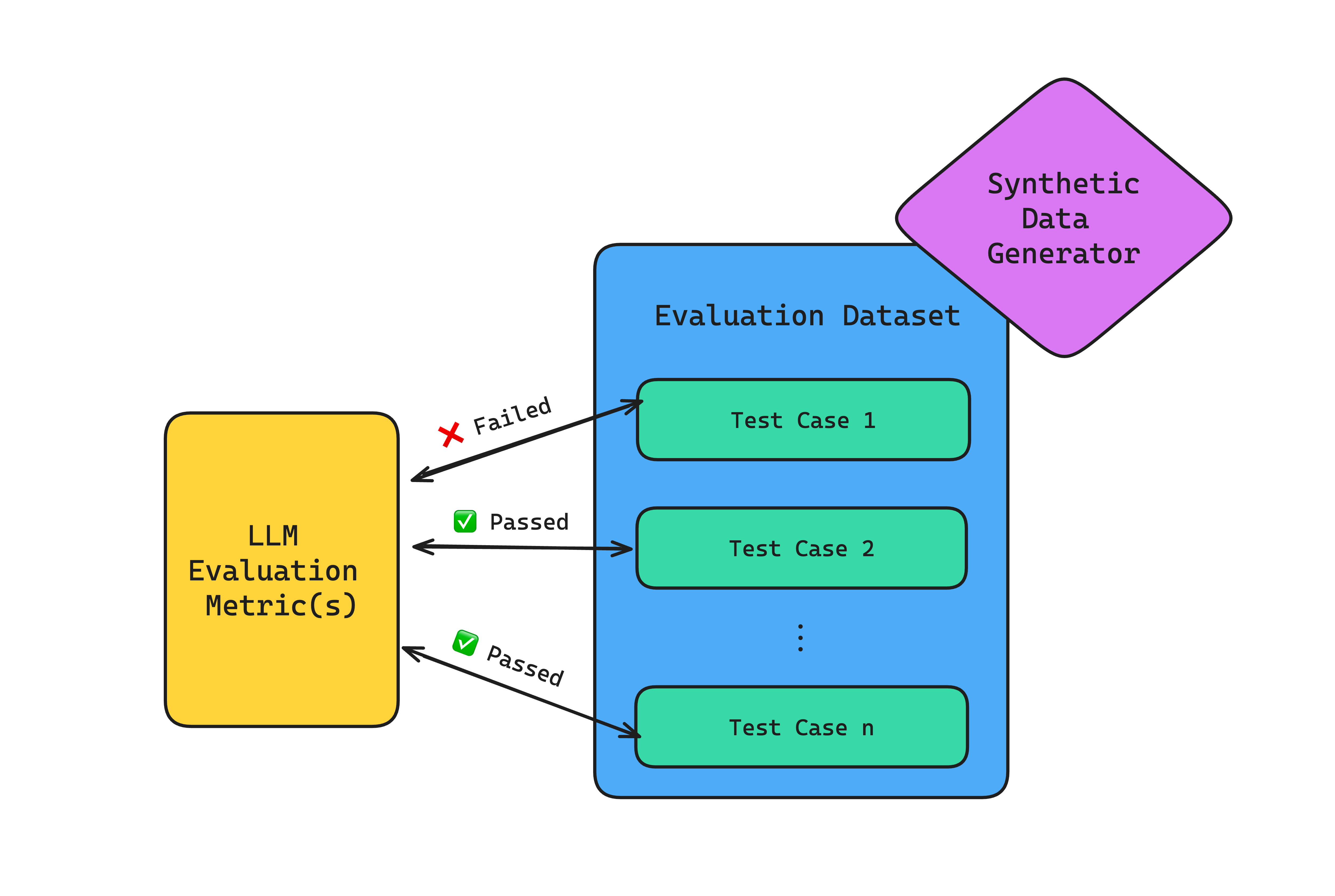

DeepEval: Open-Source LLM Evaluation Framework (Stephen Leo)

In DeepEval, a metric serves as a standard of measurement for evaluating the performance of an LLM output against a specific criterion of interest. Essentially, while the metric acts as the ruler, a test case represents the thing you're trying to measure. DeepEval incorporates the latest research to evaluate LLM outputs on metrics such as G-Eval, hallucination, answer relevancy, and RAGAS, which use LLMs and various other NLP models that run locally on your machine for evaluation.
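
To make the ruler/test-case analogy concrete, here is a minimal, dependency-free sketch. The `ToyTestCase` and `KeywordCoverageMetric` classes are hypothetical stand-ins written for illustration. They only mirror the general shape of DeepEval's `LLMTestCase` (an `input` and an `actual_output`) and of its metrics (a `threshold`, a `measure()` call that sets `score` and `reason`, and an `is_successful()` check). The scoring rule, the fraction of required keywords found in the output, is an assumption chosen because it is deterministic. It is not one of DeepEval's research-backed metrics.

```python
from dataclasses import dataclass, field


@dataclass
class ToyTestCase:
    """Hypothetical stand-in for a test case: the thing being measured."""
    input: str
    actual_output: str


@dataclass
class KeywordCoverageMetric:
    """Hypothetical deterministic metric: the ruler applied to a test case.

    Scores the fraction of required keywords that appear in the output.
    """
    keywords: list[str]
    threshold: float = 0.5
    score: float = field(default=0.0, init=False)
    reason: str = field(default="", init=False)

    def measure(self, test_case: ToyTestCase) -> float:
        text = test_case.actual_output.lower()
        missing = [k for k in self.keywords if k.lower() not in text]
        found = len(self.keywords) - len(missing)
        # An empty keyword list trivially passes (avoids division by zero).
        self.score = found / len(self.keywords) if self.keywords else 1.0
        # The reason explains why the score is not higher.
        self.reason = (
            "All keywords present." if not missing
            else "Missing keywords: " + ", ".join(missing)
        )
        return self.score

    def is_successful(self) -> bool:
        return self.score >= self.threshold


case = ToyTestCase(
    input="What does a metric do in an LLM evaluation framework?",
    actual_output="A metric scores an LLM output against a criterion.",
)
metric = KeywordCoverageMetric(keywords=["metric", "criterion", "threshold"], threshold=0.6)
metric.measure(case)
print(metric.score, metric.is_successful(), metric.reason)
```

Here two of the three keywords are present, so the score is about 0.67, which clears the 0.6 threshold, and the reason names the missing keyword. Keeping the metric separate from the test case means the same ruler can be reused across many test cases.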

How to Build Your Own LLM Evaluation Framework (AI News Club: Latest Artificial Intelligence)

With the increasing prevalence of large language models (LLMs) like OpenAI's GPT-4 and Google's Gemini, evaluating their responses becomes crucial to ensure quality, relevance, and safety.

DeepEval offers 14 LLM evaluation metrics (for both RAG and fine-tuning use cases), updated with the latest research in the LLM evaluation field. Most metrics are self-explaining, which means DeepEval's metrics will literally tell you why the metric score cannot be higher.

Learn to use DeepEval to create pytest-like relevance tests, evaluate LLM outputs with the G-Eval metric, and benchmark Qwen 2.5 using MMLU.

Having built one of the most widely adopted LLM evaluation frameworks myself, I'll use this article to teach you everything you need to know about LLM evaluation metrics, with code samples included. Ready for the long list? Let's begin. (Update: if you're looking for metrics to evaluate multi-turn LLM conversations, check out this new article.)
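
In the original G-Eval paper, an LLM judge rates an output on a small integer scale. Rather than keeping only the single most likely rating, G-Eval weights each possible rating by the probability the judge assigns to it, so the final score is the sum of each rating times its probability. The sketch below shows only that final weighting step. The probabilities are made-up illustrative numbers, and the function name `g_eval_weighted_score` and the rescaling to the 0 to 1 range are assumptions for this example, not DeepEval's internals.

```python
def g_eval_weighted_score(
    rating_probs: dict[int, float], min_rating: int = 1, max_rating: int = 5
) -> float:
    """Probability-weighted rating in the style of G-Eval, rescaled to [0, 1].

    rating_probs maps each candidate rating to the probability the judge
    assigned to it (hypothetical values here). Probabilities are renormalized
    in case only the top tokens' probabilities were available.
    """
    total = sum(rating_probs.values())
    if total <= 0:
        raise ValueError("rating probabilities must sum to a positive value")
    # Expected rating: sum of rating * probability, divided by total mass.
    expected = sum(r * p for r, p in rating_probs.items()) / total
    # Map the expected rating from [min_rating, max_rating] onto [0, 1].
    return (expected - min_rating) / (max_rating - min_rating)


probs = {1: 0.05, 2: 0.10, 3: 0.25, 4: 0.40, 5: 0.20}
print(round(g_eval_weighted_score(probs), 3))  # expected rating 3.6 -> 0.65
```

Weighting by probability yields a continuous score (3.6 here instead of a bare "4"), which makes it easier to distinguish outputs the judge would otherwise give the same integer rating.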

How I Built Deterministic LLM Evaluation Metrics for DeepEval (Confident AI)

DeepEval is a simple-to-use, open-source framework for evaluating and testing large language model (LLM) systems. It provides a comprehensive suite of metrics and features, including the ability to generate synthetic datasets, perform real-time evaluations, and integrate with testing frameworks like pytest. In the previous article, we discussed implementing common LLM evaluation metrics using RAGAS.

You can run evaluations either locally using DeepEval or in the cloud on a collection of metrics (which is also powered by DeepEval). Running evaluations locally is usually preferred because it allows greater flexibility in customizing metrics.

DeepEval is particularly powerful for RAG architectures, allowing you to evaluate the quality of the retrieved context and the faithfulness of the generated answers. It also helps with justifying LLM choices: it provides data-driven evidence for why you chose a particular LLM or configuration, based on its performance against your evaluation metrics.
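
For RAG pipelines, faithfulness is commonly scored as the number of truthful claims in the generated answer divided by the total number of claims, judged against the retrieval context. DeepEval's documentation describes its faithfulness metric this way, using an LLM to extract and verify the claims. The sketch below replaces that LLM step with a deliberately crude, deterministic rule: each sentence is treated as one claim, and a claim counts as supported only when every word of four or more letters in it also appears in the retrieval context. The function `naive_faithfulness` and this support rule are hypothetical, for illustration only.

```python
import re


def naive_faithfulness(
    actual_output: str, retrieval_context: list[str]
) -> tuple[float, list[str]]:
    """Hypothetical deterministic faithfulness: supported claims / total claims.

    Each sentence of the output is treated as one claim. A claim counts as
    supported only if every word of 4+ letters in it also appears somewhere
    in the retrieval context (a crude stand-in for LLM-based claim checking).
    Returns the score and the unsupported claims, which double as an
    explanation of why the score is not higher.
    """
    context_words = set(re.findall(r"[a-z]{4,}", " ".join(retrieval_context).lower()))
    # Split on whitespace that follows sentence-ending punctuation.
    claims = [s.strip() for s in re.split(r"(?<=[.!?])\s+", actual_output) if s.strip()]
    if not claims:
        return 1.0, []
    unsupported = [
        c for c in claims
        if not set(re.findall(r"[a-z]{4,}", c.lower())) <= context_words
    ]
    return (len(claims) - len(unsupported)) / len(claims), unsupported


context = ["DeepEval is an open-source framework for evaluating large language models."]
answer = "DeepEval is an open-source framework. It was first released in 1999."
score, unsupported = naive_faithfulness(answer, context)
print(score, unsupported)  # 0.5 ['It was first released in 1999.']
```

A word-overlap rule like this is reproducible and free to run, but it cannot recognize paraphrases or contradictions, which is why LLM-based claim verification is used for the research-backed version of the metric.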

