Benchmarking Causal Study To Interpret Large Language Models For Source Code

In an effort to bring statistical rigor to the evaluation of LLMs, this paper introduces a benchmarking strategy named Galeras, comprised of curated testbeds for three software engineering (SE) tasks (code completion, code summarization, and commit generation), to aid the interpretation of LLMs' performance.

The benchmark provides a dedicated dataset tailored for test generation purposes, enabling a comprehensive assessment and interpretation of code generation performance. One of the most common solutions adopted by software researchers to address code generation is training large language models (LLMs) on massive amounts of source code.

Related work: "How Propense Are Large Language Models at Producing Code Smells? A Benchmarking Study", in Proceedings of the 47th IEEE/ACM International Conference on Software Engineering (ICSE'25), New Ideas and Emerging Results track, Ottawa, Ontario, Canada, April 27 to May 3, 2025 (26% acceptance rate).

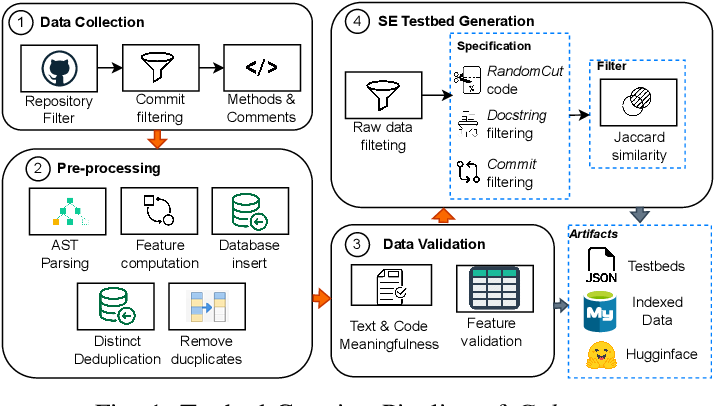

Figure 1 from Benchmarking Causal Study To Interpret Large Language Models For Source Code

Numerous benchmarks aim to evaluate the capabilities of large language models (LLMs) for causal inference and reasoning. However, many of them can likely be solved through the retrieval of domain knowledge, which calls into question whether they achieve their purpose.

While code generation has been widely used in various software development scenarios, the quality of the generated code is not guaranteed. This is a particular concern in the era of LLM-based code generation, where LLMs, regarded as complex and powerful black-box models, are instructed by a high-level natural language specification, namely a prompt, to generate code.

The paper Benchmarking Causal Study to Interpret Large Language Models for Source Code is by Daniel Rodriguez-Cardenas and 4 other authors. It introduces a benchmarking strategy named Galeras for evaluating the performance of LLMs in software engineering tasks. The strategy includes curated testbeds for code completion, code summarization, and commit generation.

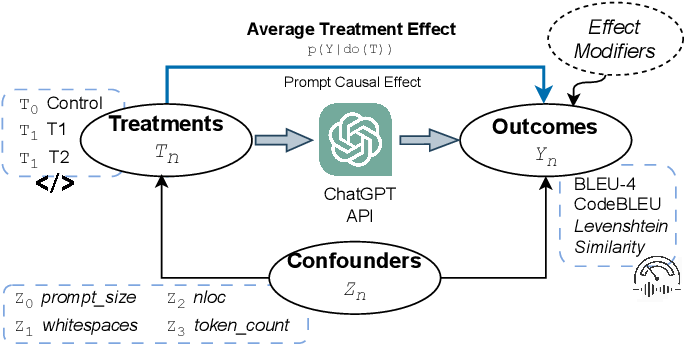

Figure 2 from Benchmarking Causal Study To Interpret Large Language Models For Source Code
