Project Optimum: Spark Performance at LinkedIn Scale

In this talk, we will cover how Project Optimum is used at LinkedIn to scale our Spark infrastructure while providing a reliable production environment. This blog post discusses how we built Spark right-sizing to automatically tune executor memory for all Spark workloads at LinkedIn, delivering a quicker and more dependable data-driven experience.
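The right-sizing idea above can be illustrated with a minimal sketch. The heuristic below is hypothetical and is not LinkedIn's algorithm. It makes four assumptions:

- You already collect peak JVM heap usage per executor for recent runs.
- A 20% headroom is added on top of the worst observed peak.
- The result is rounded up to a 512 MiB step.
- The result is clamped to a fixed range.

The only Spark-specific fact it relies on is the default executor memory overhead for JVM jobs, max(384 MiB, 10% of `spark.executor.memory`). That overhead determines the full container size the cluster manager must grant. The function names are illustrative only.

```python
import math

# Spark's default executor overhead for JVM jobs:
# max(384 MiB, 0.10 * spark.executor.memory).
MIN_OVERHEAD_MIB = 384
OVERHEAD_FACTOR = 0.10


def memory_overhead_mib(executor_memory_mib: int) -> int:
    """Default off-heap overhead added on top of spark.executor.memory."""
    return max(MIN_OVERHEAD_MIB, int(executor_memory_mib * OVERHEAD_FACTOR))


def right_size_executor_memory(
    peak_used_mib: list[int],
    headroom: float = 0.2,        # hypothetical safety margin
    granularity_mib: int = 512,   # hypothetical rounding step
    floor_mib: int = 1024,        # hypothetical lower bound
    ceiling_mib: int = 16384,     # hypothetical upper bound
) -> int:
    """Hypothetical heuristic: size the heap from the worst observed peak."""
    if not peak_used_mib:
        raise ValueError("need at least one observed run")
    target = max(peak_used_mib) * (1 + headroom)
    # Round up to the next multiple of the granularity, then clamp.
    rounded = math.ceil(target / granularity_mib) * granularity_mib
    return min(max(rounded, floor_mib), ceiling_mib)


if __name__ == "__main__":
    heap = right_size_executor_memory([2900, 3400, 3100])
    # Heap to request, and the total container size including overhead.
    print(heap, heap + memory_overhead_mib(heap))  # 4096 4505
```

Sizing from the maximum observed peak rather than the average trades some wasted memory for fewer out-of-memory failures. That trade-off matches the goal of a dependable production environment.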

Optimizing at the cluster configuration level involves carefully managing resources and choosing the right cluster manager. This ensures that your Spark cluster operates like a...

Spark performance tuning is the process of improving the performance of Spark and PySpark applications by adjusting and optimizing system resources (CPU cores and memory), tuning configurations, and following framework guidelines and best practices. Key Apache Spark optimization techniques include debunking common misconceptions, optimizing code with DataFrames and caching, and improving efficiency through configuration and storage-level tweaks.
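Tuning CPU cores and memory usually starts with how executors are packed onto nodes. The sketch below encodes a widely repeated community rule of thumb, not anything from the talk:

- Reserve one core and 1 GiB per node for the OS and node daemons.
- Use about five cores per executor.
- Give up one executor slot for the YARN ApplicationMaster.
- Size the heap so that heap plus Spark's default overhead, max(384 MiB, 10%), fits in each slot.

The helper `plan_executors` and its constants are hypothetical. Adjust them for your cluster manager and workload.

```python
from dataclasses import dataclass

# Assumed rule-of-thumb values, not official Spark defaults.
RESERVED_CORES_PER_NODE = 1       # left for the OS and node daemons
RESERVED_MEM_MIB_PER_NODE = 1024  # likewise
CORES_PER_EXECUTOR = 5            # commonly cited starting point
# Spark's default executor overhead: max(384 MiB, 10% of executor memory).
MIN_OVERHEAD_MIB = 384


@dataclass
class ExecutorLayout:
    num_executors: int
    executor_cores: int
    executor_memory_mib: int


def plan_executors(nodes: int, cores_per_node: int, mem_mib_per_node: int) -> ExecutorLayout:
    usable_cores = cores_per_node - RESERVED_CORES_PER_NODE
    per_node = usable_cores // CORES_PER_EXECUTOR
    if per_node < 1:
        raise ValueError("node too small for the assumed executor size")
    # Assumption: one executor slot is given up for the YARN ApplicationMaster.
    num_executors = nodes * per_node - 1
    slot_mib = (mem_mib_per_node - RESERVED_MEM_MIB_PER_NODE) // per_node
    # Largest heap whose heap + default overhead still fits in the slot:
    # heap * 1.1 <= slot (integer math), and heap + 384 <= slot.
    heap_mib = min(slot_mib - MIN_OVERHEAD_MIB, slot_mib * 10 // 11)
    return ExecutorLayout(num_executors, CORES_PER_EXECUTOR, heap_mib)


if __name__ == "__main__":
    layout = plan_executors(nodes=10, cores_per_node=16, mem_mib_per_node=64 * 1024)
    print(f"--num-executors {layout.num_executors} "
          f"--executor-cores {layout.executor_cores} "
          f"--executor-memory {layout.executor_memory_mib}m")
```

For ten 16-core, 64 GiB nodes, this yields 29 executors, each with 5 cores and 19549 MiB of heap. Treat the output as a starting point to validate against real job metrics, not a final setting.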

In this session, we will look at Spark's automatic performance optimization engine, the c... Learn some performance optimization tips to keep in mind when developing your Spark applications. By the end of the "High Performance Data Engineering with PySpark" course, you will be able to optimize Spark jobs, handle data skew, enforce schema consistency, and work with... By applying these optimization techniques, you'll transform your Spark jobs into high-performance pipelines that scale with your data and deliver results faster and more efficiently.
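Data skew is commonly handled by salting the hot key and aggregating in two stages. The following pure-Python simulation shows the idea without a Spark cluster. The helper names and `NUM_SALTS = 16` are illustrative assumptions.

- **Stage one** counts records per salted key, so no single group holds more than about 1/16 of the hot key's rows.
- **Stage two** strips the salt and merges the partial counts back into exact per-key totals.

In Spark, the same pattern is usually written in three steps:

1. Add a salt column (for example, derived from `rand()`).
2. Aggregate on the key plus the salt.
3. Aggregate again on the key alone.

```python
from collections import Counter, defaultdict

NUM_SALTS = 16  # hypothetical; tune to the degree of skew


def salted_key(key: str, i: int, num_salts: int = NUM_SALTS) -> str:
    # Round-robin salt keeps sub-key sizes predictable for the demo;
    # random salts are the more common choice in real jobs.
    return f"{key}#{i % num_salts}"


def two_stage_count(records: list[str], num_salts: int = NUM_SALTS):
    """Stage 1 counts per salted key; stage 2 strips the salt and merges."""
    stage1 = Counter(salted_key(k, i, num_salts) for i, k in enumerate(records))
    stage2 = defaultdict(int)
    for skey, n in stage1.items():
        key = skey.rsplit("#", 1)[0]  # drop the "#<salt>" suffix
        stage2[key] += n
    return stage1, dict(stage2)


if __name__ == "__main__":
    # One hot key with 9,000 rows plus 1,000 distinct cold keys.
    records = ["hot"] * 9000 + [f"k{j}" for j in range(1000)]
    stage1, totals = two_stage_count(records)
    # Largest unsalted group, largest salted group, recovered hot total.
    print(max(Counter(records).values()), max(stage1.values()), totals["hot"])  # 9000 563 9000
```

In this run, the largest group a single task must process shrinks from 9,000 rows to 563, while the final totals are unchanged. The cost is one extra aggregation pass.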

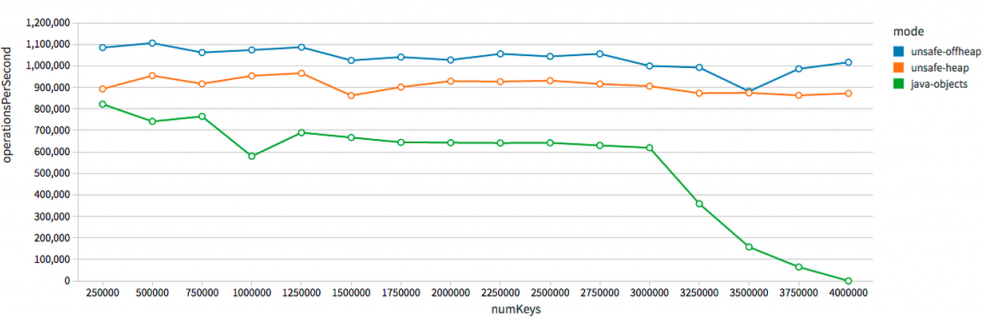
