Run LLMs Locally: Deep Dive Into Architecture

Unlocking local LLMs: a deep dive into architecture. Welcome to the ultimate guide on running LLMs locally! In this video, we break down the inner workings of large language models, starting from their core architecture.
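At the core of a GPT-style (decoder-only) transformer is causal self-attention: each token builds its output as a weighted mix of the tokens before it. The sketch below is a minimal, single-head illustration in pure Python on toy vectors. It is not taken from any particular framework, the function names are hypothetical, and real models add learned projections, multiple heads, and many stacked layers.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax over one row of attention scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def causal_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Single-head scaled dot-product attention with a causal mask.

    q, k, v each hold one vector per token (seq_len x d).
    Token i may only attend to tokens 0..i, as in decoder-only LLMs.
    """
    d = len(q[0])
    scale = 1.0 / math.sqrt(d)
    out: Matrix = []
    for i, qi in enumerate(q):
        # Scores against the current and earlier tokens only (the causal mask).
        scores = [scale * sum(a * b for a, b in zip(qi, k[j])) for j in range(i + 1)]
        weights = softmax(scores)
        # Output for token i is the weighted sum of the visible value vectors.
        out.append(
            [sum(w * v[j][c] for j, w in enumerate(weights)) for c in range(len(v[0]))]
        )
    return out
```

Because of the causal mask, the first token can only see itself, so its output is exactly its own value vector; later tokens blend information from everything that came before.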

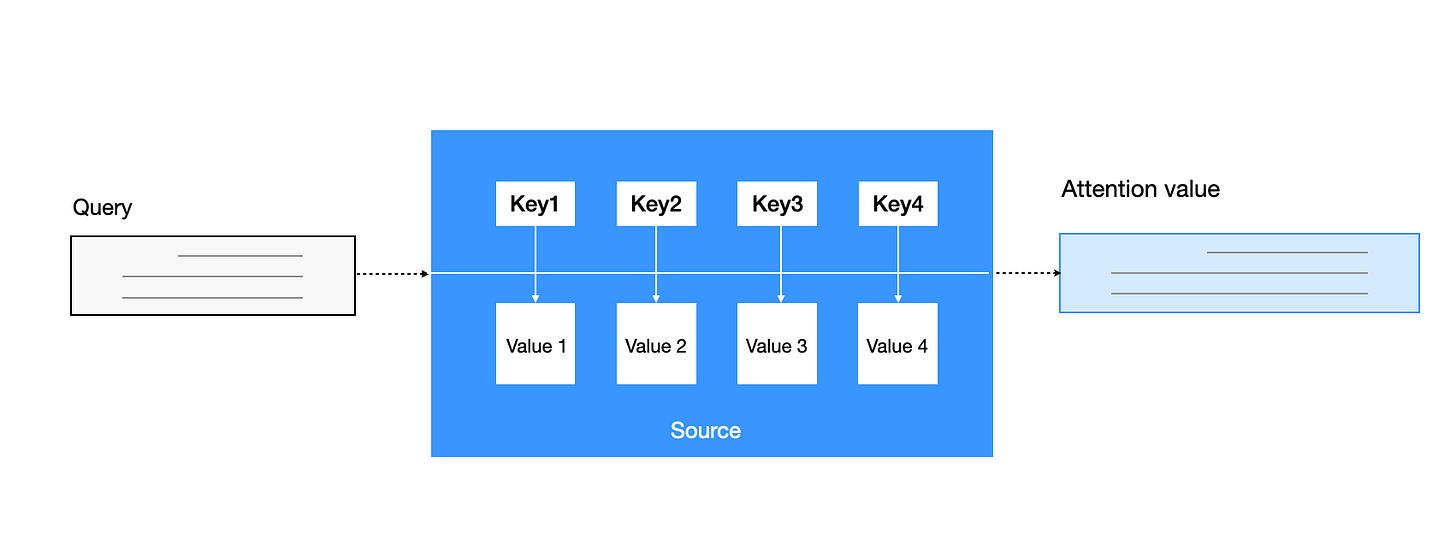

LLM Architecture: A Deep Dive Into the Heart of Large Language Models

Why run an LLM locally? Running LLMs locally offers several advantages, including privacy, offline access, and cost efficiency. This repository provides step-by-step guides for setting up and running LLMs with various frameworks, each with its own strengths and optimization techniques.

In this guide, we'll explore how to run an LLM locally, covering hardware requirements, installation steps, model selection, and optimization techniques. Whether you're a researcher, developer, or AI enthusiast, this guide will help you set up and deploy an LLM on your local machine efficiently.

We'll show seven ways to run LLMs locally with GPU acceleration on Windows 11, but the methods we cover also work on macOS and Linux.

(Figure: LLM frameworks that help us run LLMs locally. Image by Abid Ali Awan.)

If you want to learn about LLMs from scratch, a good place to start is this course on large language models (LLMs).
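Hardware requirements and model selection mostly come down to memory. A common rule of thumb (an approximation, not taken from the guides above) is that the weights alone need parameters × bits per weight ÷ 8 bytes, which is why quantized models fit on much smaller machines. In the sketch below, the function names are hypothetical and the 20% overhead factor is an assumed placeholder for the KV cache and runtime buffers, which in practice grow with context length.

```python
def estimate_weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate memory needed just to store the weights, in GB (1 GB = 10**9 bytes)."""
    bytes_total = num_params * bits_per_weight / 8  # 8 bits per byte
    return bytes_total / 1e9


def fits_in_memory(
    num_params: float,
    bits_per_weight: float,
    available_gb: float,
    overhead_factor: float = 1.2,  # assumed ~20% extra for KV cache and runtime buffers
) -> bool:
    """Rough check of whether a model fits in the given RAM/VRAM budget."""
    needed = estimate_weight_memory_gb(num_params, bits_per_weight) * overhead_factor
    return needed <= available_gb


# Example: a 7-billion-parameter model at common precisions.
for bits in (16, 8, 4):
    print(f"7B @ {bits}-bit: ~{estimate_weight_memory_gb(7e9, bits):.1f} GB of weights")
```

The loop prints about 14.0, 7.0, and 3.5 GB, so under this estimate a 7B model at 16-bit precision will not fit in 8 GB, while a 4-bit quantized version comfortably does.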

Local LLMs: can you run them, and why are they useful? This seminar is designed to introduce participants to running a large language model (LLM) locally on their own machines with Ollama and Open WebUI.

We tend to imagine gigantic servers chugging away in a top-secret data center, not something we could possibly run on our personal devices. But here's the twist: you can run LLMs locally.

In this guide, you will learn the step-by-step process for running LLMs such as DeepSeek and other open-weight models locally (ChatGPT itself is a hosted service whose weights are not available for download). The guide covers three proven methods for installing LLMs locally on macOS, Windows, or Linux, so choose the method that suits your workflow and hardware.

Local execution of LLMs lets you experiment with models on your own hardware without sending sensitive data to external servers. Two popular approaches include Ollama, a CLI-driven tool that runs a local model server, abstracts model management, and provides quick setup for interactive testing.
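Because Ollama exposes a local HTTP API (by default at `http://localhost:11434`), a script can query a model without any third-party packages. The sketch below uses only Python's standard library and Ollama's `/api/generate` endpoint. The model name `llama3.2` is just an example and assumes it was pulled beforehand with `ollama pull llama3.2`; the helper function names are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local address


def build_generate_request(model: str, prompt: str, url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send a prompt to a locally running Ollama server and return the generated text."""
    req = build_generate_request(model, prompt)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With stream=False, the whole completion arrives in the "response" field.
    return body["response"]
```

For example, once the Ollama server is running, `generate("llama3.2", "Explain what a transformer layer does in one sentence.")` returns the completion as a string. Setting `stream` to `False` keeps the sketch simple; by default Ollama streams the answer as a sequence of newline-delimited JSON chunks.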

