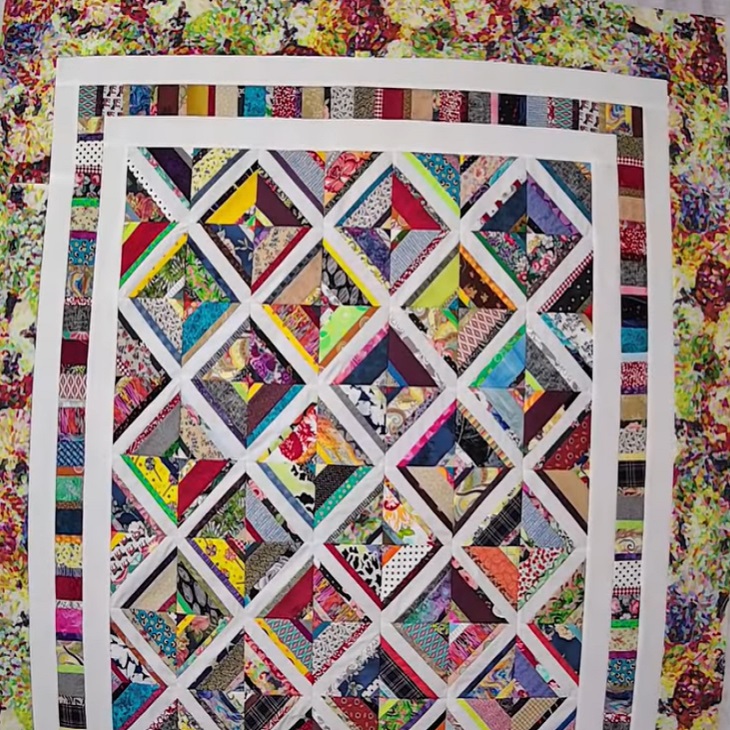

Scrappy Strips Quilt

Scrapy 2.13 documentation

Scrapy is an open-source framework for efficient web scraping and data extraction. It is a fast, high-level web crawling and web scraping framework, used to crawl websites and extract structured data from their pages. It can be used for a wide range of purposes, from data mining to monitoring and automated testing. Having trouble? The FAQ answers some common questions.

Scrapy tutorial

In this tutorial, we'll assume that Scrapy is already installed on your system. If that's not the case, see the installation guide. We are going to scrape quotes.toscrape.com, a website that lists quotes from famous authors. This tutorial will walk you through these tasks:

1. Creating a new Scrapy project
2. Writing a spider to crawl a site and extract data
3. Exporting the scraped data

Scrapy at a glance

Scrapy (/ˈskreɪpaɪ/) is an application framework for crawling web sites and extracting structured data, which can be used for a wide range of useful applications, like data mining, information processing or historical archival. Even though Scrapy was originally designed for web scraping, it can also be used to extract data using APIs (such as Amazon Associates Web Services). Download the latest stable release of Scrapy and start your web scraping journey today.

Command line tool

Scrapy is controlled through the `scrapy` command-line tool, referred to here as the "Scrapy tool" to differentiate it from the sub-commands, which we just call "commands" or "Scrapy commands". The Scrapy tool provides several commands for multiple purposes, and each one accepts a different set of arguments and options. (The `scrapy deploy` command has been…)

Explore essential resources for Scrapy developers, including the official documentation, to help you master web scraping from setup to large-scale deployment. If you're already familiar with installing Python packages, you can install Scrapy and its dependencies from PyPI with `pip install Scrapy`.

Spiders

Spiders are classes which define how a certain site (or a group of sites) will be scraped, including how to perform the crawl (i.e. follow links) and how to extract structured data from their pages (i.e. scraping items). In other words, spiders are the place where you define the custom behaviour for crawling and parsing pages for a particular site (or, in some cases, a group of sites).

