Stable Diffusion Depth Map Library Image To U

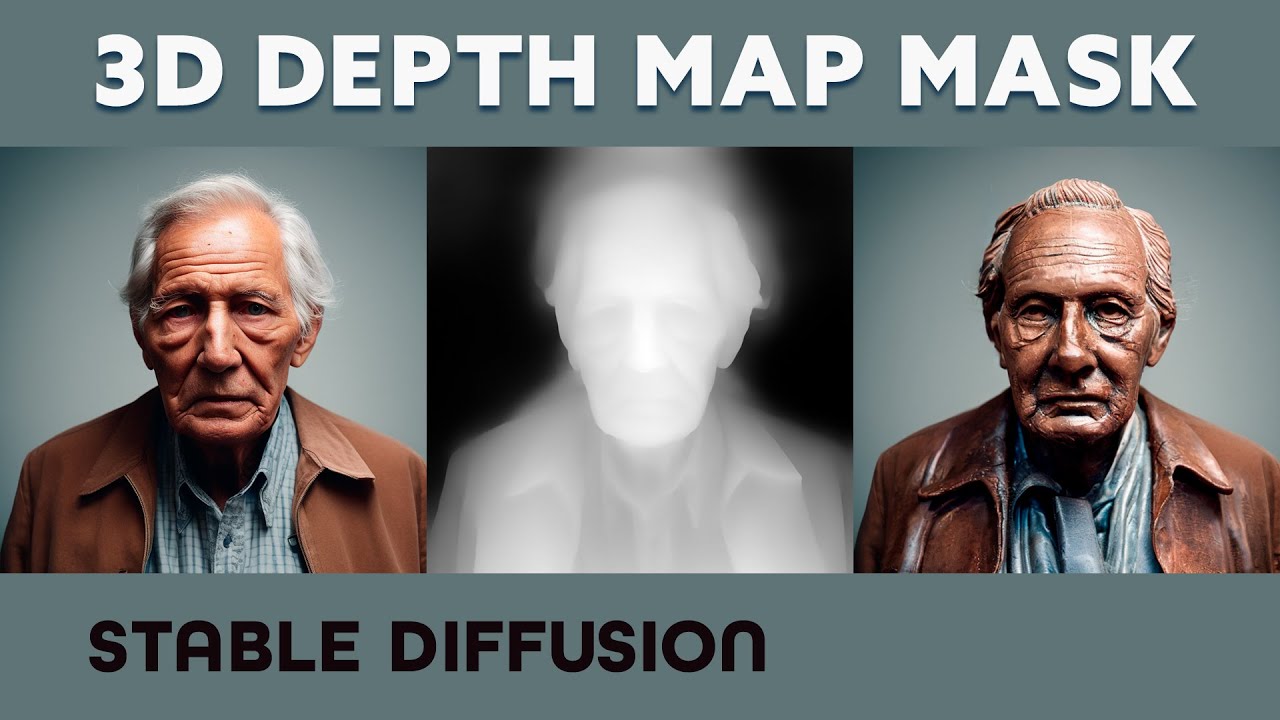

This script is an add-on for AUTOMATIC1111's Stable Diffusion web UI that creates depth maps and, more recently, 3D stereo image pairs (side-by-side or anaglyph) from a single image. In depth-to-image, Stable Diffusion takes an image and a prompt as inputs, as it does in image-to-image. The model first estimates the depth map of the input image using MiDaS, an AI model developed in 2019 for monocular depth estimation (that is, estimating depth from a single view).
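To make the stereo-pair and anaglyph idea concrete, here is a minimal NumPy sketch of one common approach: shift each pixel horizontally by a disparity proportional to its depth, once in each direction, then combine the two views. This is not the add-on's actual code. The function names, the `max_shift` parameter, and the convention that depth is normalized to [0, 1] with 1.0 meaning nearest are all assumptions made for illustration.

```python
import numpy as np


def shift_view(image, depth, max_shift, direction):
    """Build one eye's view by sampling each pixel from a horizontally offset column.

    image: (H, W, 3) uint8 array.
    depth: (H, W) floats in [0, 1]; 1.0 = nearest (assumed convention).
    direction: +1 moves near content right (left eye), -1 moves it left (right eye).
    This is backward mapping using the target pixel's depth, which is only an
    approximation; it leaves no holes but smears content at depth edges.
    """
    h, w, _ = image.shape
    disparity = np.rint(depth * max_shift).astype(int) * direction
    cols = np.arange(w)[None, :] - disparity  # source column for every target pixel
    cols = np.clip(cols, 0, w - 1)            # repeat edge pixels instead of wrapping
    rows = np.arange(h)[:, None]
    return image[rows, cols]


def stereo_pair(image, depth, max_shift=8):
    """Return (left, right) views derived from a single image and its depth map."""
    left = shift_view(image, depth, max_shift, +1)
    right = shift_view(image, depth, max_shift, -1)
    return left, right


def side_by_side(left, right):
    """Place the two views next to each other along the width axis."""
    return np.concatenate([left, right], axis=1)


def anaglyph(left, right):
    """Red-cyan anaglyph: red channel from the left view, green and blue from the right."""
    out = right.copy()
    out[..., 0] = left[..., 0]
    return out
```

A typical call would be `left, right = stereo_pair(image, depth)` followed by `side_by_side(left, right)` or `anaglyph(left, right)`. Larger `max_shift` values exaggerate the 3D effect but also make the edge smearing more visible.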

The Stable Diffusion model can also infer depth from an image using MiDaS. This allows you to pass a text prompt and an initial image to condition the generation of new images, along with a depth map to preserve the image structure. Images and depth maps are rendered at 704x704 unless otherwise noted.

The depth-to-image model, introduced in Stable Diffusion 2, drew wide interest for its ability to create realistic depth effects. The model takes an image as input and generates a corresponding depth map. Once you have an image and its depth map, you can open Depthy in your web browser, upload both, and create a range of 3D effects: export an animated GIF, anaglyph 3D image, lens-blur JPG, or video, or adjust the depth map directly on screen.
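As a rough illustration of passing a text prompt and an initial image to the depth-conditioned model, the sketch below uses the `StableDiffusionDepth2ImgPipeline` class from Hugging Face `diffusers` with the `stabilityai/stable-diffusion-2-depth` checkpoint. The text above does not say which toolkit it has in mind, so treat this choice as an assumption. The wrapper name `depth_guided_img2img` and its defaults are hypothetical. When no `depth_map` argument is supplied, the pipeline estimates depth itself with its bundled depth estimator.

```python
def depth_guided_img2img(init_image, prompt, negative_prompt=None,
                         strength=0.7, device="cuda"):
    """Generate a new image that follows the depth structure of init_image.

    init_image: a PIL image. strength controls how far the result may drift
    from the input (0.0 keeps it, 1.0 ignores it apart from depth).
    """
    # Deferred imports: the heavy dependencies load only when the function runs.
    import torch
    from diffusers import StableDiffusionDepth2ImgPipeline

    pipe = StableDiffusionDepth2ImgPipeline.from_pretrained(
        "stabilityai/stable-diffusion-2-depth",
        # Half precision is only a sensible default on GPU.
        torch_dtype=torch.float16 if device == "cuda" else torch.float32,
    ).to(device)

    result = pipe(
        prompt=prompt,
        image=init_image,
        negative_prompt=negative_prompt,
        strength=strength,
    )
    return result.images[0]
```

Calling it might look like `depth_guided_img2img(Image.open("input.png"), "two tigers")`, where the file name and prompt are placeholders. Loading the pipeline on every call is wasteful; in practice you would likely create it once and reuse it.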

A depth map library is also available for use with the ControlNet extension for the AUTOMATIC1111 Stable Diffusion web UI. Depth-guided Stable Diffusion uses depth maps to guide the model, which can produce images with more convincing depth and a structure that stays closer to the source. Version 2 adds a depth-guided image-to-image mode alongside the standard image-to-image and text-to-image modes, and it can be very useful.

Stable Diffusion v2 was primarily trained on subsets of LAION-2B(en), which consists of images limited to English descriptions. Texts and images from communities and cultures that use other languages are likely to be insufficiently accounted for.

