Meet SWE-Perf: Benchmarking LLMs for Real-World Code Performance Optimization at the Repository Level

SWE-Perf, introduced by TikTok researchers, is the first benchmark designed to evaluate large language models (LLMs) on repository-level code performance optimization, that is, on optimization tasks set within genuine, complex repository contexts.

TikTok's SWE-Perf sets a new benchmark for testing LLMs on real-world code performance optimization at the repository level, bridging AI research and engineering needs. Developed by researchers from TikTok and collaborating institutions, it is built to systematically assess how well LLMs can optimize code performance within authentic software repositories.
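To make "optimizing code performance" concrete, here is a minimal Python sketch of one way a benchmark could score a patch: time the same workload before and after the change, confirm the behavior is unchanged, and report the relative runtime reduction. The helper names (`measure_runtime`, `relative_gain`), the median-of-repeats timing, and the `1 - after / before` formula are illustrative assumptions, not SWE-Perf's actual harness or metric.

```python
import statistics
import time
from typing import Callable


def measure_runtime(workload: Callable[[], object], repeats: int = 5) -> float:
    """Return the median wall-clock time (seconds) of running `workload`.

    Hypothetical helper: the median of several runs damps timing noise.
    """
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        workload()
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)


def relative_gain(before_s: float, after_s: float) -> float:
    """Fraction of runtime saved by a patch; positive means faster.

    Illustrative metric (an assumption): 1 - after / before.
    """
    if before_s <= 0:
        raise ValueError("baseline runtime must be positive")
    return 1.0 - after_s / before_s


# Toy "original" and "optimized" versions of the same computation.
def slow_sum_of_squares(n: int = 200_000) -> int:
    total = 0
    for i in range(n):
        total += i * i
    return total


def fast_sum_of_squares(n: int = 200_000) -> int:
    # Closed form for 0^2 + 1^2 + ... + (n-1)^2.
    return (n - 1) * n * (2 * n - 1) // 6


if __name__ == "__main__":
    # An optimization only counts if the result is unchanged.
    assert slow_sum_of_squares() == fast_sum_of_squares()
    before = measure_runtime(slow_sum_of_squares)
    after = measure_runtime(fast_sum_of_squares)
    print(f"runtime reduced by {relative_gain(before, after):.1%}")
```

In a real repository, the single `assert` would be replaced by the project's own test suite, and the toy functions by the repository's performance workloads.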

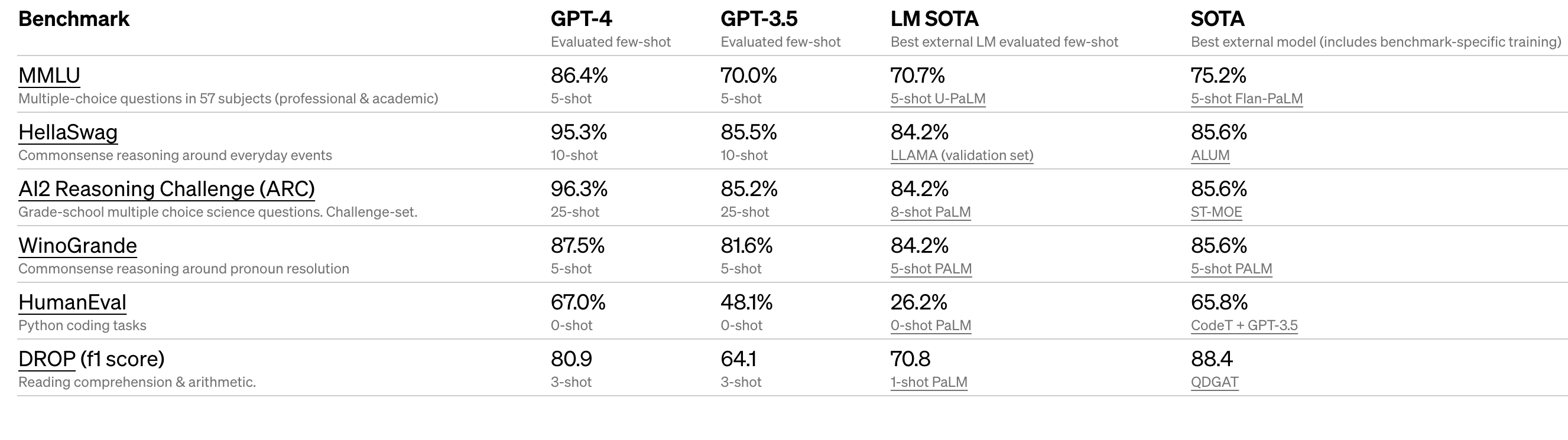

As large language models (LLMs) advance on software engineering tasks, from code generation to bug fixing, performance optimization remains an elusive frontier, particularly at the repository level. Traditional benchmarks often focus on isolated tasks, whereas SWE-Perf captures the intricacies of repository-scale performance tuning. Built by analyzing over 100,000 GitHub pull requests, it provides a robust dataset for measuring how effectively LLMs optimize code.

Leaderboard for the SWE-Perf benchmark, showing the performance of leading AI models on code optimization tasks.
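Mining pull requests for genuine performance improvements can be pictured as a filter over candidate PRs. The sketch below is hypothetical throughout: the `PullRequest` record and its fields, the `example/repo` name, and the 5% gain threshold are illustrative assumptions, not SWE-Perf's published selection criteria.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class PullRequest:
    # Hypothetical record of one candidate PR and its measured effect.
    repo: str
    number: int
    tests_pass_after: bool   # does the test suite still pass with the PR applied?
    runtime_before_s: float  # benchmark runtime on the parent commit
    runtime_after_s: float   # benchmark runtime with the PR applied


def is_performance_pr(pr: PullRequest, min_gain: float = 0.05) -> bool:
    """Keep PRs that preserve correctness and cut runtime by at least `min_gain`.

    The 5% default threshold is an illustrative assumption.
    """
    if not pr.tests_pass_after or pr.runtime_before_s <= 0:
        return False
    gain = 1.0 - pr.runtime_after_s / pr.runtime_before_s
    return gain >= min_gain


def select_instances(prs: Iterable[PullRequest], min_gain: float = 0.05) -> List[PullRequest]:
    """Return only the candidate PRs that pass the performance filter."""
    return [pr for pr in prs if is_performance_pr(pr, min_gain)]


if __name__ == "__main__":
    candidates = [
        PullRequest("example/repo", 1, True, 2.0, 1.5),   # 25% faster: kept
        PullRequest("example/repo", 2, True, 2.0, 1.98),  # 1% faster: dropped
        PullRequest("example/repo", 3, False, 2.0, 1.0),  # tests fail: dropped
    ]
    print([pr.number for pr in select_instances(candidates)])  # [1]
```

Requiring passing tests before looking at runtime reflects the idea that a faster but broken change is not an optimization.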
