SWE-bench: Can Language Models Resolve Real-World GitHub Issues? (Princeton University)

We introduce SWE-bench, an evaluation framework consisting of 2,294 software engineering problems drawn from real GitHub issues and corresponding pull requests across 12 popular Python repositories. Our evaluations show that both state-of-the-art proprietary models and our fine-tuned model SWE-Llama can resolve only the simplest issues. The best-performing model, Claude 2, is able to solve a mere 1.96% of the issues.

SWE-bench is a benchmark for evaluating large language models on real-world software issues collected from GitHub. Given a codebase and an issue, a language model is tasked with generating a patch that resolves the described problem. SWE-bench can be accessed programmatically and uses Docker for reproducible evaluations.

We evaluate state-of-the-art LM systems on SWE-bench and find that they largely struggle to generate functional and well-integrated solutions to real issues. Further, we release a training dataset and a fine-tuned version of CodeLlama (SWE-Llama) to promote open research in this domain.

We collect 2,294 task instances by crawling pull requests and issues from 12 popular Python repositories. Each instance is based on a pull request that (1) is associated with an issue, and (2) modified at least one testing-related file.
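The two selection criteria for task instances can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the `PullRequest` record, its field names, and the path heuristic in `touches_tests` are hypothetical and are not taken from the SWE-bench codebase.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class PullRequest:
    """Hypothetical, simplified record of a merged pull request."""
    number: int
    linked_issues: list[int] = field(default_factory=list)
    changed_files: list[str] = field(default_factory=list)


def touches_tests(path: str) -> bool:
    """Assumed heuristic: a path is test-related if any path component
    contains the substring 'test'. A real pipeline may use other rules."""
    return any("test" in part.lower() for part in path.split("/"))


def is_candidate(pr: PullRequest) -> bool:
    """Criterion (1): linked to an issue. Criterion (2): modifies >= 1 test file."""
    has_issue = len(pr.linked_issues) > 0
    has_test_change = any(touches_tests(p) for p in pr.changed_files)
    return has_issue and has_test_change


# Made-up pull requests, used only to exercise the filter.
prs = [
    PullRequest(1, linked_issues=[10], changed_files=["pkg/core.py", "tests/test_core.py"]),
    PullRequest(2, linked_issues=[], changed_files=["pkg/io.py", "tests/test_io.py"]),
    PullRequest(3, linked_issues=[11], changed_files=["docs/index.rst"]),
]
candidates = [pr.number for pr in prs if is_candidate(pr)]
print(candidates)  # [1]
```

Only the first pull request satisfies both conditions: the second has no linked issue, and the third changes no test-related file.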

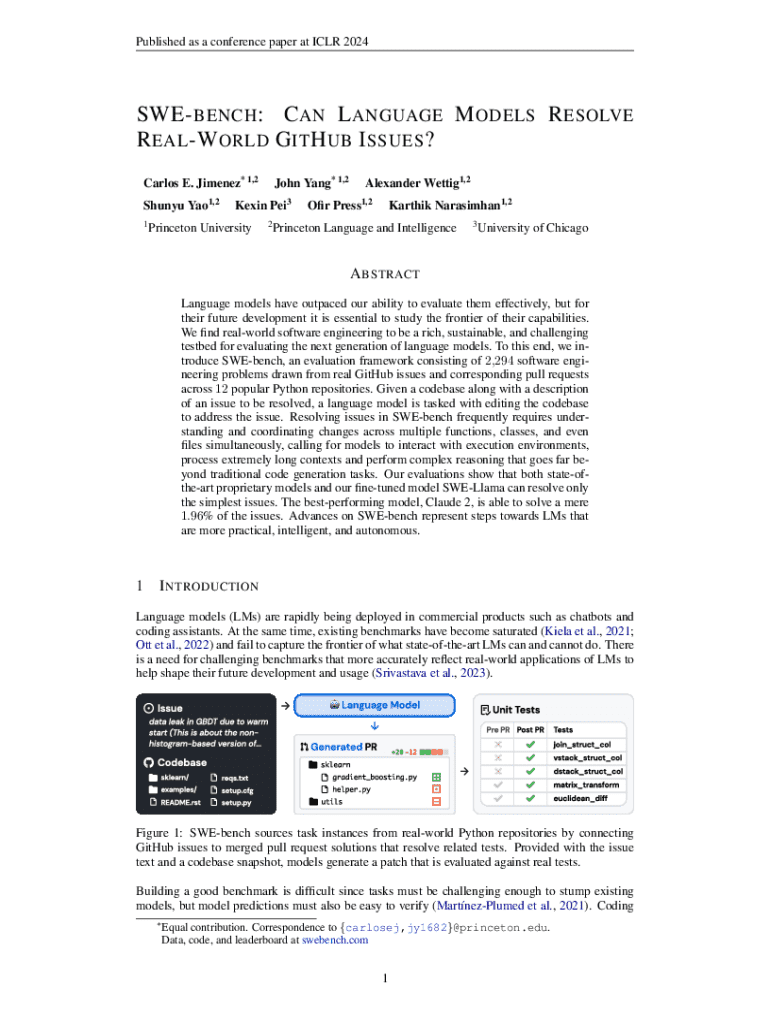

Figure 1: SWE-bench sources task instances from real-world Python repositories by connecting GitHub issues to merged pull request solutions that resolve related tests. Provided with the issue text and a codebase snapshot, models generate a patch that is evaluated against real tests.

Claude 2 and GPT-4 solve a mere 4.8% and 1.7% of instances respectively, even when provided with an oracle retriever.
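As a rough sketch of how a patch might be judged against tests and how a resolution percentage follows from that, consider the code below. The text above only states that patches are evaluated against real tests; the specific criterion used here (tests that failed before the fix must now pass, and tests that passed before must keep passing) and the names `fail_to_pass`, `pass_to_pass`, `is_resolved`, and `resolve_rate` are assumptions for illustration.

```python
from __future__ import annotations


def is_resolved(results: dict[str, bool],
                fail_to_pass: list[str],
                pass_to_pass: list[str]) -> bool:
    """results maps test name -> whether it passed after applying the patch.

    Assumed criterion: every previously failing target test now passes,
    and no previously passing test has been broken. Missing tests count
    as failures.
    """
    fixed = all(results.get(t, False) for t in fail_to_pass)
    unbroken = all(results.get(t, False) for t in pass_to_pass)
    return fixed and unbroken


def resolve_rate(outcomes: list[bool]) -> float:
    """Percentage of task instances whose patch counted as resolved."""
    if not outcomes:
        return 0.0
    return 100.0 * sum(outcomes) / len(outcomes)


# Made-up results for four hypothetical task instances.
outcomes = [
    is_resolved({"t_bug": True, "t_old": True}, ["t_bug"], ["t_old"]),   # fixed, nothing broken
    is_resolved({"t_bug": False, "t_old": True}, ["t_bug"], ["t_old"]),  # bug not fixed
    is_resolved({"t_bug": True, "t_old": False}, ["t_bug"], ["t_old"]),  # regression introduced
    is_resolved({"t_bug": True}, ["t_bug"], []),                         # fixed, no other tests
]
print(resolve_rate(outcomes))  # 50.0
```

Under this criterion a patch that fixes the reported bug but breaks an existing test does not count as resolved, which is one way to read the requirement that solutions be both functional and well integrated.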


