Table 1 from SWE-bench: Can Language Models Resolve Real-World GitHub Issues? (Semantic Scholar)

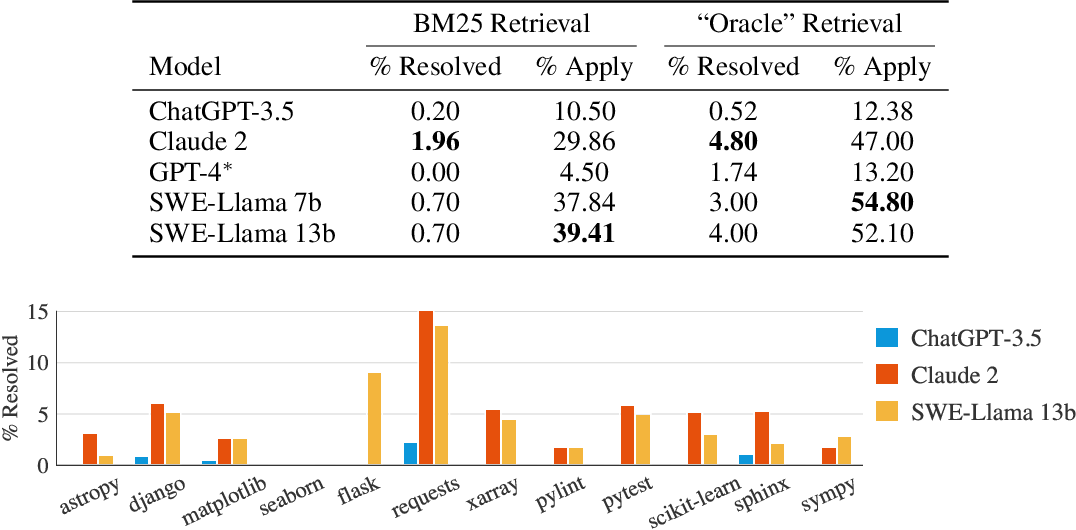

SWE-bench: Can Language Models Resolve Real-World GitHub Issues? (Princeton Language and …)

SWE-bench is a benchmark for evaluating large language models on real-world software issues collected from GitHub. Given a codebase and an issue, a language model is tasked with generating a patch that resolves the described problem. Our evaluations show that both state-of-the-art proprietary models and our fine-tuned model SWE-Llama can resolve only the simplest issues. The best-performing model, Claude 2, is able to solve a mere 1.96% of the issues.
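A resolution rate such as the 1.96% quoted above can be read as "resolved task instances divided by all task instances". The sketch below illustrates that arithmetic only. The `TaskResult` record, its field names, and the rule that a task counts as resolved only when the patch applies and every checked test passes are assumptions made for illustration, not the benchmark's actual harness.

```python
from dataclasses import dataclass


@dataclass
class TaskResult:
    """Outcome of one model-generated patch (hypothetical record, not the real harness)."""
    instance_id: str
    patch_applied: bool             # did the patch apply cleanly to the codebase snapshot?
    test_outcomes: dict[str, bool]  # test name -> whether it passed after patching


def is_resolved(result: TaskResult) -> bool:
    # Assumption: a task is resolved only if the patch applied, at least one
    # test was run, and every one of those tests passed.
    return (
        result.patch_applied
        and bool(result.test_outcomes)
        and all(result.test_outcomes.values())
    )


def percent_resolved(results: list[TaskResult]) -> float:
    """Share of task instances resolved, as a percentage."""
    if not results:
        return 0.0
    return 100.0 * sum(is_resolved(r) for r in results) / len(results)


# Made-up toy results, not data from the paper.
results = [
    TaskResult("repo-a-1", True, {"test_x": True, "test_y": True}),
    TaskResult("repo-a-2", True, {"test_x": True, "test_y": False}),
    TaskResult("repo-b-1", False, {}),
    TaskResult("repo-b-2", True, {"test_z": True}),
]
print(f"{percent_resolved(results):.2f}% resolved")  # 50.00% resolved
```

Two of the four toy instances satisfy the assumed rule, so the script reports 50.00%. A partially passing patch (`repo-a-2`) counts as unresolved under this rule.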

Table 23: This is another example where SWE-Llama 13b solves the task successfully. This example is interesting because the model develops a somewhat novel solution compared to the reference that is arguably more efficient and cleaner.

Inspired by this, we introduce SWE-bench, a benchmark that evaluates LMs in a realistic software engineering setting. As shown in Figure 1, models are tasked to resolve issues (typically a bug report or a feature request) submitted to popular GitHub repositories.

Figure 1: SWE-bench sources task instances from real-world Python repositories by connecting GitHub issues to merged pull request solutions that resolve related tests. Provided with the issue text and a codebase snapshot, models generate a patch that is evaluated against real tests.

Table 7: We compare model performance on task instances from before or after 2023. Most models show little difference in performance. *Due to budget constraints, GPT-4 is evaluated on a 25% random subset of SWE-bench tasks, which may impact performance here.
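The before/after 2023 comparison described for Table 7 amounts to splitting task instances by date and computing a resolution rate per group. The following is a minimal sketch of that split. The cutoff of 1 January 2023, the `(created, resolved)` pair layout, and the function name are assumptions for illustration, and the sample values are invented rather than taken from the paper.

```python
from datetime import date

# Assumption: "before or after 2023" is read as a cutoff at 1 January 2023.
CUTOFF = date(2023, 1, 1)


def resolve_rate_by_period(instances):
    """Return the percentage resolved per period.

    instances: iterable of (created: date, resolved: bool) pairs (hypothetical layout).
    A period with no instances maps to None.
    """
    buckets = {"before_2023": [0, 0], "after_2023": [0, 0]}  # [resolved, total]
    for created, resolved in instances:
        key = "before_2023" if created < CUTOFF else "after_2023"
        buckets[key][0] += int(resolved)
        buckets[key][1] += 1
    return {
        key: (100.0 * n_resolved / total if total else None)
        for key, (n_resolved, total) in buckets.items()
    }


# Made-up toy values, not results from the paper.
toy_instances = [
    (date(2021, 5, 3), True),
    (date(2022, 1, 17), False),
    (date(2022, 8, 9), False),
    (date(2022, 11, 30), False),
    (date(2023, 2, 14), True),
    (date(2023, 6, 1), False),
]
print(resolve_rate_by_period(toy_instances))
```

A similar rate in both groups would match the caption's observation that most models show little difference across the two periods.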

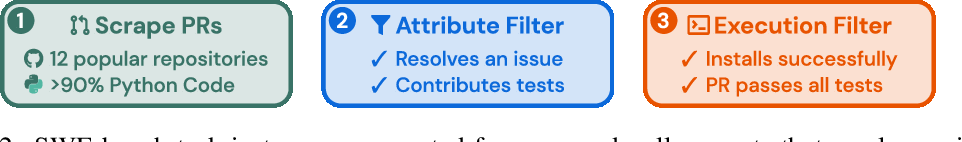

Figure 2 from SWE-bench: Can Language Models Resolve Real-World GitHub Issues? (Semantic Scholar)
