The Definitive Guide to LLM Benchmarking (Confident AI)

In this article, I'll show how benchmarking can help you choose the right LLM for your use case. This covers everything from synthetic data generation to formatting that data into test cases ready for LLM evaluation and testing, which you can use in just 2 lines of code. And the best part is, you can leverage any LLM of your choice.
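The benchmarking workflow described above (format data into test cases, run each candidate LLM over them, compare scores) can be sketched in plain Python. This is a minimal illustration, not the DeepEval API: `BenchmarkCase`, `to_benchmark_cases`, `benchmark`, and `pick_best` are hypothetical names, each "model" is assumed to be any callable from prompt to text, and exact-match accuracy stands in for whatever metric your use case actually needs.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


# Hypothetical test-case shape: an input prompt plus the answer we expect.
@dataclass
class BenchmarkCase:
    input: str
    expected_output: str


def to_benchmark_cases(rows: List[Dict[str, str]]) -> List[BenchmarkCase]:
    """Format raw (e.g. synthetically generated) question/answer rows into test cases."""
    return [BenchmarkCase(input=r["question"], expected_output=r["answer"]) for r in rows]


def exact_match(actual: str, expected: str) -> float:
    """Score 1.0 if the whitespace-trimmed, lowercased strings match, else 0.0."""
    return 1.0 if actual.strip().lower() == expected.strip().lower() else 0.0


def benchmark(model: Callable[[str], str], cases: List[BenchmarkCase]) -> float:
    """Run every test case through `model` and return mean exact-match accuracy."""
    if not cases:
        raise ValueError("benchmark needs at least one test case")
    scores = [exact_match(model(c.input), c.expected_output) for c in cases]
    return sum(scores) / len(scores)


def pick_best(models: Dict[str, Callable[[str], str]], cases: List[BenchmarkCase]) -> str:
    """Return the name of the model with the highest benchmark score."""
    results = {name: benchmark(fn, cases) for name, fn in models.items()}
    return max(results, key=results.get)
```

In practice each callable would wrap a real LLM client; keeping models as plain callables makes it easy to swap in "any LLM of your choice" without changing the harness.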

In this article, we will explain how to evaluate an LLM application, such as a RAG pipeline, the right way.

How does Confident AI work? You can get started with LLM evaluation and observability in the 5-minute quickstart guide. Confident AI supports evals and tracing for any LLM use case, including multi-turn ones.

This article goes through everything on G-Eval, so that anyone can easily evaluate LLM apps on any task-specific criteria.

Join our weekly newsletter to stay confident in the AI systems you build. Our articles include tutorials, guides, and essays on safely building and evaluating LLMs.
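G-Eval, as described in the original G-Eval paper, prompts a judge LLM with the evaluation criteria plus chain-of-thought evaluation steps, then takes the final score as the probability-weighted sum of the possible score tokens rather than the single sampled score. The sketch below shows only those two pieces with the standard library; it does not call a real LLM, the prompt wording is an assumption, and `build_judge_prompt` and `g_eval_score` are hypothetical names, not DeepEval's API.

```python
from typing import Dict, List


def build_judge_prompt(criteria: str, steps: List[str], test_input: str, actual_output: str) -> str:
    """Assemble a judge prompt from task-specific criteria and numbered evaluation steps.

    The exact wording here is illustrative; real implementations use their own templates.
    """
    numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    return (
        f"Evaluation criteria: {criteria}\n"
        f"Evaluation steps:\n{numbered}\n\n"
        f"Input: {test_input}\n"
        f"Output: {actual_output}\n\n"
        "Score the output from 1 to 5."
    )


def g_eval_score(score_probs: Dict[int, float]) -> float:
    """Probability-weighted score: sum over s of s * p(s).

    `score_probs` maps each candidate score token (e.g. 1..5) to the judge
    LLM's probability of emitting it. The probabilities are renormalized over
    the score tokens, because the judge may put some mass on other tokens.
    """
    total = sum(score_probs.values())
    if total <= 0:
        raise ValueError("need positive probability mass on at least one score")
    return sum(s * p for s, p in score_probs.items()) / total
```

Weighting by token probabilities yields a continuous score, which separates outputs that a single sampled integer score would rate as ties.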

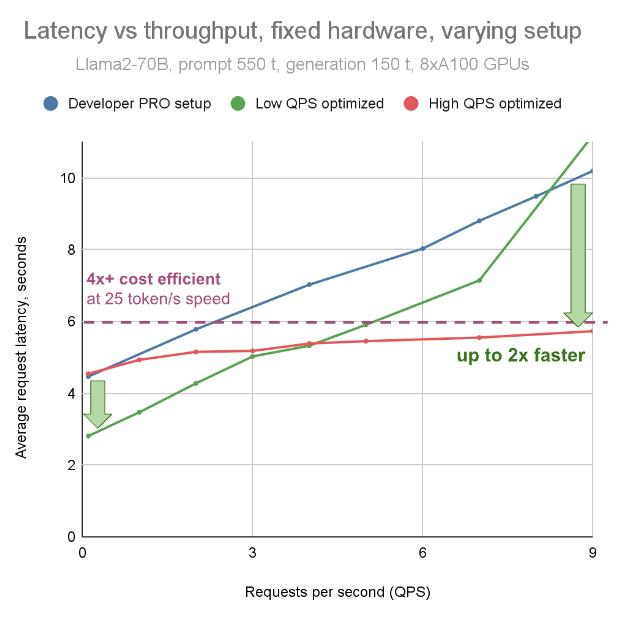

Confident AI's evaluation features are fully integrated with DeepEval. All the features you've seen up to this point in the documentation lead up to the LLM evaluation suite.

In this ebook, we will delve into how to set this up and make sure it is reliable. While using AI to evaluate AI may sound circular, we have always had human intelligence evaluate human intelligence (for example, at a job interview or in your college finals). Now AI systems can finally do the same for other AI systems.

We will discuss how to make your own benchmark at the end of the junior block. In the senior block, we will talk about evaluating workflows and agents.
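Using AI to evaluate AI usually means an LLM-as-a-judge loop: generate an output for each test input, have a judge score it, and flag every case that falls below a pass threshold. The sketch below assumes `judge` is a stand-in for a call to a judge LLM returning a score in [0, 1]; `EvalResult`, `evaluate`, `failing`, and the 0.5 default threshold are hypothetical choices for illustration, not part of DeepEval.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class EvalResult:
    input: str
    actual_output: str
    score: float
    passed: bool


def evaluate(
    inputs: List[str],
    generate: Callable[[str], str],         # the LLM app under test (assumed callable)
    judge: Callable[[str, str], float],     # stand-in for a judge LLM, returns a score in [0, 1]
    threshold: float = 0.5,                 # hypothetical pass/fail cut-off
) -> List[EvalResult]:
    """Generate an output for each input, score it with the judge, and mark pass/fail."""
    if not 0.0 <= threshold <= 1.0:
        raise ValueError("threshold must be in [0, 1]")
    results = []
    for case_input in inputs:
        output = generate(case_input)
        score = judge(case_input, output)
        results.append(EvalResult(case_input, output, score, score >= threshold))
    return results


def failing(results: List[EvalResult]) -> List[EvalResult]:
    """Return only the test cases that did not meet the threshold."""
    return [r for r in results if not r.passed]
```

Keeping the failing cases (input, output, and score together) is what makes them useful afterwards: they are the natural seed for a custom benchmark of your own.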

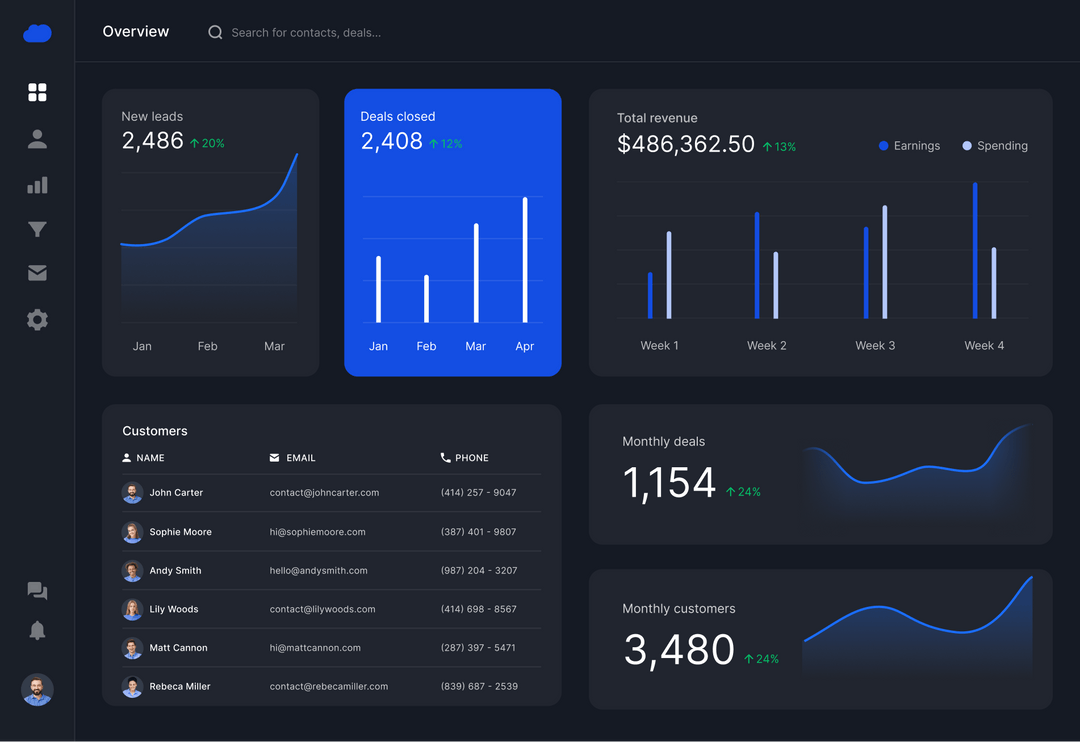

Confident AI: The DeepEval LLM Evaluation Platform