Unlocking Local LLMs: Deep Dive Into Architecture & Python App Demo

Apex Hours

Welcome to the ultimate guide on running LLMs locally! In this video, we break down the inner workings of large language models, from their core architecture to the nitty-gritty of inference at a lower level.

Dive Deep Into Python Software Architecture: A Comprehensive Guide (Code With C)

Running LLMs locally offers several advantages, including privacy, offline access, and cost efficiency. This repository provides step-by-step guides for setting up and running LLMs using various frameworks, each with its own strengths and optimization techniques. General requirements for running LLMs locally:

Tutorial: Running AI Models Locally with Ollama

Welcome to this tutorial on running AI models locally with Ollama. I'm excited to share a powerful tool that can help you integrate AI into your applications without relying on cloud-based APIs.

Don't panic just yet: we are about to break down and explain some key parts of this code. `app = FastAPI()` creates the web API, a REST service that, once the Python program is executed, starts listening for and serving requests (prompts) using a local LLM. `class Prompt(BaseModel):` together with its field `prompt: str` defines the JSON input schema through which the prompt is passed to the LLM.
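The FastAPI app described above does two things. It validates a JSON body of the form `{"prompt": "..."}` and forwards that prompt to a locally running model. The sketch below reproduces both steps with the Python standard library only, so it runs without FastAPI or Pydantic. A dataclass stands in for the `Prompt(BaseModel)` schema. The sketch assumes the local model is served by Ollama at its default address `http://localhost:11434` through the `/api/generate` endpoint. The model name `llama3` and the helper names `parse_prompt`, `build_generate_request`, and `generate` are hypothetical.

```python
import json
import urllib.request
from dataclasses import dataclass

# Assumption: Ollama's default local address and its generate endpoint.
OLLAMA_URL = "http://localhost:11434/api/generate"


@dataclass
class Prompt:
    """Stand-in for the Pydantic `Prompt` model: a single required string field."""
    prompt: str


def parse_prompt(body: bytes) -> Prompt:
    """Validate a raw JSON request body against the {"prompt": str} schema."""
    data = json.loads(body)  # invalid JSON raises json.JSONDecodeError (a ValueError)
    if not isinstance(data, dict) or not isinstance(data.get("prompt"), str):
        raise ValueError('expected a JSON object with a string "prompt" field')
    return Prompt(prompt=data["prompt"])


def build_generate_request(p: Prompt, model: str = "llama3") -> urllib.request.Request:
    """Build (but do not send) a non-streaming POST request for the local model server."""
    payload = {"model": model, "prompt": p.prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(p: Prompt, model: str = "llama3", timeout: float = 120.0) -> str:
    """Send the prompt and return the generated text.

    Requires a running Ollama instance with `model` already pulled.
    """
    req = build_generate_request(p, model)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]
```

In the FastAPI version, the framework performs the `parse_prompt` step automatically when an endpoint declares a `Prompt` parameter. The endpoint body then only needs to call something like `generate` and return its result.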

Setup Local LLMs: Applied Python Training

Foundry Local is a set of development tools designed to help you build and evaluate LLM applications on your local machine. It provides a curated collection of production-quality tools, including evaluation and prompt-engineering capabilities, that are fully compatible with Azure AI.

This report provides an expert-level deep dive into the Model Context Protocol (MCP). It explores MCP's architecture, its operational workflow, and its practical integration with local Ollama instances.
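MCP messages are JSON-RPC 2.0 requests. A client discovers a server's tools with `tools/list` and invokes one with `tools/call`, passing the tool's `name` and an `arguments` object. The sketch below only builds those message bodies. In an Ollama-backed setup, this is roughly what a client would send once the local model has chosen a tool. Transport (stdio or HTTP), the `initialize` handshake, and response handling are all omitted. The tool name `get_weather` and its arguments are hypothetical.

```python
import json
from itertools import count
from typing import Any, Dict, Optional

# Unique request ids let the client match each JSON-RPC response to its request.
_next_id = count(1)


def jsonrpc_request(method: str, params: Optional[Dict[str, Any]] = None) -> str:
    """Serialize a JSON-RPC 2.0 request object."""
    message: Dict[str, Any] = {"jsonrpc": "2.0", "id": next(_next_id), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


def list_tools() -> str:
    """Ask an MCP server which tools it exposes."""
    return jsonrpc_request("tools/list")


def call_tool(name: str, arguments: Dict[str, Any]) -> str:
    """Invoke one MCP tool by name with a JSON object of arguments."""
    return jsonrpc_request("tools/call", {"name": name, "arguments": arguments})
```

Keeping message construction separate from transport makes the same helpers usable whether the MCP server runs as a local subprocess or behind HTTP.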

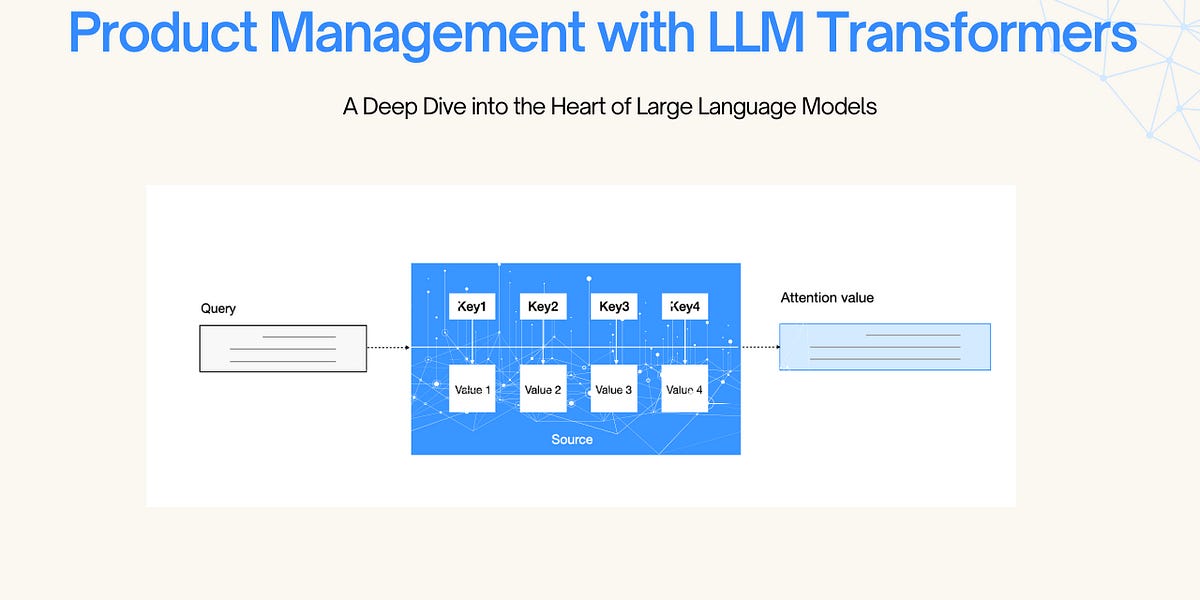

LLMs Architecture: A Deep Dive Into the Heart of Large Language Models

Large language models (LLMs) like GPT-4, LLaMA, and Falcon have revolutionized AI applications, enabling advanced capabilities in natural language processing (NLP). This article provides an in…

This repository contains expanded tutorials and Python notebooks to accompany the textbook Large Language Models: A Deep Dive, by Kamath, Keenan, Somers, and Sorenson.

