Upstream Prematurely Closed Connection While Reading Response Header - Support - Nextcloud

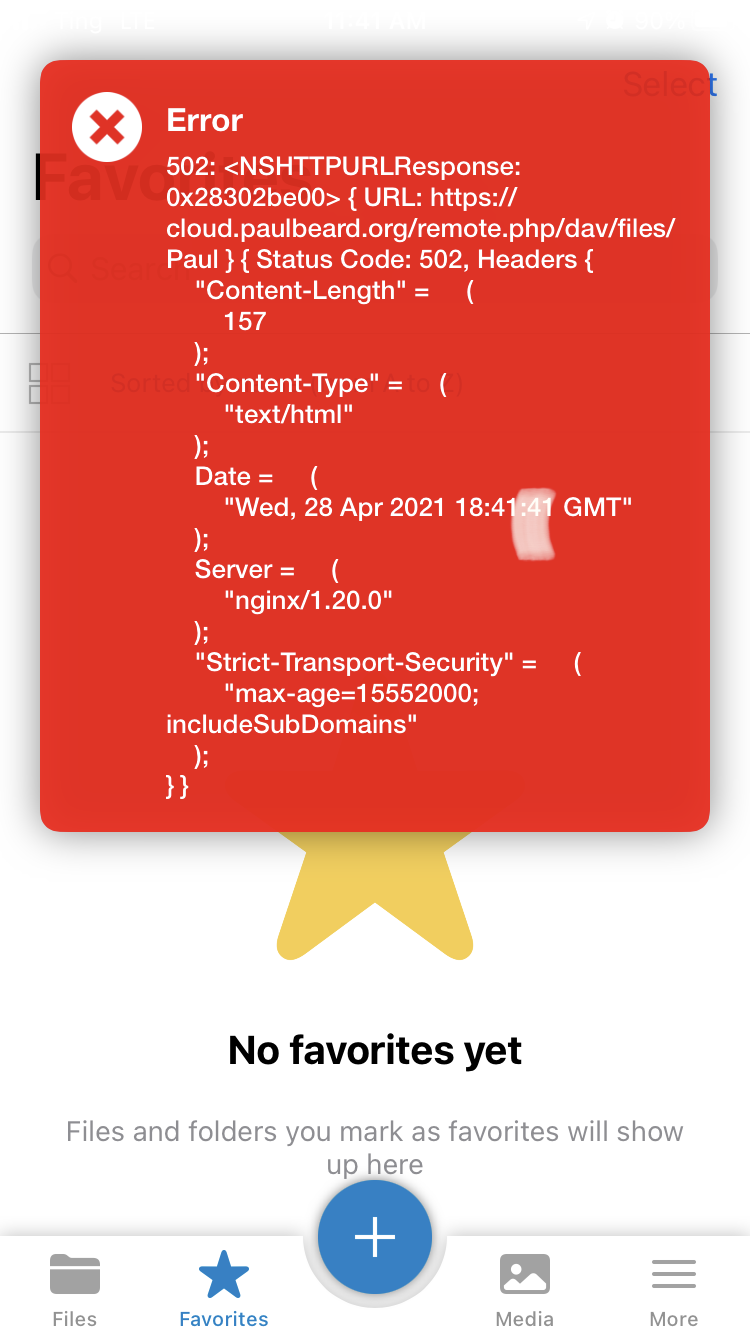

Upstream Prematurely Closed Connection While Reading Response Header - Support - Nextcloud

So I only updated the http part, and now I get a 502 Bad Gateway error. When I look at /var/log/nginx/error.log, I see the famous "upstream prematurely closed connection while reading response header from upstream". This only started in the past day or so. None of the config here is exceptional in any way, and it used to work just fine. Other PHP-based services with authentication (WordPress and Cacti network management) work just fine, so this is specific to Nextcloud, not to my setup.
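Before changing any configuration, it can help to see which upstream and which requests actually trigger the message. The following is a minimal Python sketch, assuming the standard nginx error-log layout (`YYYY/MM/DD HH:MM:SS [level] pid#tid: *conn message, key: value, ...`). The function name `summarize` and the sample lines (hosts, socket path, addresses) are hypothetical and only for illustration.

```python
import re
from collections import Counter

# Standard nginx error-log prefix: timestamp, [level], pid#tid:, optional *connection-id.
LINE_RE = re.compile(
    r'^(?P<ts>\d{4}/\d{2}/\d{2} \d{2}:\d{2}:\d{2}) \[(?P<level>\w+)\] '
    r'\d+#\d+: (?:\*\d+ )?(?P<msg>.*)$'
)
UPSTREAM_RE = re.compile(r'upstream: "(?P<upstream>[^"]*)"')
REQUEST_RE = re.compile(r'request: "(?P<request>[^"]*)"')

# Covers both the "connection" and the "FastCGI stdout" variants of the message.
NEEDLE = "upstream prematurely closed"


def summarize(lines):
    """Count premature-close errors per (upstream, request) pair."""
    counts = Counter()
    for line in lines:
        m = LINE_RE.match(line)
        if not m or NEEDLE not in m.group("msg"):
            continue
        up = UPSTREAM_RE.search(line)
        req = REQUEST_RE.search(line)
        key = (
            up.group("upstream") if up else "?",
            req.group("request") if req else "?",
        )
        counts[key] += 1
    return counts


# Hypothetical sample lines, made up for illustration only.
SAMPLE = [
    '2024/04/10 09:13:00 [error] 3980#3980: *29815576 upstream prematurely closed '
    'connection while reading response header from upstream, client: 203.0.113.7, '
    'server: cloud.example.com, request: "GET /index.php HTTP/1.1", '
    'upstream: "fastcgi://unix:/run/php/php-fpm.sock:", host: "cloud.example.com"',
    '2024/04/10 09:13:05 [error] 3980#3980: *29815580 upstream prematurely closed '
    'FastCGI stdout while reading response header from upstream, client: 203.0.113.7, '
    'server: cloud.example.com, request: "GET /status.php HTTP/1.1", '
    'upstream: "fastcgi://unix:/run/php/php-fpm.sock:", host: "cloud.example.com"',
    '2024/04/10 09:13:09 [error] 3980#3980: *29815581 connect() failed '
    '(111: Connection refused) while connecting to upstream, client: 203.0.113.7, '
    'server: cloud.example.com, request: "GET / HTTP/1.1", '
    'upstream: "http://10.0.0.5:30080/", host: "cloud.example.com"',
]
```

To run it against a real log, pass an open file instead of the sample, for example `summarize(open("/var/log/nginx/error.log"))`. If one request path dominates the counts, the problem is more likely in that application code path than in the nginx configuration.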

How To Fix Nginx Upstream Prematurely Closed Connection While Reading Response Header From

Learn how to resolve the "nginx upstream prematurely closed connection while reading response header from upstream" error. This guide provides practical solutions to fix this common nginx issue.

It started when we enabled TLS 1.3 from nginx to upstreams.

When you encounter the `upstream prematurely closed connection while reading response header from upstream` error, it usually means that the upstream server unexpectedly closed the connection while nginx was trying to read the response headers from it[^1]. This problem may stem from a variety of factors.

I traced one of those error sources to the nginx error log saying "upstream prematurely closed FastCGI stdout while reading response header from upstream". What would be the easiest way to debug what is causing these random events?
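One way to approach the debugging question is to take nginx out of the picture and talk to the upstream directly. The sketch below assumes the upstream speaks plain HTTP (a `proxy_pass` backend); it does not apply to FastCGI or uwsgi sockets, which use a different protocol. The function name `probe_upstream` and the host and port in the usage note are hypothetical.

```python
import http.client
import socket


def probe_upstream(host, port, path="/", timeout=5.0):
    """Send one GET straight to the upstream and classify the outcome."""
    conn = http.client.HTTPConnection(host, port, timeout=timeout)
    try:
        conn.request("GET", path)
        resp = conn.getresponse()
        return f"ok: status {resp.status}"
    except http.client.RemoteDisconnected:
        # The peer closed the socket before sending a status line,
        # which is the situation nginx reports as a premature close.
        return "closed before headers"
    except socket.timeout:
        return "timed out"
    except OSError as exc:
        # Refused, reset, unreachable, and similar socket-level failures.
        return f"connection error: {exc.__class__.__name__}"
    finally:
        conn.close()
```

Calling something like `probe_upstream("127.0.0.1", 8080, "/slow-endpoint")` in a loop while the errors occur can show whether the backend itself drops connections. A result of "closed before headers" without nginx in the path suggests the backend process is crashing, being killed, or hitting its own timeout, rather than nginx being misconfigured.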

Upstream Prematurely Closed Connection While Reading Upstream Causes And Solutions

Upstream prematurely closed connection while reading response header from upstream. Hi team, I have deployed an OpenSearch cluster and am trying to access the service via ingress. However, I am getting an error in the nginx ingress pod logs.

We have an external nginx proxy, and we tested our site from different IPs. All HTTP traffic is sent to the nginx proxy, and the proxy forwards it to a Kubernetes NodePort (we do not use ingress). The proxy has an upstream that points to the 2 kube master IPs.

Here is the working uWSGI configuration file (autoboot.ini) that solved the timeout issue, and thus the 502 gateway issue (upstream closed prematurely).

After having spent hours trying to detect the issue, I stumbled upon the following error: 2024/04/10 09:13:00 [error] 3980#3980: *29815576 upstream prematurely closed connection while reading response header from upstream. With that information at hand, I skimmed countless webpages to identify the root cause.

Understanding And Fixing Upstream Prematurely Closed Connection In Nginx - Akmatori Blog
