"Which LLM Should I Use?": Evaluating LLMs for Tasks Performed by Undergraduate Computer Science Students in India

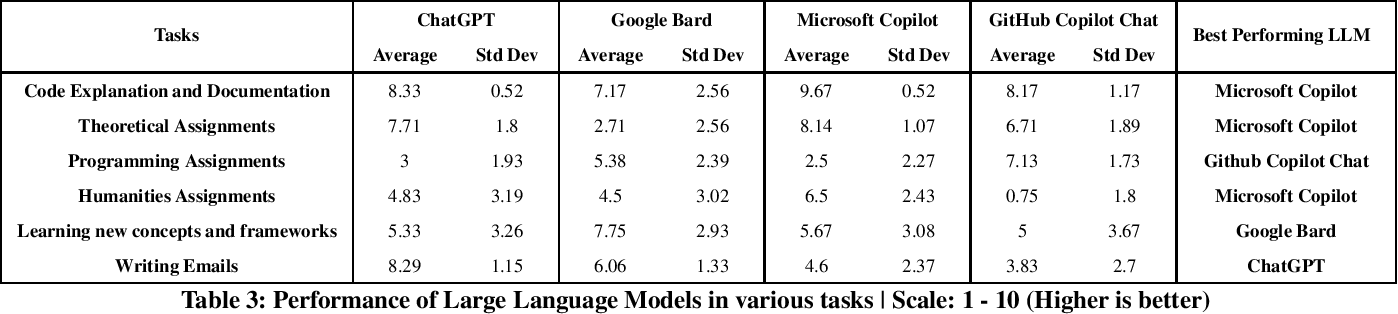

Our research systematically assesses some of the publicly available LLMs, such as Google Bard, ChatGPT (3.5), GitHub Copilot Chat, and Microsoft Copilot, across diverse tasks commonly encountered by undergraduate computer science students in India. This study aims to guide students and instructors in selecting a suitable LLM for a given task, and offers insights on how students and instructors can use LLMs constructively.

Selecting the best large language model for your use case requires balancing performance, cost, and infrastructure considerations. Choosing the right LLM for your organization is a strategic decision that can strongly affect how well you can apply AI to natural language processing tasks. Evaluating LLMs is crucial to identifying potential risks, analyzing how these models interact with humans, determining their capabilities and limitations for specific tasks, and ensuring that their training progresses effectively. Reviewing the top industry practices for assessing large language models (LLMs) and their applications is a useful starting point.

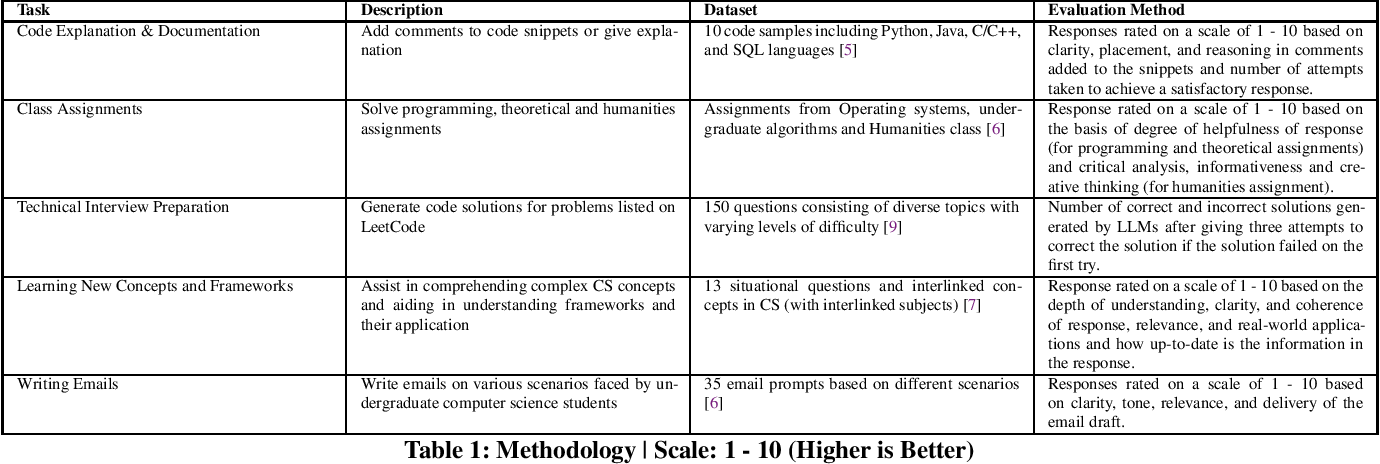

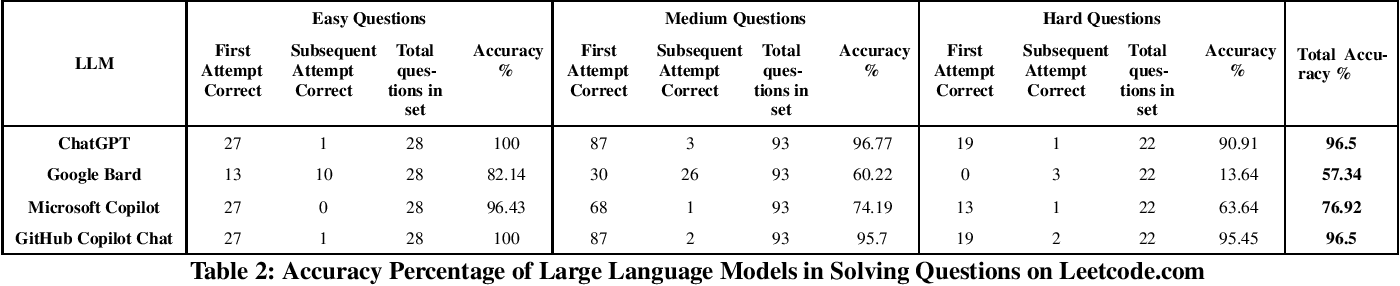

We each have personal experiences with different models, and many people use different models for different tasks: in some users' experience, Bard is better for analysis and synthesis, Claude for code generation, GPT for general knowledge, and so on.

Recently, LLMs themselves have been used to evaluate LLMs on unstructured tasks. The idea is to ask a second LLM to rate the quality of the first LLM's response against a predefined criterion. In its simplest form, the second LLM is asked to classify the first LLM's response as good or bad.

The pace of advancement of large language models (LLMs) motivates the use of existing infrastructure to automate the evaluation of LLM performance on computing education tasks. Concept inventories are well suited for evaluation because of their careful design and prior validity evidence.

Table 2: Accuracy percentage of large language models in solving questions on LeetCode (from "'Which LLM Should I Use?': Evaluating LLMs for Tasks Performed by Undergraduate Computer Science Students in India").
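The LLM-as-judge idea described above, in its simplest good/bad form, can be sketched roughly as follows. This is a minimal illustration, not the method of any study mentioned here: the names `JudgeFn`, `build_judge_prompt`, `parse_verdict`, `judge_response`, and `stub_judge` are all hypothetical, the prompt wording is an assumption, and `stub_judge` is a keyword-matching stand-in for a real call to a second model.

```python
from typing import Callable

# Hypothetical type: any function that sends a prompt to the judge model
# and returns its raw text reply. A real implementation would wrap an API call.
JudgeFn = Callable[[str], str]


def build_judge_prompt(task: str, response: str, criterion: str) -> str:
    """Assemble the grading prompt shown to the second (judge) LLM."""
    return (
        "You are grading another model's answer.\n"
        f"Task: {task}\n"
        f"Answer: {response}\n"
        f"Criterion: {criterion}\n"
        "Reply with exactly one word: GOOD or BAD."
    )


def parse_verdict(reply: str) -> str:
    """Map the judge's free-text reply to GOOD, BAD, or UNPARSEABLE."""
    words = reply.strip().upper().split()
    first = words[0].strip(".,:;!\"'") if words else ""
    return first if first in {"GOOD", "BAD"} else "UNPARSEABLE"


def judge_response(task: str, response: str, criterion: str, judge: JudgeFn) -> str:
    """Ask the judge model to classify one response against one criterion."""
    return parse_verdict(judge(build_judge_prompt(task, response, criterion)))


def stub_judge(prompt: str) -> str:
    """Stand-in for a real judge model (assumption, for demonstration only).

    It labels an answer GOOD only if the answer line mentions "O(n log n)".
    """
    answer_line = next(
        line for line in prompt.splitlines() if line.startswith("Answer: ")
    )
    return "GOOD" if "O(n log n)" in answer_line else "BAD"


if __name__ == "__main__":
    verdict = judge_response(
        task="State the worst-case time complexity of merge sort.",
        response="Merge sort runs in O(n log n) time in the worst case.",
        criterion="The stated complexity is correct.",
        judge=stub_judge,
    )
    print(verdict)
```

Passing the judge in as a plain callable keeps the sketch independent of any particular provider, and the explicit `UNPARSEABLE` outcome avoids silently counting an off-format judge reply as either good or bad.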
